Parallel Distributional Prioritized Deep Reinforcement Learning for Unmanned Aerial Vehicles


Sep 01, 2023

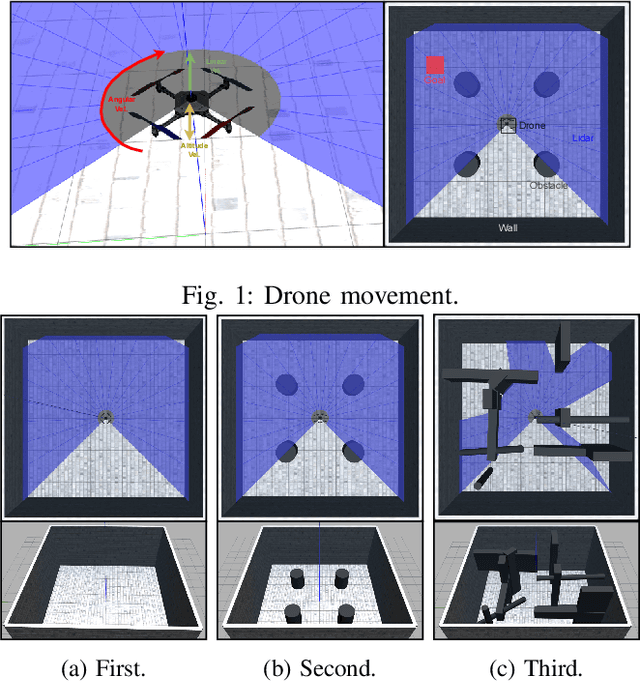

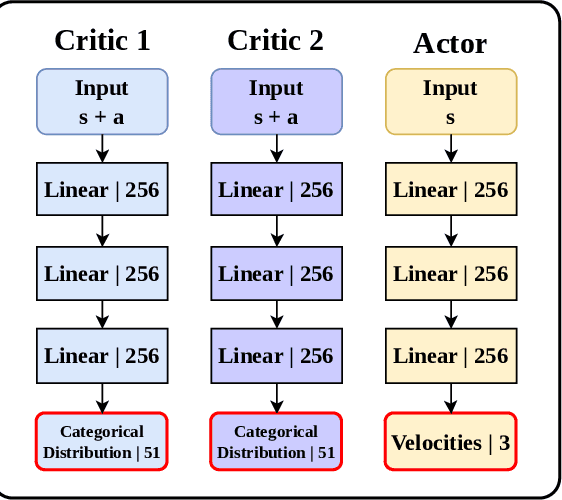

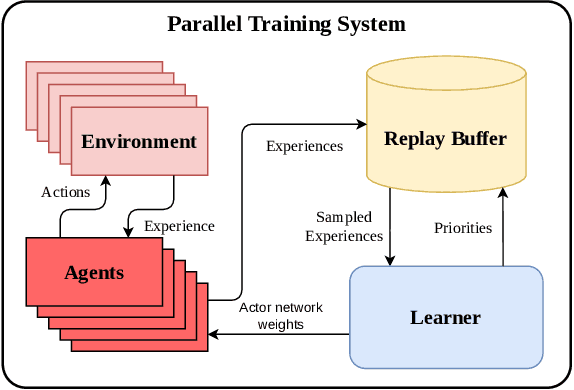

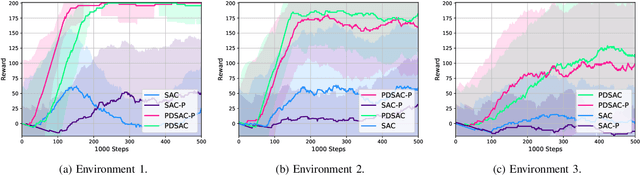

This work presents a study on parallel and distributional deep reinforcement learning applied to the mapless navigation of UAVs. We developed an approach based on the Soft Actor-Critic (SAC) method, producing a distributed and distributional variant named PDSAC, and compared it with a second agent based on the traditional SAC algorithm. We also incorporated a prioritized memory system into both. The UAV used in the study is based on the Hydrone vehicle, a hybrid quadrotor operating solely in the air. The inputs to the system are 23 range readings from a Lidar sensor and the distance and angles to a desired goal, while the outputs are the linear, angular, and altitude velocities. The methods were trained in environments of varying complexity, from obstacle-free environments to environments with multiple obstacles in three dimensions. The results show a clear improvement in navigation capability for the proposed approach over the SAC-based agent for the same number of training steps. In summary, this work presents a study on deep reinforcement learning, with distributed and distributional variants, applied to the mapless navigation of drones in three dimensions, with promising results and potential applications in various contexts related to robotics and autonomous air navigation.
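The abstract does not say which prioritization scheme the memory system uses. As an illustration only, the following minimal sketch assumes proportional prioritization in the style of prioritized experience replay, where transitions with larger temporal-difference error are sampled more often. The class name `PrioritizedReplay`, the hyperparameters `alpha`, `beta`, and `eps`, and their default values are hypothetical and are not taken from the paper.

```python
import random


class PrioritizedReplay:
    """Proportional prioritized replay buffer (generic sketch, not the paper's code)."""

    def __init__(self, capacity, alpha=0.6, eps=1e-5, seed=0):
        self.capacity = capacity
        self.alpha = alpha  # assumed value: how strongly priorities skew sampling
        self.eps = eps      # assumed value: keeps every priority strictly positive
        self.data = []
        self.priorities = []
        self.pos = 0        # next slot to overwrite once the buffer is full
        self.rng = random.Random(seed)

    def add(self, transition):
        # New transitions receive the current maximum priority,
        # so they are likely to be sampled soon after insertion.
        max_p = max(self.priorities, default=1.0)
        if len(self.data) < self.capacity:
            self.data.append(transition)
            self.priorities.append(max_p)
        else:
            self.data[self.pos] = transition
            self.priorities[self.pos] = max_p
        self.pos = (self.pos + 1) % self.capacity

    def sample(self, batch_size, beta=0.4):
        n = len(self.data)
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        probs = [s / total for s in scaled]
        idx = self.rng.choices(range(n), weights=probs, k=batch_size)
        # Importance-sampling weights offset the bias of non-uniform sampling.
        weights = [(n * probs[i]) ** (-beta) for i in idx]
        max_w = max(weights)
        weights = [w / max_w for w in weights]
        return idx, [self.data[i] for i in idx], weights

    def update(self, idx, td_errors):
        # Priority is the absolute TD error plus a small constant.
        for i, err in zip(idx, td_errors):
            self.priorities[i] = abs(err) + self.eps
```

In a SAC-style training loop, one would typically call `sample` to draw a batch, scale each critic loss term by the returned weight, and then pass the resulting TD errors back through `update`.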
