Overlapping Trace Norms in Multi-View Learning

Paper and Code

Apr 27, 2014

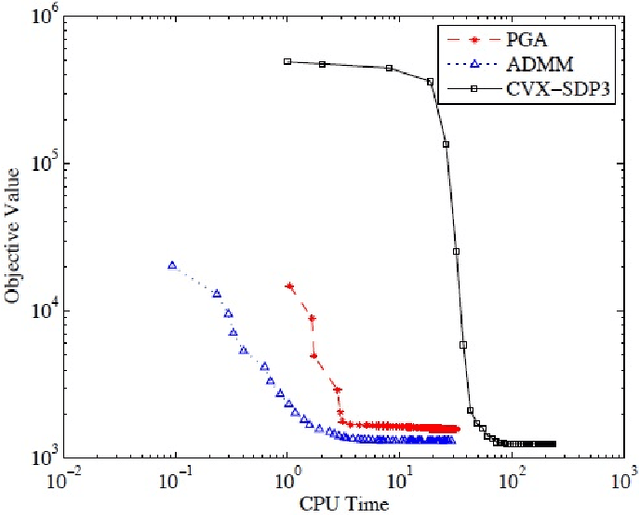

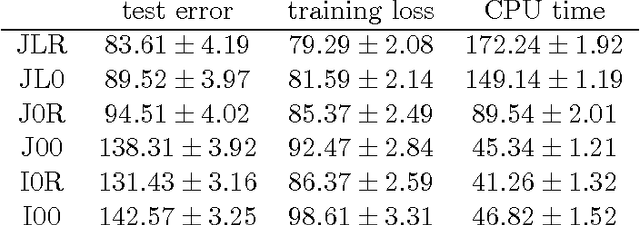

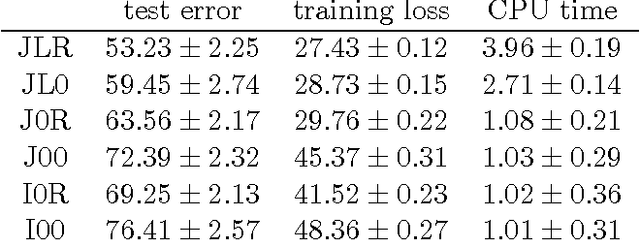

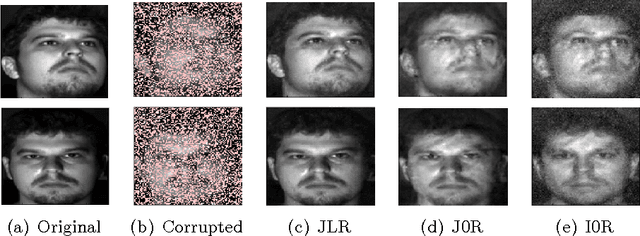

Multi-view learning leverages correlations between different sources of data to make predictions in one view based on observations in another view. A popular approach is to assume that both the correlations between the views and the view-specific covariances have a low-rank structure, leading to inter-battery factor analysis, a model closely related to canonical correlation analysis. We propose a convex relaxation of this model using structured norm regularization. Further, we extend the convex formulation to a robust version by adding an ℓ1-penalized matrix to our estimator, similar to convex robust PCA. We develop and compare scalable algorithms for several convex multi-view models. We show experimentally that the view-specific correlations improve data imputation performance, as well as labeling accuracy in real-world multi-label prediction tasks.
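
The abstract mentions two convex penalties: a trace (nuclear) norm that encourages low rank and an ℓ1 penalty that encourages sparsity, as in convex robust PCA. As a rough illustration only, the sketch below shows the standard proximal operators for these two penalties, which are common building blocks in proximal-gradient solvers for such problems. It is not the paper's algorithm or its overlapping-norm formulation; the function names `svt` and `soft_threshold` are hypothetical and chosen here for illustration.

```python
import numpy as np

def svt(X, tau):
    """Singular value soft-thresholding.

    Proximal operator of tau * ||.||_* (trace norm) evaluated at X:
    shrink every singular value by tau and clip at zero.
    """
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    # Scale the columns of U by the shrunken singular values, then recombine.
    return (U * s_shrunk) @ Vt

def soft_threshold(X, tau):
    """Entrywise soft-thresholding.

    Proximal operator of tau * ||.||_1 evaluated at X:
    shrink every entry toward zero by tau.
    """
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)

if __name__ == "__main__":
    # Assumed toy input: a diagonal matrix with singular values 3 and 1.
    X = np.diag([3.0, 1.0])
    L = svt(X, 1.0)             # the smaller singular value is zeroed out
    S = soft_threshold(X, 2.0)  # only the large entry survives
    print(np.linalg.matrix_rank(L), S)
```

Shrinking singular values reduces rank, while shrinking entries produces sparsity; a robust low-rank-plus-sparse estimator would typically alternate steps of this kind on the two components.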
