Overcoming Negative Transfer: A Survey

Sep 02, 2020

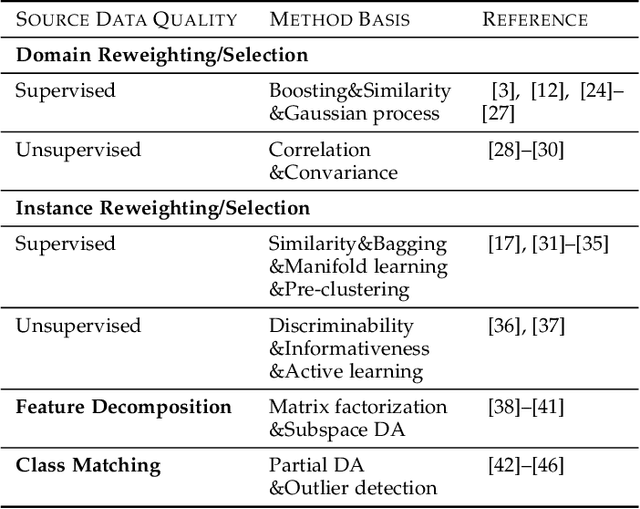

Transfer learning aims to help a target task that has little or no training data by leveraging knowledge from one or more related auxiliary tasks. In practice, the success of transfer learning is not guaranteed: negative transfer is a long-standing problem that is well recognized within the transfer learning community. How to overcome negative transfer has been studied for a long time and has attracted increasing attention in recent years. It is therefore both necessary and challenging to review the relevant research comprehensively. This survey attempts to analyze the factors related to negative transfer and summarizes the theories and advances in overcoming it from four crucial aspects: source data quality, target data quality, domain divergence, and generic algorithms, giving readers insight into the current state of research and its main ideas. Additionally, we provide general guidelines on how to detect and overcome negative transfer on real data, covering negative transfer detection, datasets, baselines, and general routines. The survey offers researchers a framework for better understanding and identifying the research status, fundamental questions, open challenges, and future directions of the field.
