Ordinal Regression as Structured Classification

Paper and Code

May 31, 2019

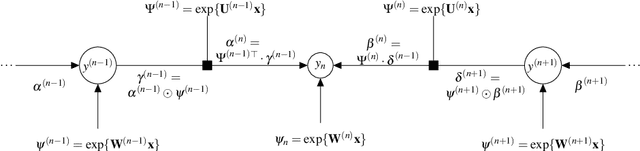

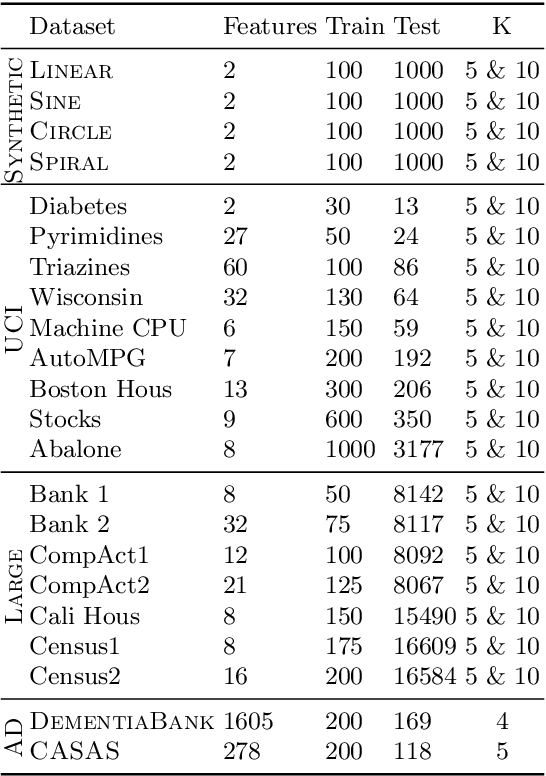

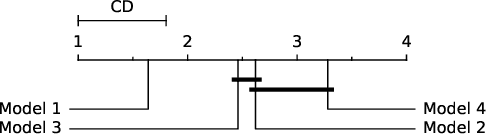

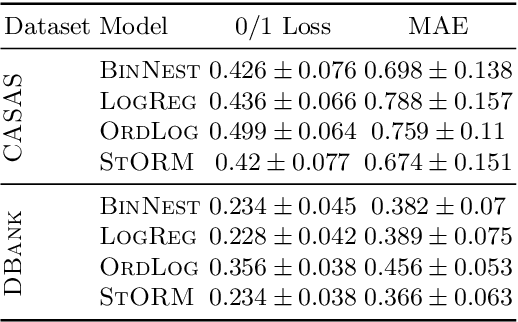

This paper extends the class of ordinal regression models with a structured interpretation of the problem by applying a novel treatment of encoded labels. The net effect of this is to transform the underlying problem from an ordinal regression task to a (structured) classification task which we solve with conditional random fields, thereby achieving a coherent and probabilistic model in which all model parameters are jointly learnt. Importantly, we show that although we have cast ordinal regression to classification, our method still fall within the class of decomposition methods in the ordinal regression ontology. This is an important link since our experience is that many applications of machine learning to healthcare ignores completely the important nature of the label ordering, and hence these approaches should considered naive in this ontology. We also show that our model is flexible both in how it adapts to data manifolds and in terms of the operations that are available for practitioner to execute. Our empirical evaluation demonstrates that the proposed approach overwhelmingly produces superior and often statistically significant results over baseline approaches on forty popular ordinal regression models, and demonstrate that the proposed model significantly out-performs baselines on synthetic and real datasets. Our implementation, together with scripts to reproduce the results of this work, will be available on a public GitHub repository.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge