OptLayer - Practical Constrained Optimization for Deep Reinforcement Learning in the Real World

Paper and Code

Feb 23, 2018

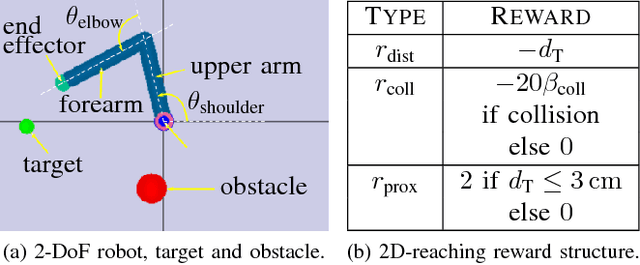

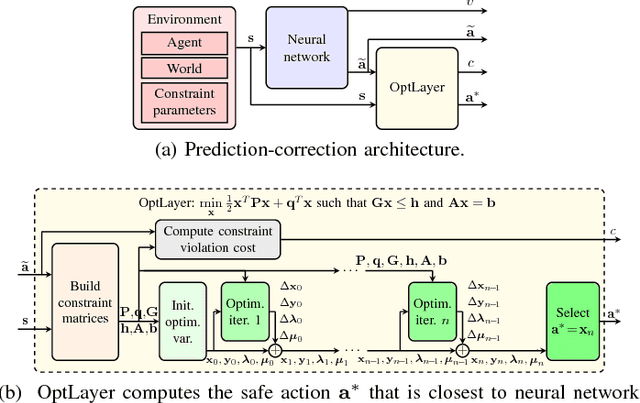

While deep reinforcement learning techniques have recently produced considerable achievements on many decision-making problems, their use in robotics has largely been limited to simulated worlds or restricted motions, since unconstrained trial-and-error interactions in the real world can have undesirable consequences for the robot or its environment. To overcome such limitations, we propose a novel reinforcement learning architecture, OptLayer, that takes as inputs possibly unsafe actions predicted by a neural network and outputs the closest actions that satisfy chosen constraints. While learning control policies often requires carefully crafted rewards and penalties while exploring the range of possible actions, OptLayer ensures that only safe actions are actually executed and unsafe predictions are penalized during training. We demonstrate the effectiveness of our approach on robot reaching tasks, both simulated and in the real world.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge