Optimization Fabrics for Behavioral Design

Nov 04, 2020

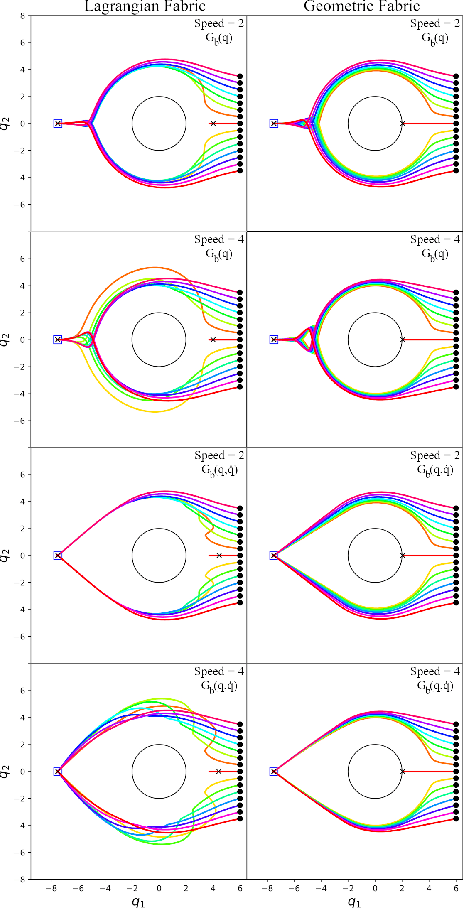

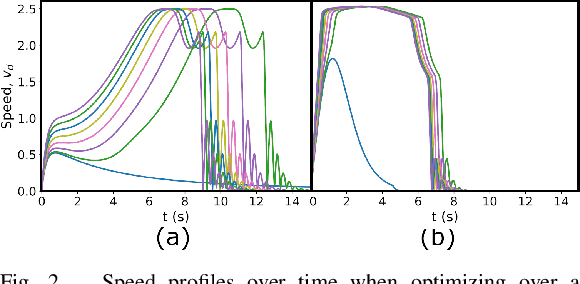

Second-order differential equations define smooth system behavior. In general, there is no guarantee that such a system will behave well when forced by a potential function, but in some cases it does, and it may then exhibit smooth optimization properties such as convergence to a local minimum of the potential. This property is desirable and inherently linked to asymptotic stability. This paper presents a comprehensive theory of optimization fabrics, which are second-order differential equations that encode nominal behaviors on a space and are guaranteed to optimize when forced by a potential function. Optimization fabrics, or fabrics for short, can encode commonalities among optimization problems that reflect the structure of the space itself, enabling smooth optimization processes to navigate each problem intelligently even when the potential function is simple and relatively naive. Importantly, optimization over a fabric is asymptotically stable, so optimization fabrics constitute a building block for stable system design.
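
To make the idea of "forcing" a second-order system with a potential concrete, the following is a minimal sketch of the simplest case one could imagine, not the paper's construction. It assumes a flat Euclidean space with no nominal behavior term, a hypothetical quadratic potential `psi(x) = 0.5 * ||x - GOAL||^2`, and linear damping, so the dynamics are `x'' = -grad psi(x) - damping * x'`. The names `GOAL`, `grad_potential`, and `simulate`, and all numeric values, are illustrative assumptions.

```python
import numpy as np

# Hypothetical goal: the unique minimum of the assumed quadratic potential.
GOAL = np.array([1.0, -2.0])


def grad_potential(x):
    """Gradient of the assumed potential psi(x) = 0.5 * ||x - GOAL||^2."""
    return x - GOAL


def simulate(x0, v0, damping=2.0, dt=0.01, steps=5000):
    """Integrate x'' = -grad psi(x) - damping * x' with semi-implicit Euler."""
    x = np.array(x0, dtype=float)
    v = np.array(v0, dtype=float)
    for _ in range(steps):
        # Acceleration: potential forcing plus linear damping (both assumptions).
        a = -grad_potential(x) - damping * v
        v = v + dt * a  # update velocity first
        x = x + dt * v  # then position, using the updated velocity
    return x, v


# Start away from the goal with a nonzero initial velocity.
x_final, v_final = simulate(x0=[4.0, 3.0], v0=[0.0, 5.0])
print("final position:", x_final)
print("final speed:", np.linalg.norm(v_final))
```

In this toy setting the trajectory comes to rest at the minimum of the potential regardless of the initial velocity, which is the kind of convergence the abstract refers to. A fabric would additionally contribute a nominal behavior term to the acceleration while preserving that convergence.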
