Optimal Sample Complexity of Reinforcement Learning for Uniformly Ergodic Discounted Markov Decision Processes


Feb 16, 2023

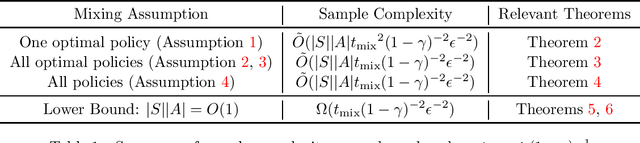

We consider the optimal sample complexity theory of tabular reinforcement learning (RL) for controlling the infinite-horizon discounted reward in a Markov decision process (MDP). Minimax-optimal complexity results have been developed for tabular RL in this setting, leading to a sample complexity dependence on $\gamma$ and $\epsilon$ of the form $\tilde \Theta((1-\gamma)^{-3}\epsilon^{-2})$, where $\gamma$ is the discount factor and $\epsilon$ is the error tolerance of the solution. However, in many applications of interest, the optimal policy (or all policies) will induce mixing. We show that in these settings the optimal minimax complexity is $\tilde \Theta(t_{\text{minorize}}(1-\gamma)^{-2}\epsilon^{-2})$, where $t_{\text{minorize}}$ is a measure of mixing that is within a constant factor of the total variation mixing time. Our analysis is based on regeneration-type ideas, which we believe are of independent interest, since they can be used to study related problems for general state space MDPs.
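To make the gap between the two rates concrete, the following is a minimal sketch that evaluates both scalings with all constants and logarithmic factors dropped (the $\tilde \Theta$ hides them, so the numbers are orders of magnitude, not sample counts). The function names are illustrative and not taken from the paper. The ratio of the two rates is $1/(t_{\text{minorize}}(1-\gamma))$, so under this simplification the mixing-based rate is the smaller one whenever $t_{\text{minorize}} < 1/(1-\gamma)$.

```python
def worst_case_rate(gamma: float, eps: float) -> float:
    """Scaling (1 - gamma)^-3 * eps^-2, ignoring constants and log factors."""
    return (1.0 - gamma) ** -3 * eps ** -2


def mixing_rate(t_minorize: float, gamma: float, eps: float) -> float:
    """Scaling t_minorize * (1 - gamma)^-2 * eps^-2, ignoring constants and log factors."""
    return t_minorize * (1.0 - gamma) ** -2 * eps ** -2


def improvement_factor(t_minorize: float, gamma: float) -> float:
    """Ratio worst_case_rate / mixing_rate; the eps dependence cancels."""
    return 1.0 / (t_minorize * (1.0 - gamma))


if __name__ == "__main__":
    # Hypothetical values chosen only for illustration.
    gamma, eps, t_minorize = 0.99, 0.1, 10.0
    print("worst-case scaling:  ", worst_case_rate(gamma, eps))
    print("mixing-based scaling:", mixing_rate(t_minorize, gamma, eps))
    print("improvement factor:  ", improvement_factor(t_minorize, gamma))
```

With these illustrative values the effective horizon $1/(1-\gamma)$ is 100 and $t_{\text{minorize}}$ is 10, so the mixing-based scaling is smaller by roughly a factor of 10.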
