Opponent Modelling with Local Information Variational Autoencoders

Jun 16, 2020

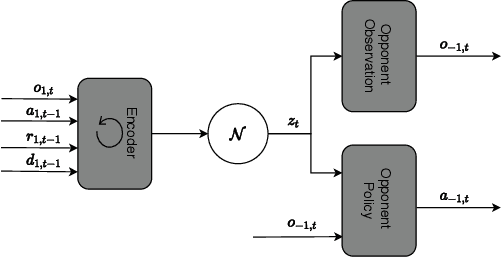

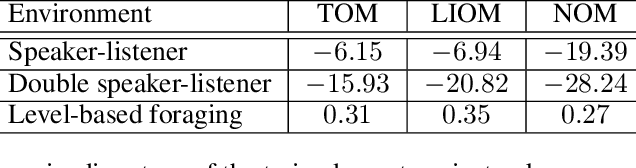

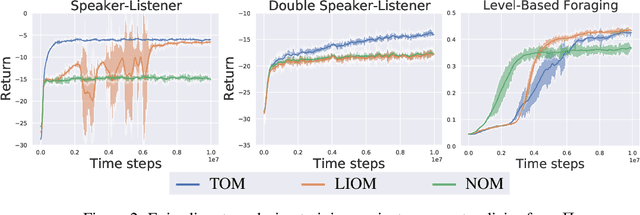

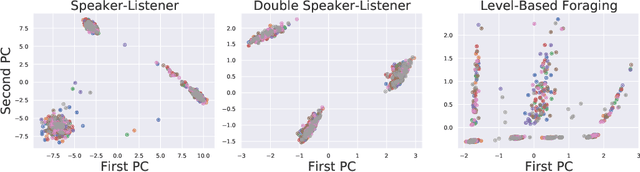

Modelling the behaviours of other agents (opponents) is essential for understanding how agents interact and making effective decisions. Existing methods for opponent modelling commonly assume knowledge of the local observations and chosen actions of the modelled opponents, which can significantly limit their applicability. We propose a new modelling technique based on variational autoencoders which uses only the local observations of the agent under control: its observed world state, chosen actions, and received rewards. The model is jointly trained with the agent's decision policy using deep reinforcement learning techniques. We provide a comprehensive evaluation and ablation study in diverse multi-agent tasks, showing that our method achieves significantly higher returns than a baseline method which does not use opponent modelling, and comparable performance to an ideal baseline which has full access to opponent information.
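To make the idea concrete, here is a minimal sketch of the encoder side of a variational autoencoder that sees only the controlled agent's local trajectory (observations, actions, rewards). It is an illustration under stated assumptions, not the authors' implementation: the linear encoder, the averaging over time steps, and the names `encode`, `reparameterize`, and `kl_to_standard_normal` are all hypothetical, and the decoder, the reconstruction term, and the reinforcement learning loop are omitted. In a full system the sampled latent `z` would presumably be passed to the policy as an additional input.

```python
import math
import random


def encode(trajectory, weights):
    """Map a local trajectory to the mean and log-variance of a diagonal Gaussian.

    trajectory: list of (observation, action, reward) tuples from the
        controlled agent only; observation is a list of floats.
    weights: hypothetical linear encoder, one (mean_row, logvar_row) pair
        per latent dimension.
    """
    # Flatten each step and average over time so the input size is fixed.
    steps = [list(obs) + [float(act), float(rew)] for obs, act, rew in trajectory]
    features = [sum(col) / len(steps) for col in zip(*steps)]
    mean = [sum(w * x for w, x in zip(m_row, features)) for m_row, _ in weights]
    logvar = [sum(w * x for w, x in zip(v_row, features)) for _, v_row in weights]
    return mean, logvar


def reparameterize(mean, logvar, rng):
    """Sample z = mean + std * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mean, logvar)]


def kl_to_standard_normal(mean, logvar):
    """KL(N(mean, diag(exp(logvar))) || N(0, I)), the usual VAE regulariser."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mean, logvar))


# Toy usage: two steps, 2-D observations, scalar action and reward.
rng = random.Random(0)
trajectory = [([0.1, 0.2], 1.0, 0.0), ([0.3, 0.1], 0.0, 1.0)]
weights = [
    ([0.5, -0.5, 0.1, 0.2], [0.0, 0.0, 0.0, 0.0]),
    ([0.1, 0.3, -0.2, 0.4], [0.0, 0.0, 0.0, 0.0]),
]
mean, logvar = encode(trajectory, weights)
z = reparameterize(mean, logvar, rng)  # latent that could condition the policy
kl = kl_to_standard_normal(mean, logvar)
```

Note that no opponent observations or actions appear anywhere in the inputs, which is the property the abstract emphasises. A practical encoder would likely be a learned neural network over the full sequence, not a fixed linear map over time-averaged features.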
