Open-Set Recognition with Gaussian Mixture Variational Autoencoders

Paper and Code

Jun 03, 2020

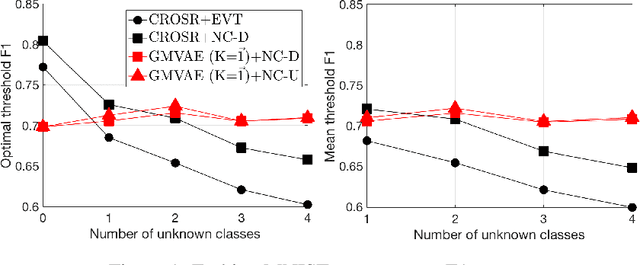

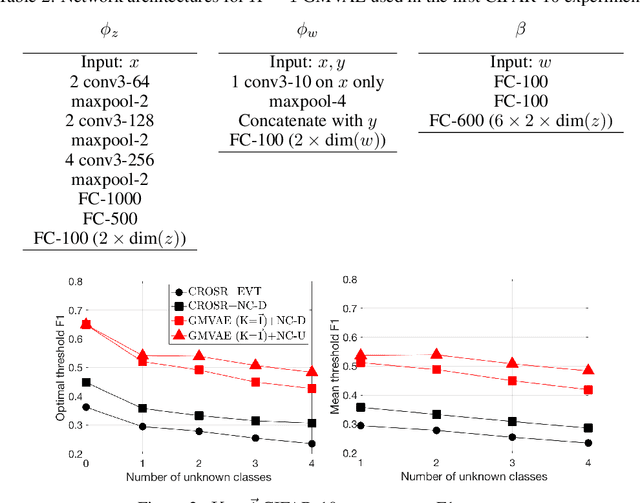

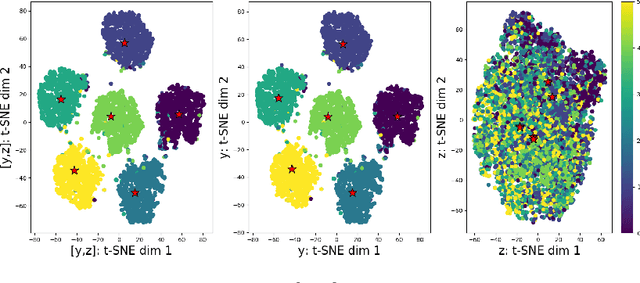

At inference time, open-set classification either assigns a sample to one of the classes seen during training or rejects it as belonging to an unknown class. Existing deep open-set classifiers train explicit closed-set classifiers, in some cases using reconstruction as a disjoint objective, which we find dilutes the latent representation's ability to distinguish unknown classes. In contrast, we train our model to learn reconstruction and class-based clustering in the latent space cooperatively. In extensive experiments, supported by analytical results, our Gaussian mixture variational autoencoder (GMVAE) achieves more accurate and robust open-set classification, with an average F1 improvement of 29.5%.
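To make the accept-or-reject decision concrete, here is a minimal sketch of open-set classification over a class-clustered latent space. It is not the paper's method: the abstract does not state the rejection rule, so the nearest-centroid rule, the Euclidean distance, the fixed `threshold`, and the names `fit_centroids` and `classify_open_set` are all assumptions made for illustration. The latent codes are made-up toy values standing in for an encoder's output.

```python
import math


def fit_centroids(latents, labels):
    """Average the latent vectors of each known class (assumed clustering summary)."""
    sums, counts = {}, {}
    for z, y in zip(latents, labels):
        acc = sums.setdefault(y, [0.0] * len(z))
        for i, value in enumerate(z):
            acc[i] += value
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def classify_open_set(z, centroids, threshold):
    """Return the nearest known class, or None (unknown) if it is farther than threshold."""
    best_label, best_dist = None, math.inf
    for label, centroid in centroids.items():
        dist = math.dist(z, centroid)  # Euclidean distance, an illustrative choice
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label if best_dist <= threshold else None


# Toy 2-D latent codes for two known classes (hypothetical values).
train_z = [(0.0, 0.1), (0.2, -0.1), (5.0, 5.1), (4.8, 4.9)]
train_y = ["a", "a", "b", "b"]
centroids = fit_centroids(train_z, train_y)

near_a = classify_open_set((0.1, 0.2), centroids, threshold=1.0)
far = classify_open_set((10.0, -10.0), centroids, threshold=1.0)
print(near_a, far)
```

In this toy setup the first query lies close to the centroid of class `a` and is accepted, while the second lies far from both centroids and is rejected as unknown. The intuition matching the abstract is that tighter, better-separated class clusters in the latent space leave more room for such a rejection rule to work.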

* 12 pages including 8 figures and 4 tables, plus 6 pages of supplementary material
