Online Classification Using a Voted RDA Method

Oct 17, 2013

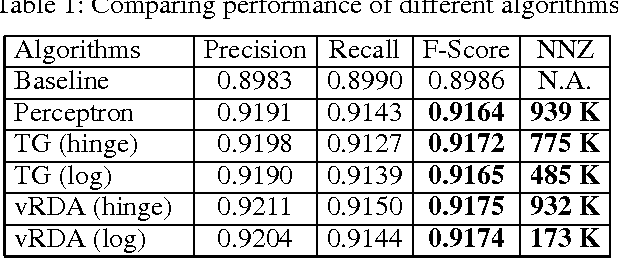

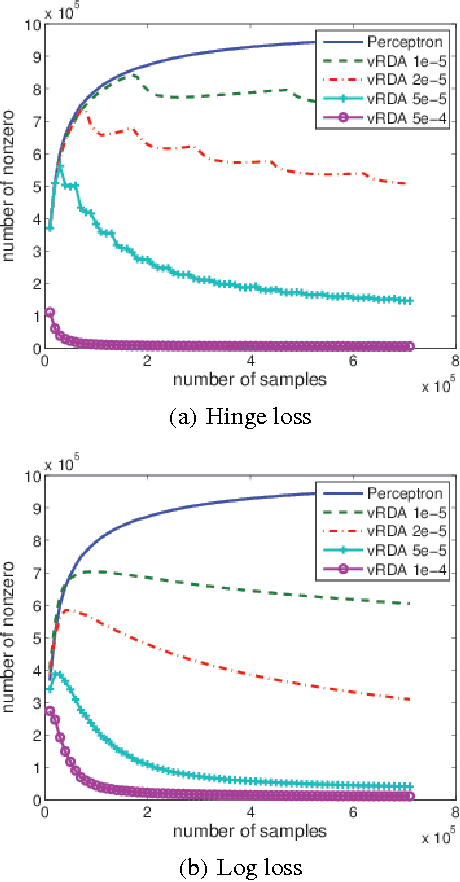

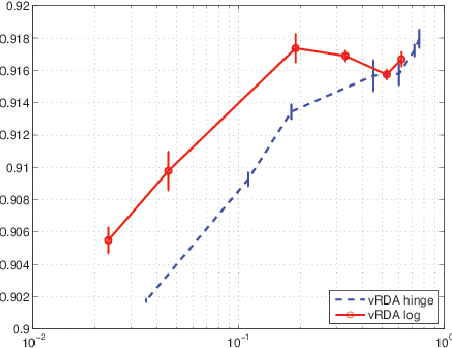

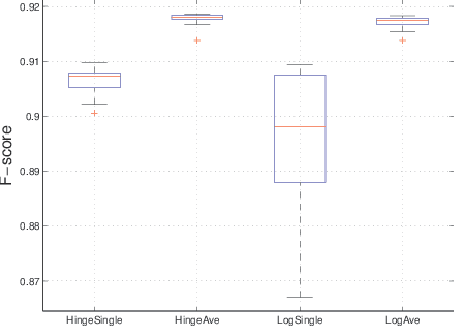

We propose a voted dual averaging method for online classification problems with explicit regularization. This method employs the update rule of the regularized dual averaging (RDA) method, but only on the subsequence of training examples where a classification error is made. We derive a bound on the number of mistakes made by this method on the training set, as well as its generalization error rate. We also introduce the concept of relative strength of regularization, and show how it affects the mistake bound and generalization performance. We experimented with the method using $\ell_1$ regularization on a large-scale natural language processing task, and obtained state-of-the-art classification performance with fairly sparse models.
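The mistake-driven update described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a hinge-style subgradient $-y_i x_i$ on each mistake, the auxiliary function $\frac{1}{2}\|w\|_2^2$ with a $\gamma\sqrt{k}$ schedule (where $k$ counts mistakes so far), and a voted-perceptron-style prediction that weights each intermediate model by the number of examples it classified correctly. Under those assumptions the $\ell_1$-regularized RDA step has the closed form $w = -(\sqrt{k}/\gamma)\,\mathrm{soft}(\bar{g}, \lambda)$, where $\bar{g}$ is the average subgradient over the mistakes. The names `voted_rda_l1`, `predict_voted`, `lam`, and `gamma` are hypothetical.

```python
import numpy as np


def soft_threshold(g, lam):
    """Shrink each coordinate of g toward zero by lam (the l1 proximal step)."""
    return np.sign(g) * np.maximum(np.abs(g) - lam, 0.0)


def voted_rda_l1(X, y, lam=0.01, gamma=1.0, epochs=5):
    """Sketch of a mistake-driven RDA learner with l1 regularization.

    Assumptions (not taken from the paper): subgradient -y_i * x_i on each
    mistake, and a sqrt(k)/gamma scaling where k is the mistake count.
    Returns a list of (weights, survival_count) pairs for voting.
    """
    n, d = X.shape
    w = np.zeros(d)
    g_sum = np.zeros(d)   # running sum of subgradients over mistakes only
    k = 0                 # number of mistakes so far
    survived = 0          # examples the current w classified correctly
    snapshots = []
    for _ in range(epochs):
        for x_i, y_i in zip(X, y):
            if y_i * (w @ x_i) <= 0:
                # Mistake: store the outgoing model, then apply the RDA step.
                snapshots.append((w.copy(), survived))
                k += 1
                g_sum += -y_i * x_i
                g_bar = g_sum / k
                w = -(np.sqrt(k) / gamma) * soft_threshold(g_bar, lam)
                survived = 0
            else:
                # Correct prediction: no update, the current model survives.
                survived += 1
    snapshots.append((w.copy(), survived))
    return snapshots


def predict_voted(snapshots, X):
    """Predict +1/-1 by a survival-count-weighted vote over stored models."""
    votes = sum(c * np.sign(X @ w) for w, c in snapshots)
    return np.where(votes >= 0, 1, -1)


if __name__ == "__main__":
    # Toy data: the label depends only on the first feature.
    X = np.array([[2.0, 0.0], [1.0, 0.0], [-1.0, 0.0], [-2.0, 0.0]])
    y = np.array([1, 1, -1, -1])
    models = voted_rda_l1(X, y, lam=0.01, epochs=2)
    print(predict_voted(models, X))
```

Because updates happen only on mistakes, the step count `k` grows with the number of errors rather than the number of examples seen. In this sketch, coordinates whose average subgradient stays within `lam` of zero are set exactly to zero, which is how the soft-threshold step yields sparse weights.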

* 23 pages, 5 figures
