One-way Explainability Isn't The Message


May 05, 2022

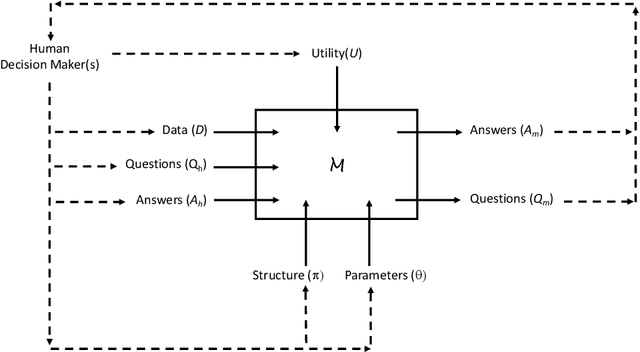

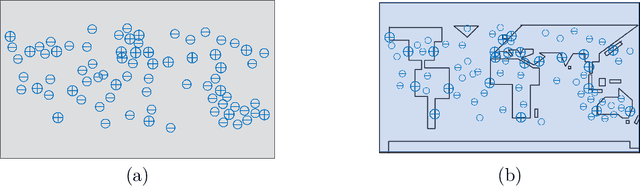

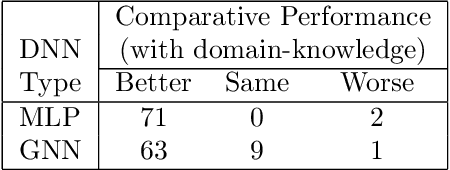

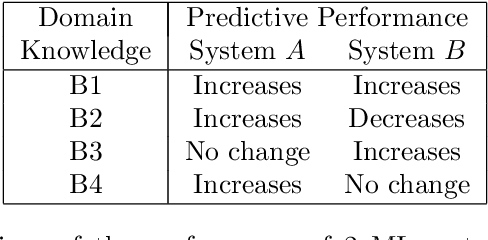

Recent engineering developments in specialised computational hardware, data-acquisition and storage technology have seen the emergence of Machine Learning (ML) as a powerful form of data analysis with widespread applicability beyond its historical roots in the design of autonomous agents. However -- possibly because of its origins in the development of agents capable of self-discovery -- relatively little attention has been paid to the interaction between people and ML. In this paper we are concerned with the use of ML in automated or semi-automated tools that assist one or more human decision makers. We argue that requirements on both human and machine in this context are significantly different to the use of ML either as part of autonomous agents for self-discovery or as part of statistical data analysis. Our principal position is that the design of such human-machine systems should be driven by repeated, two-way intelligibility of information rather than one-way explainability of the ML-system's recommendations. Iterated rounds of intelligible information exchange, we think, will characterise the kinds of collaboration that will be needed to understand complex phenomena for which neither man nor machine has complete answers. We propose operational principles -- we call them Intelligibility Axioms -- to guide the design of a collaborative decision-support system. The principles are concerned with: (a) what it means for information provided by the human to be intelligible to the ML system; and (b) what it means for an explanation provided by an ML system to be intelligible to a human. Using examples from the literature on the use of ML for drug-design and in medicine, we demonstrate cases where the conditions of the axioms are met. We describe some additional requirements needed for the design of a truly collaborative decision-support system.
