On the Rademacher Complexity of Linear Hypothesis Sets

Paper and Code

Jul 21, 2020

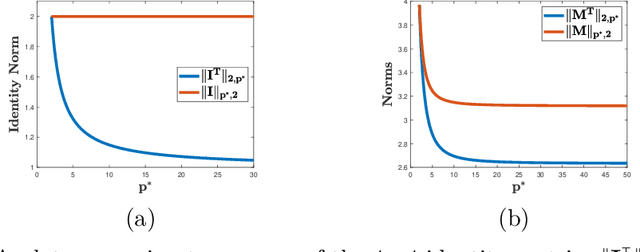

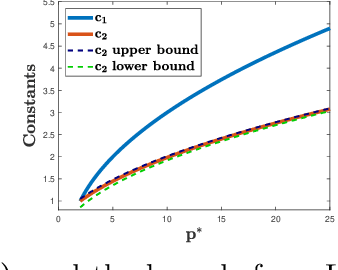

Linear predictors form a rich class of hypotheses used in a variety of learning algorithms. We present a tight analysis of the empirical Rademacher complexity of the family of linear hypothesis classes with weight vectors bounded in $\ell_p$-norm for any $p \geq 1$. This provides a tight analysis of generalization using these hypothesis sets and helps derive sharp data-dependent learning guarantees. We give both upper and lower bounds on the Rademacher complexity of these families and show that our bounds improve upon or match existing bounds, which are known only for $1 \leq p \leq 2$.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge