On the Number of Linear Functions Composing Deep Neural Network: Towards a Refined Definition of Neural Networks Complexity


Oct 23, 2020

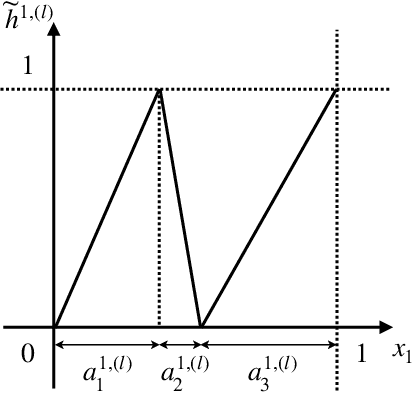

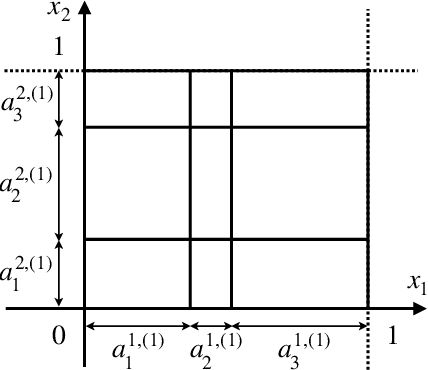

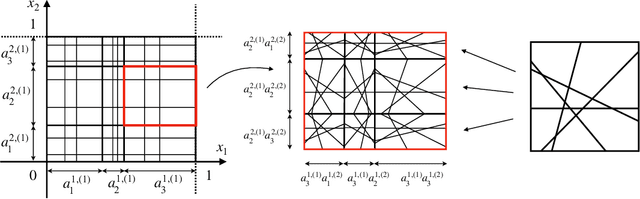

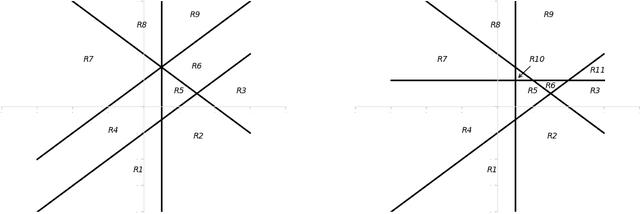

The classical approach to measuring the expressive power of deep neural networks (DNNs) with piecewise linear activations is to count their maximum number of linear regions. However, when comparing two different models, fully connected networks and permutation-invariant networks, this measure cannot clearly distinguish them in terms of expressivity. To address this, we propose a refined definition of deep neural network complexity. Instead of counting the number of linear regions directly, we first introduce an equivalence relation between the linear functions composing a DNN and then count those functions relative to that equivalence relation. We then study the new complexity measure and verify that it has the expected properties: it clearly distinguishes between the two models mentioned above, it is consistent with the classical measure, and it increases exponentially with depth. This last point confirms the high expressive power of deep networks.
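
To make the classical measure concrete, the following is a minimal sketch, not the authors' method, that estimates how many linear pieces a small ReLU network has by counting the distinct on/off activation patterns observed on a grid of sample inputs. Each pattern fixes one linear function of the input, so the count is a sampled proxy for the number of linear regions. The helper names (`relu_activation_pattern`, `count_sampled_patterns`, `random_network`), the layer widths, and the sampling grid are all assumptions chosen for illustration, and the sketch does not implement the paper's equivalence relation, which the abstract does not define.

```python
import random


def relu_activation_pattern(weights, biases, x):
    """Return the on/off state of every ReLU unit for input x, as a tuple."""
    pattern = []
    h = list(x)
    for W, b in zip(weights, biases):
        # Pre-activation of each unit in this layer.
        pre = [sum(w * v for w, v in zip(row, h)) + b_i
               for row, b_i in zip(W, b)]
        pattern.extend(p > 0 for p in pre)
        # ReLU output feeds the next layer.
        h = [max(p, 0.0) for p in pre]
    return tuple(pattern)


def count_sampled_patterns(weights, biases, points):
    """Count distinct activation patterns seen on the given sample points."""
    return len({relu_activation_pattern(weights, biases, x) for x in points})


def random_network(widths, rng):
    """Build Gaussian weights and biases for a fully connected ReLU network."""
    weights, biases = [], []
    for n_in, n_out in zip(widths[:-1], widths[1:]):
        weights.append([[rng.gauss(0, 1) for _ in range(n_in)]
                        for _ in range(n_out)])
        biases.append([rng.gauss(0, 1) for _ in range(n_out)])
    return weights, biases


# Hypothetical setup: 2 inputs, two hidden layers of 4 ReLU units each.
rng = random.Random(0)
grid = [(-3 + 6 * i / 59, -3 + 6 * j / 59)
        for i in range(60) for j in range(60)]
weights, biases = random_network([2, 4, 4], rng)
n_patterns = count_sampled_patterns(weights, biases, grid)
print("distinct activation patterns on the grid:", n_patterns)
```

Because the count comes from a finite grid, it can miss small regions, and it counts patterns rather than the distinct linear functions they induce. A measure in the spirit of the abstract would additionally group the induced linear functions into equivalence classes before counting.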
