On-the-fly Detection of User Engagement Decrease in Spontaneous Human-Robot Interaction, International Journal of Social Robotics, 2019

Apr 20, 2020

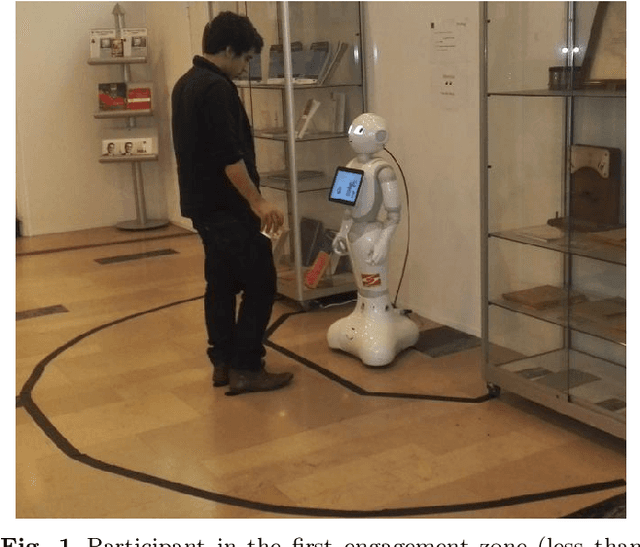

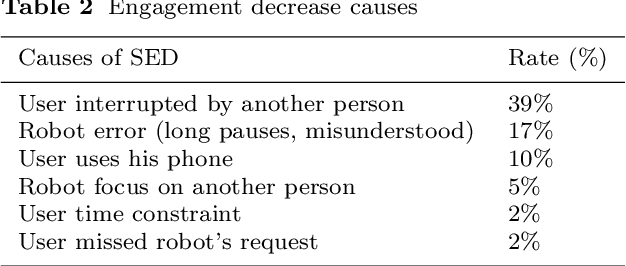

In this paper, we consider the detection of a decrease in engagement by users spontaneously interacting with a socially assistive robot in a public space. We first describe the UE-HRI dataset, which collects spontaneous human-robot interactions following the guidelines provided by the affective computing research community for collecting data "in the wild". We then analyze the users' behaviors, focusing on proxemics, gaze, head motion, facial expressions, and speech during interactions with the robot. Finally, we investigate the use of deep learning techniques (recurrent and deep neural networks) to detect user engagement decrease in real time. The results of this work highlight, in particular, the relevance of taking into account the temporal dynamics of a user's behavior. Allowing a buffer delay of 1 to 2 seconds improves the performance of decisions on user engagement.
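To illustrate the buffer-delay idea, here is a minimal sketch. It is not the paper's model: it assumes a hypothetical upstream component that emits a per-frame score in [0, 1] for "engagement is decreasing", and it simply averages the scores over a short look-ahead window before thresholding. The class name `BufferedDecision`, the threshold value, and the averaging rule are all assumptions made for illustration.

```python
from collections import deque
from statistics import mean


class BufferedDecision:
    """Delay each decision until `delay_frames` later frames have been seen.

    Hypothetical helper: the decision for frame t is emitted at frame
    t + delay_frames, using the scores of frames t .. t + delay_frames.
    """

    def __init__(self, delay_frames, threshold=0.5):
        self.delay_frames = delay_frames
        self.threshold = threshold  # assumed decision threshold
        # The window holds the target frame plus the look-ahead frames.
        self.window = deque(maxlen=delay_frames + 1)

    def push(self, score):
        """Add one per-frame score; return a decision once the buffer is full."""
        self.window.append(score)
        if len(self.window) < self.window.maxlen:
            return None  # still filling the buffer, no decision yet
        # Assumed rule: flag a decrease when the windowed mean crosses the threshold.
        return mean(self.window) >= self.threshold


# Usage: a look-ahead of 2 frames over a short stream of scores.
detector = BufferedDecision(delay_frames=2, threshold=0.5)
decisions = [detector.push(s) for s in [0.1, 0.2, 0.9, 0.9, 0.9]]
```

With a hypothetical frame rate of 10 frames per second, a buffer delay of 1 to 2 seconds would correspond to `delay_frames` between 10 and 20. The trade-off is that every decision arrives that much later, in exchange for seeing more of the user's behavior before committing.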