On the Convergence of Memory-Based Distributed SGD

Paper and Code

May 30, 2019

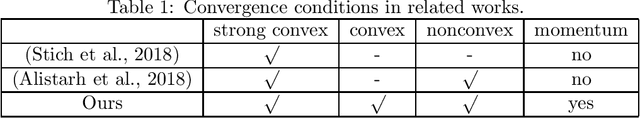

Distributed stochastic gradient descent (DSGD) has been widely used for optimizing large-scale machine learning models, including both convex and non-convex models. With the rapid growth of model size, communication cost has become the bottleneck of traditional DSGD, and many communication compression methods have recently been proposed. Memory-based distributed stochastic gradient descent (M-DSGD) is one of the efficient methods: each worker communicates only a sparse vector in each iteration, so the communication cost is small. Recent works establish the convergence rate of M-DSGD when it adopts vanilla SGD. However, there is still a lack of convergence theory for M-DSGD when it adopts momentum SGD. In this paper, we propose a universal convergence analysis for M-DSGD by introducing a transformation equation. The transformation equation describes the relation between traditional DSGD and M-DSGD, so that M-DSGD can be transformed into its corresponding DSGD. From this we obtain the convergence rate of M-DSGD with momentum for both convex and non-convex problems. Furthermore, we combine M-DSGD with stagewise learning, in which the learning rate is constant within each stage and is decreased from stage to stage rather than at every iteration. Using the transformation equation, we derive the convergence rate of stagewise M-DSGD, which bridges the gap between theory and practice.
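To make the memory mechanism concrete, the following is a minimal single-process sketch, not the paper's algorithm. It assumes top-k sparsification as the compressor (the abstract only says each worker sends a sparse vector), uses vanilla SGD rather than momentum, and simulates the workers in a loop. All names (`top_k`, `stagewise_lr`, `m_dsgd`) and default values are hypothetical choices made for illustration.

```python
import numpy as np

def top_k(v, k):
    """Keep the k largest-magnitude entries of v and zero the rest (assumed compressor)."""
    out = np.zeros_like(v)
    idx = np.argpartition(np.abs(v), -k)[-k:]
    out[idx] = v[idx]
    return out

def stagewise_lr(t, base_lr=0.1, stage_len=100, decay=0.5):
    """Learning rate that is constant within a stage and shrinks between stages."""
    return base_lr * decay ** (t // stage_len)

def m_dsgd(grad_fn, w0, num_workers=4, k=2, steps=300, seed=0):
    """Simulate memory-based distributed SGD with vanilla SGD updates.

    grad_fn(w, rng) returns one stochastic gradient at w (hypothetical interface).
    Returns the final parameters and the per-worker memory vectors.
    """
    rng = np.random.default_rng(seed)
    w = np.asarray(w0, dtype=float).copy()
    memory = [np.zeros_like(w) for _ in range(num_workers)]
    for t in range(steps):
        lr = stagewise_lr(t)
        sparse_sum = np.zeros_like(w)
        for i in range(num_workers):
            g = grad_fn(w, rng)
            acc = memory[i] + lr * g   # add the new scaled gradient to the residual
            msg = top_k(acc, k)        # sparse vector the worker would communicate
            memory[i] = acc - msg      # keep whatever was not sent
            sparse_sum += msg
        w -= sparse_sum / num_workers  # apply the averaged sparse updates
    return w, memory
```

In this sketch, the parameters minus the average memory follow a plain SGD recursion driven by the same gradients, which illustrates the kind of relation between DSGD and M-DSGD that the abstract describes; the paper's actual transformation equation, in particular for momentum SGD, may take a different form.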
