On Enhancing The Performance Of Nearest Neighbour Classifiers Using Hassanat Distance Metric


Jan 04, 2015

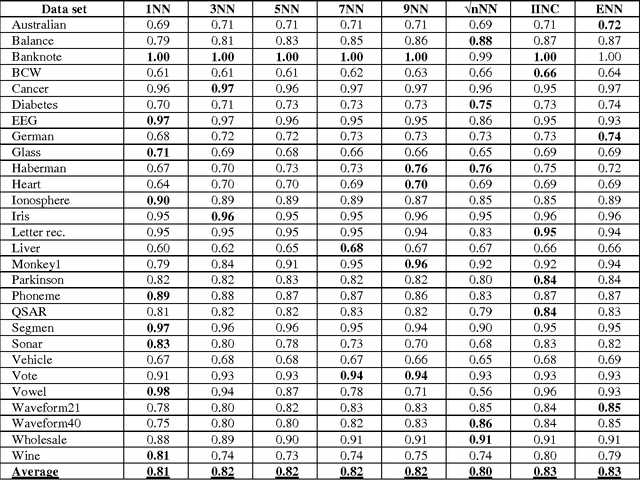

This work shows how the Hassanat distance metric enhances the performance of nearest neighbour classifiers. The results demonstrate the superiority of this distance metric over the traditional and most widely used distances, such as the Manhattan and Euclidean distances. Moreover, we proved that the Hassanat distance metric is invariant to data scale, noise and outliers. Both ENN and IINC performed very well with the distance investigated: their accuracy increased significantly, by 3.3% and 3.1% respectively, with no significant advantage of ENN over IINC in terms of accuracy. Our results also indicate that no single algorithm solves all real-life problems perfectly, which is consistent with the no-free-lunch theorem.
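The abstract does not state the formula, so the following is only a minimal sketch. It assumes the definition of the Hassanat distance as it is commonly given in the literature: for each dimension, the contribution is 1 - (1 + min) / (1 + max) when the smaller value is non-negative, and both values are shifted by |min| first when it is negative. The function names `hassanat_distance` and `nearest_neighbour_label` are hypothetical, and the plain 1-NN lookup stands in for the ENN and IINC classifiers, which are not reproduced here.

```python
def hassanat_distance(a, b):
    """Sum of per-dimension Hassanat terms (assumed definition, see lead-in)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    total = 0.0
    for x, y in zip(a, b):
        lo, hi = min(x, y), max(x, y)
        if lo >= 0:
            total += 1.0 - (1.0 + lo) / (1.0 + hi)
        else:
            # Shift both values by |lo| so the ratio stays well defined.
            shift = abs(lo)
            total += 1.0 - (1.0 + lo + shift) / (1.0 + hi + shift)
    return total


def nearest_neighbour_label(query, points, labels):
    """Plain 1-NN: return the label of the closest training point."""
    best = min(range(len(points)),
               key=lambda i: hassanat_distance(query, points[i]))
    return labels[best]


if __name__ == "__main__":
    train = [[1.1, 0.9], [5.0, 5.0]]
    print(nearest_neighbour_label([1.0, 1.0], train, ["a", "b"]))
```

Under this definition each dimension contributes a value in [0, 1), so a single extreme feature value cannot dominate the total. This is one way to read the claimed robustness to scale and outliers.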
