On catastrophic forgetting and mode collapse in Generative Adversarial Networks

Paper and Code

Sep 12, 2018

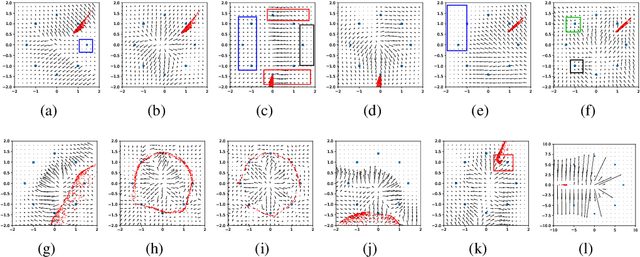

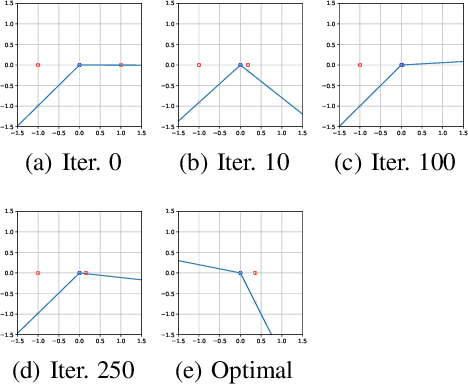

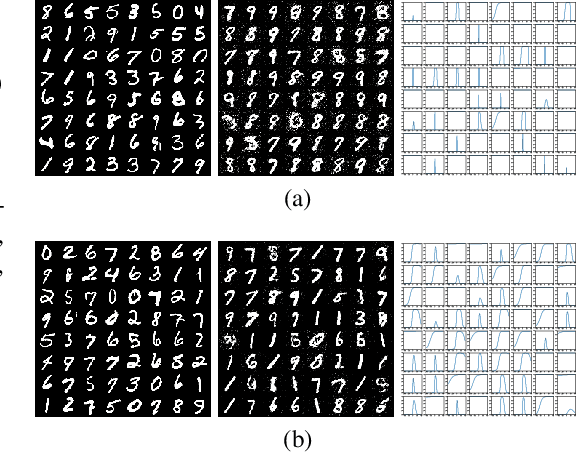

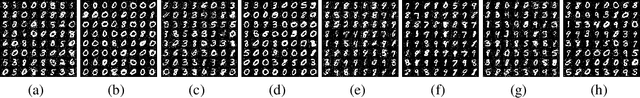

Generative Adversarial Networks (GANs) are among the most prominent tools for learning complicated distributions. However, problems such as mode collapse and catastrophic forgetting prevent GANs from learning the target distribution. These problems are usually studied independently of each other. In this paper, we show that both problems are present in GANs and that their combined effect makes GAN training unstable. We also show that methods such as gradient penalties and momentum-based optimizers can improve the stability of GANs by effectively preventing these problems from occurring. Finally, we study a mechanism by which mode collapse occurs and propagates in feedforward neural networks.
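The abstract does not say which gradient penalty is meant, so the following is only a minimal sketch of the general idea: penalize the squared norm of the discriminator's gradient with respect to its input. The tiny one-hidden-layer discriminator, the names `discriminator`, `input_gradient`, and `gradient_penalty`, and the weight of 10.0 are all illustrative assumptions, not the paper's setup.

```python
import numpy as np

def discriminator(x, W, b, v):
    """Hypothetical toy discriminator: D(x) = v . tanh(W x + b), one score per row of x."""
    return np.tanh(x @ W.T + b) @ v

def input_gradient(x, W, b, v):
    """Analytic gradient of D with respect to each input row of x."""
    h = np.tanh(x @ W.T + b)            # hidden activations, shape (n, hidden)
    return ((1.0 - h ** 2) * v) @ W     # chain rule through tanh, shape (n, dim)

def gradient_penalty(x, W, b, v, weight=10.0):
    """Assumed penalty form: weight * mean squared norm of dD/dx over the batch."""
    g = input_gradient(x, W, b, v)
    return weight * np.mean(np.sum(g ** 2, axis=1))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 3))         # batch of 4 samples in 3 dimensions
    W = rng.normal(size=(5, 3))
    b = rng.normal(size=5)
    v = rng.normal(size=5)
    print("penalty:", gradient_penalty(x, W, b, v))
```

In a training loop, a term like this would be added to the discriminator loss so that the discriminator cannot become arbitrarily steep around the samples it is evaluated on. Where the penalty is evaluated (real samples, generated samples, or interpolations) varies between methods and is left open here.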

* Workshop on Theoretical Foundation and Applications of Deep Generative Models, Stockholm, Sweden, 2018
