On Bochner's and Polya's Characterizations of Positive-Definite Kernels and the Respective Random Feature Maps

Oct 27, 2016

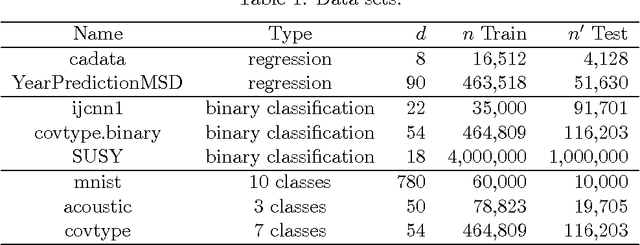

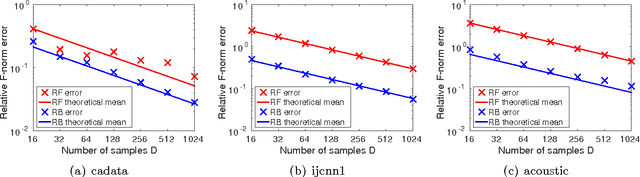

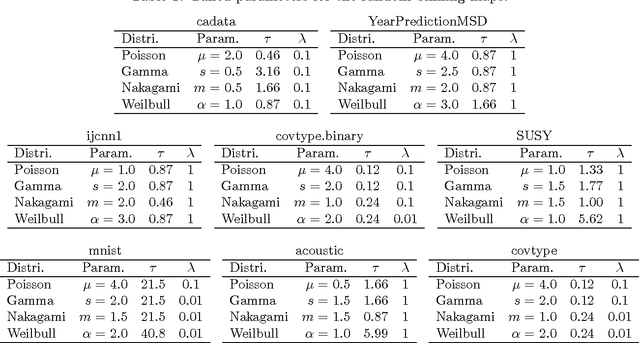

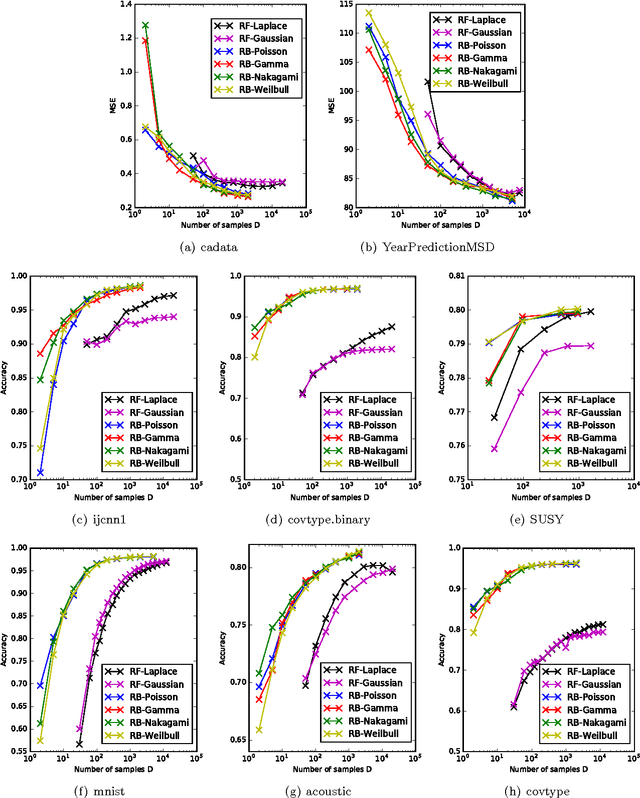

Positive-definite kernel functions are fundamental building blocks of kernel methods and Gaussian processes. A well-known construction of such functions comes from Bochner's characterization, which connects a positive-definite function with a probability distribution. Another construction, which appears to have attracted less attention, is Polya's criterion, which characterizes a subset of these functions. In this paper, we study the latter characterization and derive a number of novel kernels that were previously little known. In the context of large-scale kernel machines, Rahimi and Recht (2007) proposed a random feature map (random Fourier) that approximates a kernel function by independently sampling from the probability distribution in Bochner's characterization. The authors also suggested another feature map (random binning), which, although they did not state so explicitly, comes from Polya's characterization. We show that, with the same number of random samples, the random binning map yields a Euclidean inner product closer to the kernel than the random Fourier map does. The superiority of the random binning map is confirmed empirically through regression and classification in the reproducing kernel Hilbert space.
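To make the two feature maps mentioned in the abstract concrete, the following is a minimal sketch in Python with NumPy, not the paper's implementation. It assumes a Gaussian kernel for the random Fourier map and a Laplace kernel for the random binning map, following the standard constructions of Rahimi and Recht (2007). All function names, the bandwidth parameter `sigma`, and the sample points are illustrative assumptions.

```python
import numpy as np


def gaussian_kernel(x, y, sigma=1.0):
    """Exact Gaussian kernel exp(-||x - y||_2^2 / (2 sigma^2))."""
    return np.exp(-np.sum((x - y) ** 2) / (2.0 * sigma ** 2))


def random_fourier_features(X, n_features, sigma=1.0, rng=None):
    """Random Fourier map (assumed Gaussian kernel).

    Frequencies are drawn from the spectral distribution given by
    Bochner's characterization, here N(0, I / sigma^2).
    """
    rng = np.random.default_rng(rng)
    d = X.shape[1]
    W = rng.normal(scale=1.0 / sigma, size=(d, n_features))
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
    return np.sqrt(2.0 / n_features) * np.cos(X @ W + b)


def laplace_kernel(x, y, sigma=1.0):
    """Exact Laplace kernel exp(-||x - y||_1 / sigma)."""
    return np.exp(-np.sum(np.abs(x - y)) / sigma)


def random_binning_estimate(x, y, n_grids, sigma=1.0, rng=None):
    """Random binning estimate of the Laplace kernel (assumed kernel).

    Each grid has, per dimension, a pitch drawn from Gamma(2, sigma)
    and a uniform shift within one pitch. The estimate is the fraction
    of grids in which x and y fall into the same bin in every dimension.
    """
    rng = np.random.default_rng(rng)
    d = x.shape[0]
    delta = rng.gamma(shape=2.0, scale=sigma, size=(n_grids, d))
    shift = rng.uniform(0.0, 1.0, size=(n_grids, d)) * delta
    bins_x = np.floor((x - shift) / delta)
    bins_y = np.floor((y - shift) / delta)
    return np.mean(np.all(bins_x == bins_y, axis=1))


# Illustrative points (hypothetical data, chosen only for the demo).
x = np.array([0.3, -0.2])
y = np.array([0.1, 0.4])

Z = random_fourier_features(np.vstack([x, y]), n_features=20000, rng=0)
print("Gaussian exact:", gaussian_kernel(x, y), "Fourier approx:", Z[0] @ Z[1])
print("Laplace exact:", laplace_kernel(x, y),
      "binning approx:", random_binning_estimate(x, y, n_grids=20000, rng=0))
```

Because the two maps above target different kernels, this sketch only illustrates how each approximation is built; it does not reproduce the paper's comparison of approximation error at equal sample counts.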
