On Analyzing Annotation Consistency in Online Abusive Behavior Datasets

Jun 24, 2020

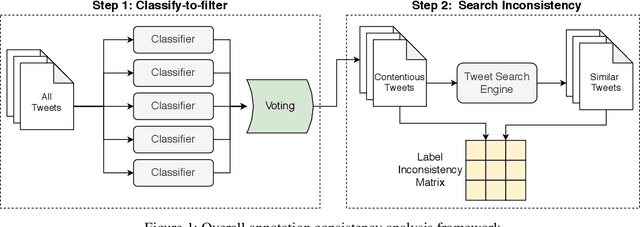

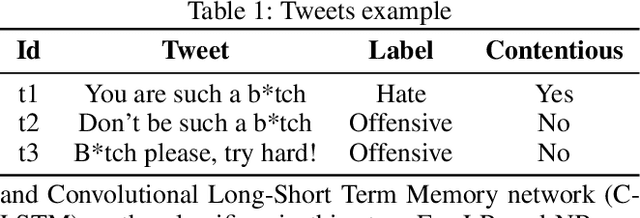

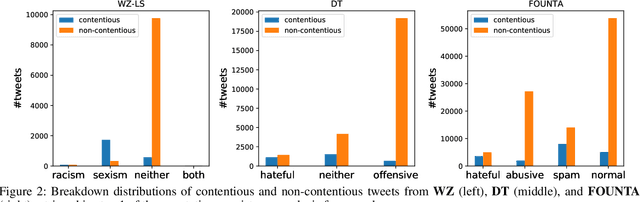

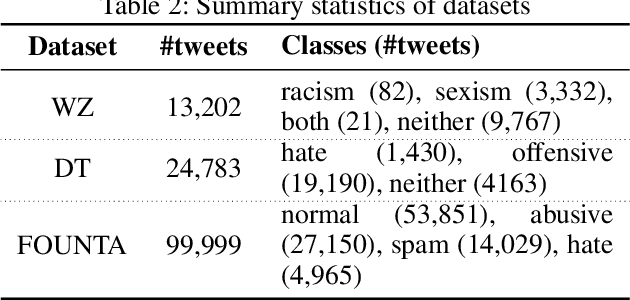

Online abusive behavior is an important issue that undermines the cohesiveness of online social communities and even raises public safety concerns. Motivated by this growing problem, researchers have proposed, collected, and annotated datasets of online abusive content. These datasets play a critical role in facilitating research on online hate speech and abusive behavior. However, annotating such datasets is difficult: the true label of a given text is often contentious, because the semantic differences between labels can be blurred (e.g., abusive and hate) and the judgment is often subjective. In this study, we propose an analytical framework for studying annotation consistency in online hate and abusive content datasets. We apply the framework to evaluate the annotation consistency of three popular datasets that are widely used in studies of online hate speech and abusive behavior. We find that the existing datasets still contain a substantial amount of annotation inconsistency, particularly when the labels are semantically similar.
