OL4EL: Online Learning for Edge-cloud Collaborative Learning on Heterogeneous Edges with Resource Constraints


Apr 23, 2020

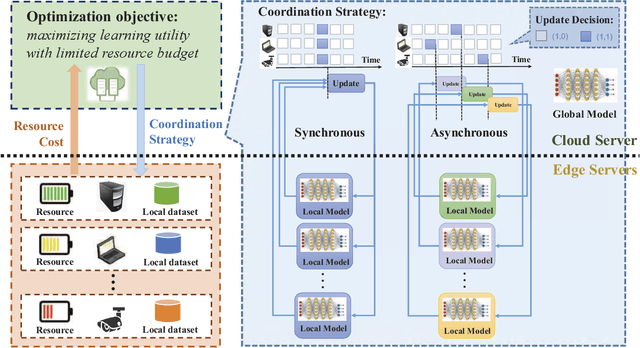

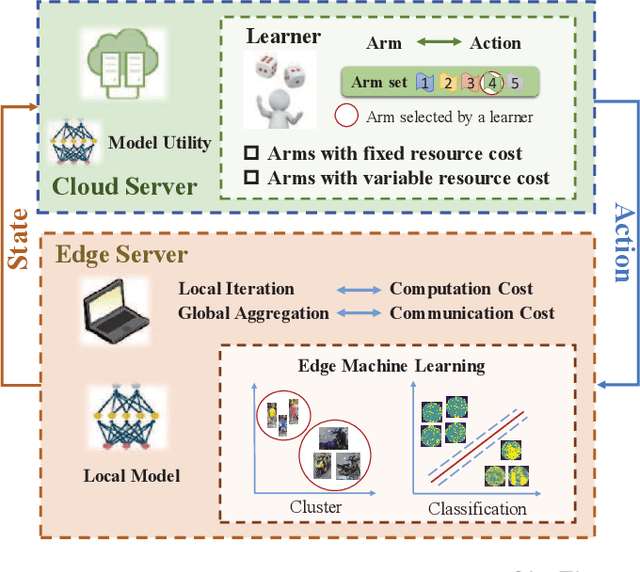

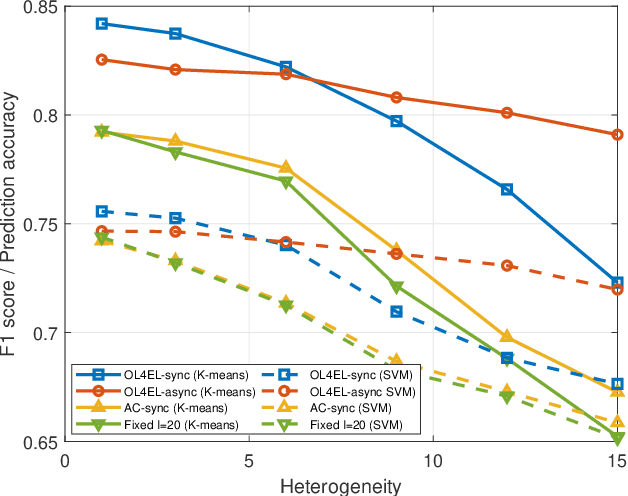

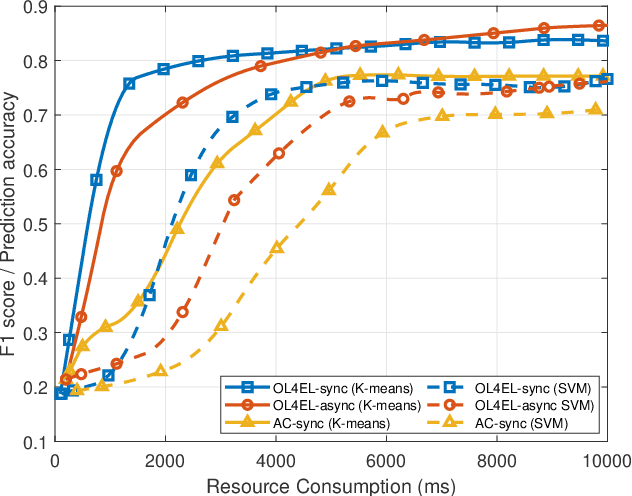

Distributed machine learning (ML) at the network edge is a promising paradigm that can preserve both network bandwidth and the privacy of data providers. However, the heterogeneous and limited computation and communication resources of edge servers (or edges) pose great challenges to distributed ML and give rise to a new paradigm, Edge Learning (i.e., edge-cloud collaborative machine learning). In this article, we propose a novel 'learning to learn' framework for effective Edge Learning (EL) on heterogeneous edges with resource constraints. We first model the dynamic determination of the collaboration strategy (i.e., the allocation of local iterations at edge servers and global aggregations on the cloud during the collaborative learning process) as an online optimization problem that trades off EL performance against the resource consumption of edge servers. Then, we propose an Online Learning for EL (OL4EL) framework based on the budget-limited multi-armed bandit model. OL4EL supports both synchronous and asynchronous learning patterns, and can be used for both supervised and unsupervised learning tasks. To evaluate the performance of OL4EL, we conducted both real-world testbed experiments and extensive simulations based on Docker containers, with Support Vector Machine and K-means as use cases. Experimental results demonstrate that OL4EL significantly outperforms state-of-the-art EL and other collaborative ML approaches in terms of the trade-off between learning performance and resource consumption.
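To make the budget-limited multi-armed bandit idea concrete, the sketch below treats each candidate number of local iterations between two global aggregations as an arm, and keeps pulling arms until the remaining resource budget cannot pay for any of them. It is an illustration only, not the OL4EL algorithm: the selection rule (a generic UCB estimate divided by cost), the function `run_budgeted_bandit`, the linear cost model, and the synthetic reward are all assumptions introduced here and do not come from the paper.

```python
import math
import random


def run_budgeted_bandit(arms, cost_of, reward_of, budget, seed=0):
    """Pull arms until the remaining budget cannot pay for any arm.

    arms:       candidate arm labels (here: local iterations per global aggregation).
    cost_of:    arm -> resource cost of one pull (hypothetical cost model).
    reward_of:  (arm, rng) -> noisy reward in [0, 1] (hypothetical utility signal).
    Returns the sequence of chosen arms and the unspent budget.
    """
    rng = random.Random(seed)
    pulls = {a: 0 for a in arms}    # number of times each arm was chosen
    mean = {a: 0.0 for a in arms}   # running mean reward per arm
    history = []
    remaining = budget
    t = 0
    while True:
        affordable = [a for a in arms if cost_of(a) <= remaining]
        if not affordable:
            break
        t += 1
        untried = [a for a in affordable if pulls[a] == 0]
        if untried:
            # Try every affordable arm once before trusting the estimates.
            arm = untried[0]
        else:
            # Assumed heuristic: optimistic reward estimate per unit of cost.
            def score(a):
                bonus = math.sqrt(2.0 * math.log(t) / pulls[a])
                return (mean[a] + bonus) / cost_of(a)

            arm = max(affordable, key=score)
        reward = reward_of(arm, rng)
        pulls[arm] += 1
        mean[arm] += (reward - mean[arm]) / pulls[arm]  # incremental mean update
        remaining -= cost_of(arm)
        history.append(arm)
    return history, remaining


# Hypothetical setup: cost = compute per local iteration + one communication round.
arms = [1, 2, 4, 8]
cost = lambda tau: 1.0 * tau + 3.0
reward = lambda tau, rng: min(1.0, max(0.0, 0.15 * math.sqrt(tau) + rng.gauss(0, 0.05)))

history, left = run_budgeted_bandit(arms, cost, reward, budget=200.0)
print("rounds:", len(history), "unspent budget:", left)
```

Dividing the optimistic estimate by the cost is one simple way to express the performance-versus-resource trade-off in a single score; a real edge deployment would replace the synthetic reward with a measured quantity such as the improvement in the learning objective per round.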
