Object Localization and Motion Transfer learning with Capsules

Paper and Code

May 20, 2018

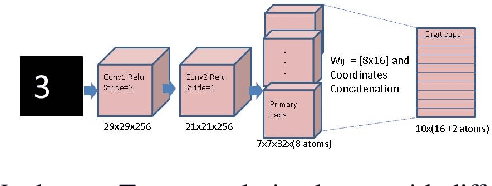

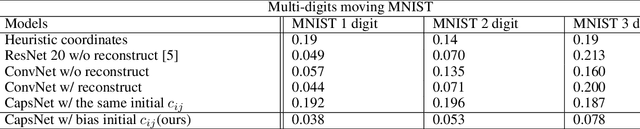

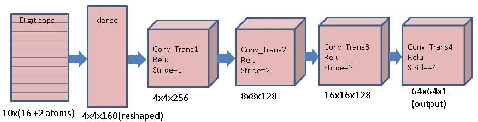

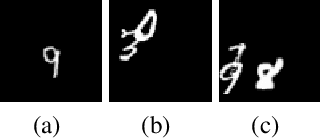

Inspired by CapsNet's routing-by-agreement mechanism, with its ability to learn object properties, and by center-of-mass calculations from physics, we propose a CapsNet architecture with object coordinate atoms and an LSTM network for evaluation. The first is based on CapsNet but uses a new routing algorithm to find the objects' approximate positions in the image coordinate system, and the second is a parameterized affine transformation network that can predict future positions from past positions by learning the translation transformation from 2D object coordinates generated from the first network. We demonstrate the learned translation transformation is transferable to another dataset without the need to train the transformation network again. Only the CapsNet needs training on the new dataset. As a result, our work shows that object recognition and motion prediction can be separated, and that motion prediction can be transferred to another dataset with different object types.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge