Object Detection with Transformers: A Review


Jun 27, 2023

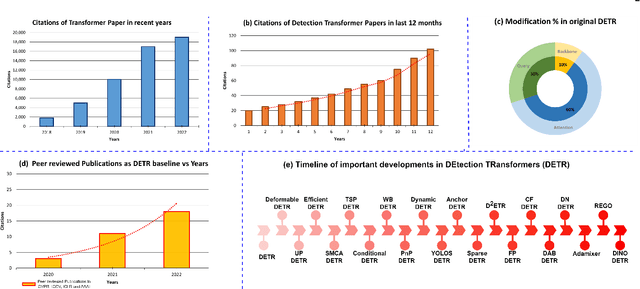

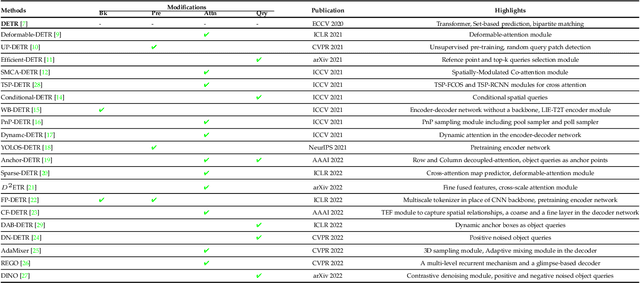

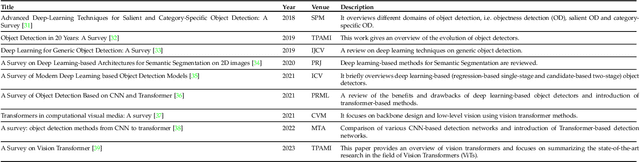

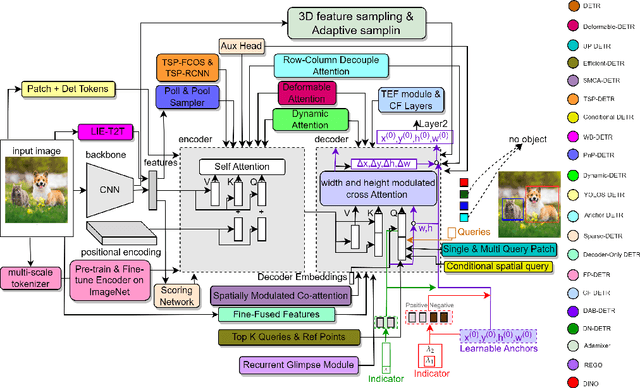

The astounding performance of transformers in natural language processing (NLP) has motivated researchers to explore their use in computer vision tasks. As in other computer vision tasks, transformers have been brought to object detection: the DEtection TRansformer (DETR) treats detection as a set prediction problem, removing the need for proposal generation and post-processing steps. It is a state-of-the-art (SOTA) method for object detection, particularly in scenarios where the number of objects in an image is relatively small. Despite its success, DETR suffers from slow training convergence and reduced performance on small objects. Many improvements have therefore been proposed to address these issues, leading to substantial refinement of DETR. Since 2020, transformer-based object detection has attracted increasing interest and demonstrated impressive performance. Although numerous surveys cover transformers in vision in general, a review of advances in 2D object detection using transformers is still missing. This paper gives a detailed review of twenty-one papers on recent developments in DETR. We begin with the basic modules of transformers, such as self-attention, object queries, and input feature encoding. Then we cover the latest advancements in DETR, including backbone modification, query design, and attention refinement. We also compare all detection transformers in terms of performance and network design. We hope this study will increase researchers' interest in solving the existing challenges in applying transformers to object detection. Researchers can follow newer improvements in detection transformers at: https://github.com/mindgarage-shan/trans_object_detection_survey
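To make the "set prediction" framing concrete, the following is a minimal sketch of one-to-one matching between a fixed set of predictions and the ground-truth objects. It is an illustration under stated assumptions, not the implementation from DETR or from any surveyed paper: DETR solves this assignment with the Hungarian algorithm and a cost that also includes a generalized IoU term, whereas this sketch brute-forces the assignment and uses a simplified cost (negative class probability plus L1 box distance). The names `match_cost`, `match_predictions`, and the toy data are hypothetical.

```python
from itertools import permutations


def l1(box_a, box_b):
    """L1 distance between two boxes given as (cx, cy, w, h)."""
    return sum(abs(a - b) for a, b in zip(box_a, box_b))


def match_cost(pred, target):
    """Simplified matching cost (assumption: no GIoU term, equal weights)."""
    return -pred["probs"][target["label"]] + l1(pred["box"], target["box"])


def match_predictions(preds, targets):
    """Find the lowest-cost one-to-one assignment of predictions to targets.

    Returns (assignment, total_cost), where assignment[j] is the index of the
    prediction matched to target j. Brute force is used only for clarity; it
    is exponential in the number of targets.
    """
    assert len(preds) >= len(targets)
    best, best_cost = None, float("inf")
    for perm in permutations(range(len(preds)), len(targets)):
        cost = sum(match_cost(preds[i], targets[j]) for j, i in enumerate(perm))
        if cost < best_cost:
            best, best_cost = perm, cost
    return list(best), best_cost


# Toy data: three predictions (one per hypothetical object query), two objects.
preds = [
    {"probs": [0.1, 0.9], "box": (0.5, 0.5, 0.2, 0.2)},
    {"probs": [0.8, 0.2], "box": (0.2, 0.3, 0.1, 0.1)},
    {"probs": [0.5, 0.5], "box": (0.9, 0.9, 0.1, 0.1)},
]
targets = [
    {"label": 0, "box": (0.2, 0.3, 0.1, 0.1)},
    {"label": 1, "box": (0.5, 0.5, 0.2, 0.2)},
]

assignment, total_cost = match_predictions(preds, targets)
print(assignment)  # index of the prediction matched to each target
```

Because each ground-truth object is matched to exactly one prediction, unmatched predictions can be trained to output a "no object" class, which is why this formulation does not need a duplicate-removal step after prediction.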
