Noisy Tensor Completion via Low-rank Tensor Ring


Mar 14, 2022

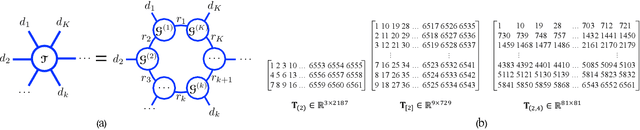

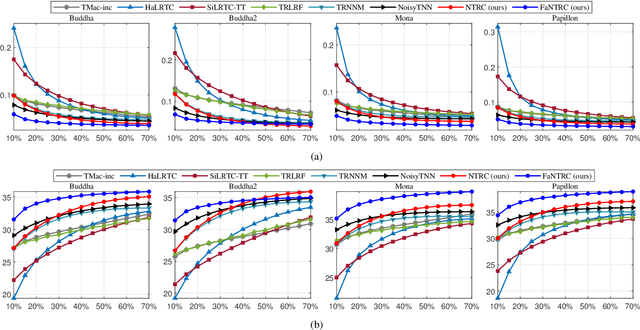

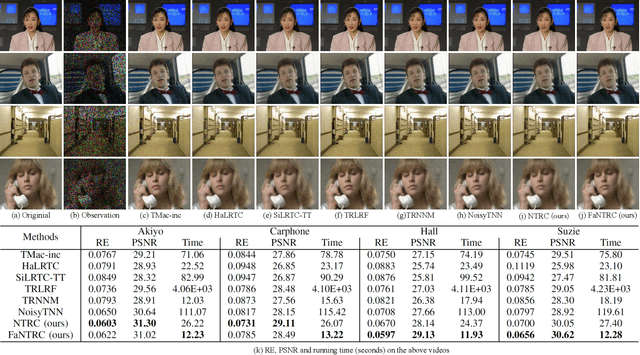

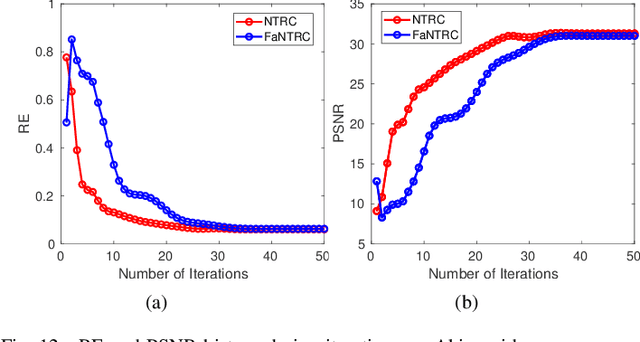

Tensor completion is a fundamental tool for incomplete data analysis, where the goal is to predict missing entries from partial observations. However, existing methods often assume, explicitly or implicitly, that the observed entries are noise-free in order to guarantee exact recovery of the missing entries, which is quite restrictive in practice. To remedy this drawback, this paper proposes a novel noisy tensor completion model that compensates for the inability of existing works to handle the degradation of high-order, noisy observations. Specifically, the tensor ring nuclear norm (TRNN) is adopted to regularize the underlying tensor, and a least-squares estimator is adopted to fit the observed entries. In addition, a non-asymptotic upper bound on the estimation error is provided to characterize the statistical performance of the proposed estimator. Two efficient algorithms with convergence guarantees are developed to solve the optimization problem. One of them is specially tailored to large-scale tensors: within a heterogeneous tensor decomposition framework, it replaces the minimization of the TRNN of the original tensor with the equivalent minimization of the TRNN of a much smaller tensor. Experimental results on both synthetic and real-world data demonstrate the effectiveness and efficiency of the proposed model in recovering noisy incomplete tensor data compared with state-of-the-art tensor completion models.
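To make the model described above more concrete, the following is a minimal NumPy sketch of a TRNN-regularized least-squares objective. It is not the paper's implementation. It assumes one common definition of the TRNN from the tensor ring literature (a weighted sum of nuclear norms of circular unfoldings), and the function names `circular_unfold`, `trnn`, and `objective`, the unfolding step `d`, the uniform weights, and the trade-off parameter `lam` are all hypothetical choices made for illustration.

```python
import numpy as np


def circular_unfold(x, k, d):
    """Matricize x with d consecutive modes (starting at mode k, wrapping
    around circularly) as rows and the remaining modes as columns.
    Assumed convention; papers differ in which modes they place on the rows."""
    n = x.ndim
    row_modes = [(k + i) % n for i in range(d)]
    col_modes = [(k + d + i) % n for i in range(n - d)]
    rows = int(np.prod([x.shape[m] for m in row_modes]))
    return np.transpose(x, row_modes + col_modes).reshape(rows, -1)


def trnn(x, d=None, weights=None):
    """Weighted sum of nuclear norms over all circular unfoldings of x.
    Defaults (d = order // 2, uniform weights) are assumptions."""
    n = x.ndim
    d = max(1, n // 2) if d is None else d
    weights = np.full(n, 1.0 / n) if weights is None else weights
    return sum(
        w * np.linalg.norm(circular_unfold(x, k, d), ord="nuc")
        for k, w in enumerate(weights)
    )


def objective(x, y, mask, lam):
    """Least-squares fit on observed entries plus lam * TRNN(x).
    mask is 1 where an entry of y was observed and 0 elsewhere."""
    residual = mask * (x - y)
    return 0.5 * np.sum(residual ** 2) + lam * trnn(x)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    truth = rng.standard_normal((4, 5, 6, 7))
    mask = (rng.random(truth.shape) < 0.3).astype(float)  # about 30% observed
    noisy = truth + 0.1 * rng.standard_normal(truth.shape)
    print(objective(np.zeros_like(truth), noisy, mask, lam=0.5))
```

The sketch only evaluates the objective. Minimizing it requires an optimization scheme such as the two algorithms the paper develops, which are not reproduced here.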
