Noise2Stack: Improving Image Restoration by Learning from Volumetric Data

Paper and Code

Nov 10, 2020

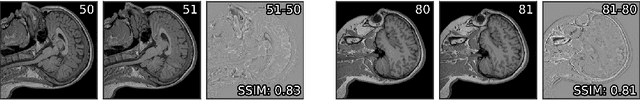

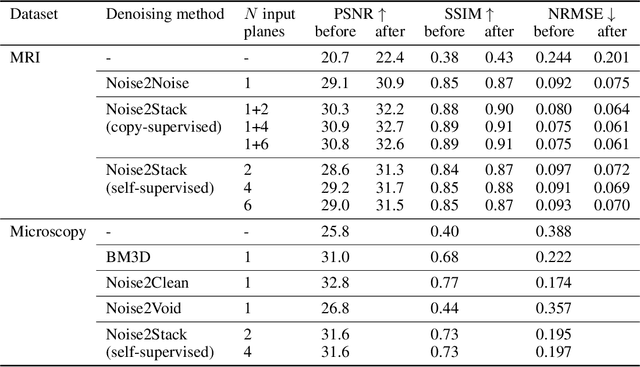

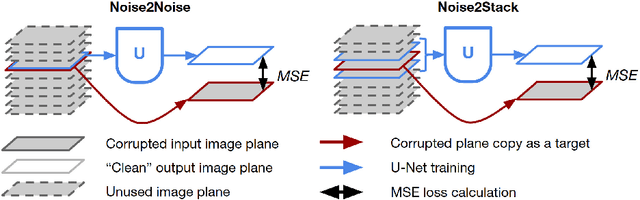

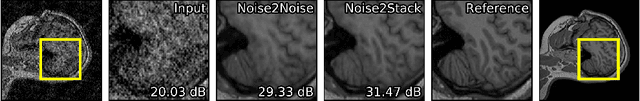

Biomedical images are noisy. The imaging equipment itself has physical limitations, and the consequent experimental trade-offs between signal-to-noise ratio, acquisition speed, and imaging depth exacerbate the problem. Denoising is, therefore, an essential part of any image processing pipeline, and convolutional neural networks are currently the method of choice for this task. One popular approach, Noise2Noise, does not require clean ground truth, and instead, uses a second noisy copy as a training target. Self-supervised methods, like Noise2Self and Noise2Void, relax data requirements by learning the signal without an explicit target but are limited by the lack of information in a single image. Here, we introduce Noise2Stack, an extension of the Noise2Noise method to image stacks that takes advantage of a shared signal between spatially neighboring planes. Our experiments on magnetic resonance brain scans and newly acquired multiplane microscopy data show that learning only from image neighbors in a stack is sufficient to outperform Noise2Noise and Noise2Void and close the gap to supervised denoising methods. Our findings point towards low-cost, high-reward improvement in the denoising pipeline of multiplane biomedical images. As a part of this work, we release a microscopy dataset to establish a benchmark for the multiplane image denoising.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge