Noise-response Analysis for Rapid Detection of Backdoors in Deep Neural Networks


Jul 31, 2020

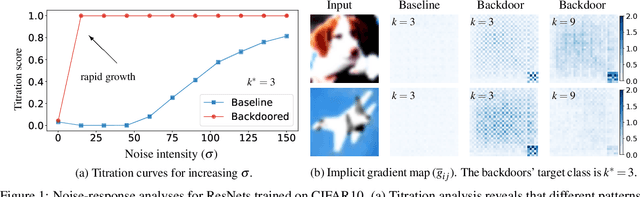

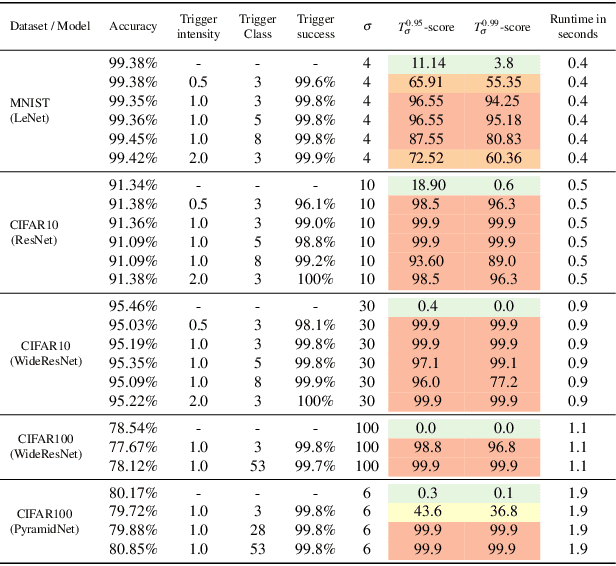

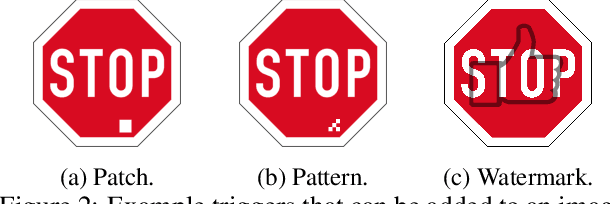

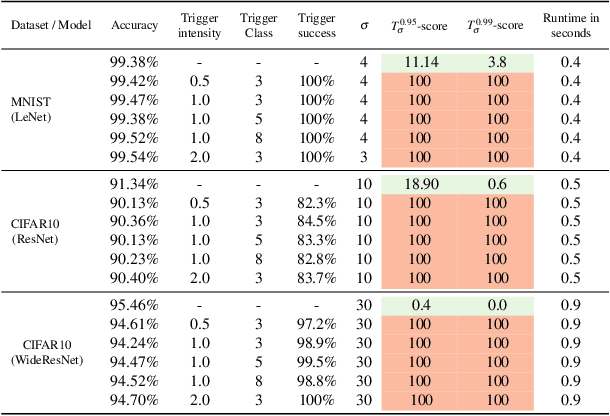

The pervasiveness of deep neural networks (DNNs) in technology, matched with the ubiquity of cloud-based training and transfer learning, is giving rise to a new frontier for cybersecurity in which "structural malware" manifests as compromised weights and activation pathways in insecure DNNs. In particular, DNNs can be designed to have backdoors, with which an adversary can easily and reliably fool a classifier by adding a pattern of pixels, called a trigger, to any image. Since DNNs are black-box algorithms, it is generally difficult to detect a backdoor or any other type of structural malware. To efficiently provide a reliable signal for the presence or absence of backdoors, we propose a rapid feature-generation step in which we study how DNNs respond to noise-infused images as the noise intensity is varied. This results in titration curves, which are a type of "fingerprinting" for DNNs. We find that DNNs with backdoors are more sensitive to input noise and respond in a characteristic way that reveals the backdoor and where it leads (i.e., its target). Our empirical results demonstrate that we can accurately detect a backdoor with high confidence orders of magnitude faster than existing approaches (i.e., seconds versus hours). Our method also yields a titration score that can automate the detection of compromised DNNs, whereas existing backdoor-detection strategies are not automated.
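The noise-response idea described above can be illustrated with a short sketch. It is not the paper's implementation: the abstract does not define the titration curve precisely, so the code assumes Gaussian noise and measures, for each noise level, the fraction of noisy inputs that the classifier labels with confidence above a threshold. The names `titration_curve` and `toy_model`, the threshold of 0.95, and the random linear classifier standing in for a DNN are all hypothetical.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the class axis.
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def titration_curve(predict_proba, images, sigmas, threshold=0.95, seed=0):
    """Fraction of noisy inputs classified with confidence above `threshold`,
    evaluated at each noise level in `sigmas` (an assumed definition)."""
    rng = np.random.default_rng(seed)
    curve = []
    for sigma in sigmas:
        # Infuse the images with zero-mean Gaussian noise of intensity sigma.
        noisy = images + sigma * rng.standard_normal(images.shape)
        probs = predict_proba(noisy)
        # Record how often the top-class probability exceeds the threshold.
        curve.append(float((probs.max(axis=1) > threshold).mean()))
    return np.array(curve)

# Toy stand-in for a trained DNN: a random linear softmax classifier.
rng = np.random.default_rng(1)
W = rng.standard_normal((64, 10))

def toy_model(x):
    return softmax(x.reshape(len(x), -1) @ W)

images = rng.random((200, 8, 8))            # 200 synthetic 8x8 "images"
sigmas = np.array([0.0, 0.5, 1.0, 2.0, 4.0])
curve = titration_curve(toy_model, images, sigmas)
print(dict(zip(sigmas.tolist(), curve.tolist())))
```

In practice `toy_model` would be replaced by the network under inspection, and the curve's shape (and the class that the noisy inputs collapse onto) would be compared against curves from models known to be clean.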
