No-regret incentive-compatible online learning under exact truthfulness with non-myopic experts


Feb 17, 2025

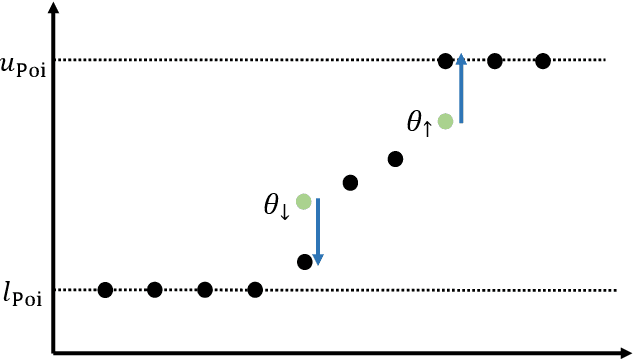

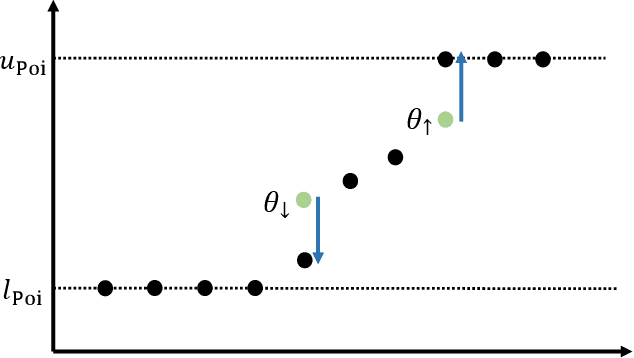

We study an online forecasting setting in which, over $T$ rounds, $N$ strategic experts each report a forecast to a mechanism, the mechanism selects one forecast, and then the outcome is revealed. In each round, every expert has a belief about the outcome, but chooses its report to maximize the total number of times it is selected. The goal of the mechanism is to obtain low belief regret: the difference between its cumulative loss (based on its selected forecasts) and the cumulative loss of the best expert in hindsight (as measured by the experts' beliefs). We consider exactly truthful mechanisms for non-myopic experts, meaning that truthfully reporting its belief strictly maximizes the expert's subjective probability of being selected in any future round. Even in the full-information setting, obtaining a no-regret exactly truthful mechanism has been an open problem. We develop the first such mechanism via an online extension of the Independent-Event Lotteries Forecasting Competition Mechanism (I-ELF). By viewing this online I-ELF as a novel instance of Follow the Perturbed Leader (FPL) with noise based on random walks with loss-dependent perturbations, we obtain $\tilde{O}(\sqrt{T N})$ regret. Our results rely on new tail bounds for Poisson binomial random variables that we develop. We extend our results to the bandit setting, where we give an exactly truthful mechanism obtaining $\tilde{O}(T^{2/3} N^{1/3})$ regret; this is the first no-regret result even among approximately truthful mechanisms.
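To make the full-information setting concrete, here is a minimal Python sketch of a lottery-based selection rule in the spirit described above. It is an illustration under stated assumptions, not the paper's mechanism: the abstract does not give the exact lottery probabilities, tie-breaking rule, or loss function. The sketch assumes binary outcomes, quadratic loss, that each expert independently wins a point in a round with probability one minus its loss, and that the mechanism selects the expert with the most points so far, breaking ties uniformly at random. The function names `quadratic_loss`, `select_expert`, and `run_lottery_mechanism` are hypothetical. The sketch also scores the reports themselves, whereas belief regret in the abstract is measured against the experts' beliefs.

```python
import random


def quadratic_loss(report: float, outcome: int) -> float:
    """Quadratic (Brier) loss of a probability report on a binary outcome, in [0, 1]."""
    return (report - outcome) ** 2


def select_expert(points: list[int], rng: random.Random) -> int:
    """Return the index of an expert with the most points (uniform tie-break)."""
    best = max(points)
    leaders = [i for i, p in enumerate(points) if p == best]
    return rng.choice(leaders)


def run_lottery_mechanism(reports, outcomes, seed=0):
    """Simulate the assumed lottery rule.

    reports[t][i] is expert i's reported probability in round t;
    outcomes[t] is the realized binary outcome in {0, 1}.
    Returns (list of selected experts per round, mechanism's cumulative loss).
    """
    rng = random.Random(seed)
    n_experts = len(reports[0])
    points = [0] * n_experts
    chosen, total_loss = [], 0.0
    for round_reports, outcome in zip(reports, outcomes):
        # Select before the outcome is revealed, using past points only.
        i = select_expert(points, rng)
        chosen.append(i)
        total_loss += quadratic_loss(round_reports[i], outcome)
        # Assumption: each expert wins a point independently with
        # probability 1 - loss, so point totals are sums of independent
        # Bernoulli draws (Poisson binomial random variables).
        for j, report in enumerate(round_reports):
            if rng.random() < 1.0 - quadratic_loss(report, outcome):
                points[j] += 1
    return chosen, total_loss
```

Under these assumptions, each expert's point total performs a random walk whose step probabilities depend on its losses, which is one way to read the "loss-dependent perturbations" in the FPL view. An expert that always reports the realized outcome gains a point every round, so after the first round it is always selected over an expert that always reports the opposite.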
