NMR: Neural Manifold Representation for Autonomous Driving

May 11, 2022

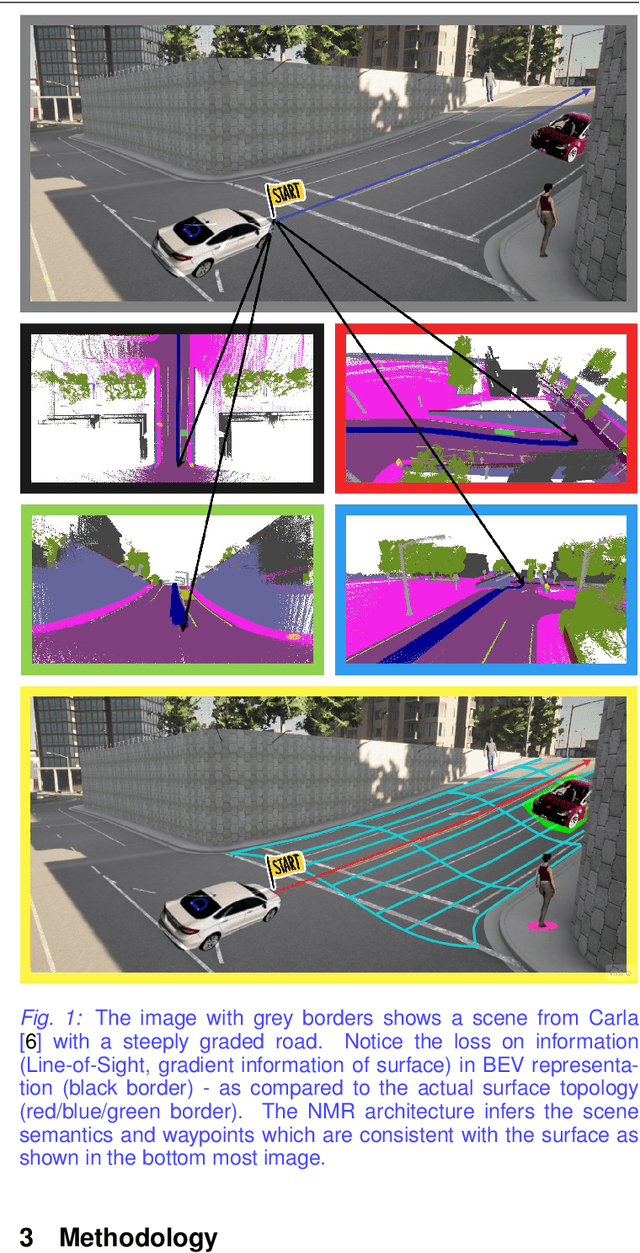

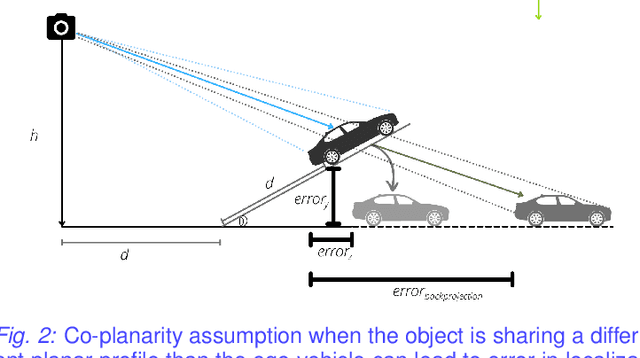

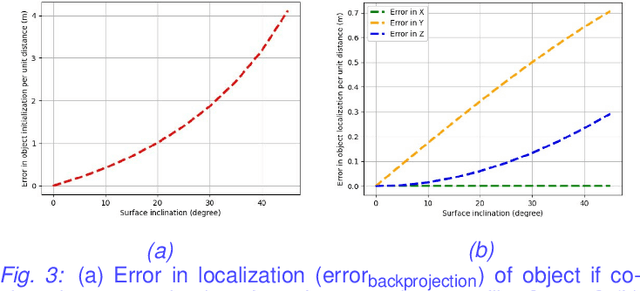

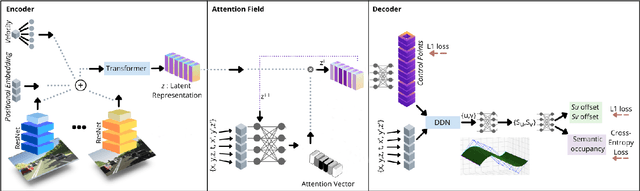

Autonomous driving requires efficient reasoning about the spatio-temporal nature of scene semantics. Recent approaches have successfully merged the traditional modular architecture of an autonomous driving stack, comprising perception, prediction, and planning, into an end-to-end trainable system. Such a system calls for a shared latent space embedding with an interpretable, trainable intermediate projected representation. One such successfully deployed representation is the Bird's-Eye View (BEV) representation of the scene in the ego-frame. However, a fundamental assumption for an undistorted BEV is the local coplanarity of the world around the ego-vehicle. This assumption is highly restrictive, as roads, in general, do have gradients. The resulting distortions make path planning inefficient and incorrect. To overcome this limitation, we propose Neural Manifold Representation (NMR), a representation for the task of autonomous driving that learns to infer semantics and predict way-points on a manifold over a finite horizon, centered on the ego-vehicle. We do this using an iterative attention mechanism applied to a latent high-dimensional embedding of surround monocular images and partial ego-vehicle state. This representation helps generate motion and behavior plans that are consistent with, and cognizant of, the surface geometry. To generate the surface manifold with minimal computational overhead, we propose a sampling algorithm based on an edge-adaptive coverage loss of the BEV occupancy grid and an associated guidance flow field. We aim to test the efficacy of our approach on CARLA and SYNTHIA-SF.
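To make the coplanarity limitation concrete, the following is a minimal illustrative sketch; it is not taken from the paper. It assumes a toy 2D side-view model: a pinhole camera at a fixed height above level ground, and a road ahead that rises or falls with a constant grade starting at the point directly below the camera. The function name `flat_ground_bev_distance` and its parameters are hypothetical. It computes where a flat-ground projection would place a road point in the BEV, compared with the point's true horizontal distance.

```python
import math


def flat_ground_bev_distance(true_distance_m, grade, camera_height_m=1.5):
    """Distance a flat-ground projection assigns to a point on a graded road.

    Toy 2D model (assumption, not the paper's method): the camera sits at
    (0, camera_height_m); the road point sits at
    (true_distance_m, true_distance_m * grade), where grade is rise over run
    (0.05 means a 5% uphill). The camera ray through the point is intersected
    with the assumed ground plane z = 0.
    """
    elevation_m = true_distance_m * grade
    if elevation_m >= camera_height_m:
        # The ray is horizontal or points upward, so it never meets z = 0
        # in front of the camera.
        return math.inf
    # Similar triangles: the ray drops (camera_height_m - elevation_m) over
    # true_distance_m, and must drop camera_height_m in total to reach z = 0.
    return true_distance_m * camera_height_m / (camera_height_m - elevation_m)


if __name__ == "__main__":
    for grade in (-0.05, 0.0, 0.05):
        projected = flat_ground_bev_distance(20.0, grade)
        print(f"grade {grade:+.2f}: true 20.0 m, flat-ground BEV {projected:.1f} m")
```

Under these assumptions, a point 20 m ahead on a 5% uphill is placed at 60 m by the flat-ground projection, and the same point on a 5% downhill is placed at 12 m. Way-points planned on such a grid would inherit this distortion.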
