New Insights into Multi-Calibration


Jan 21, 2023

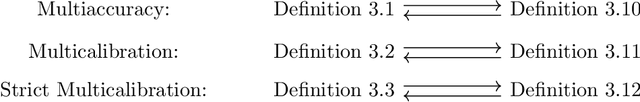

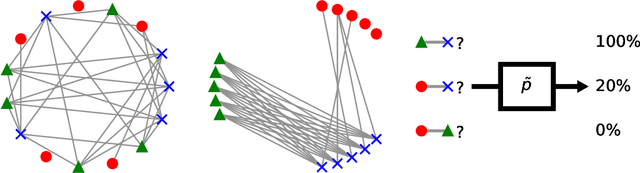

We identify a novel connection between the recent literature on multi-group fairness for prediction algorithms and well-established notions of graph regularity from extremal graph theory. We frame our investigation using new, statistical-distance-based variants of multi-calibration that are closely related to the concept of outcome indistinguishability. Adopting this perspective leads us naturally not only to our graph-theoretic results, but also to new multi-calibration algorithms with improved complexity in certain parameter regimes, and to a generalization of a state-of-the-art result on omniprediction. Along the way, we also unify several prior algorithms for achieving multi-group fairness, as well as their analyses, through the lens of no-regret learning.
