Neuro-Optimization: Learning Objective Functions Using Neural Networks

May 24, 2019

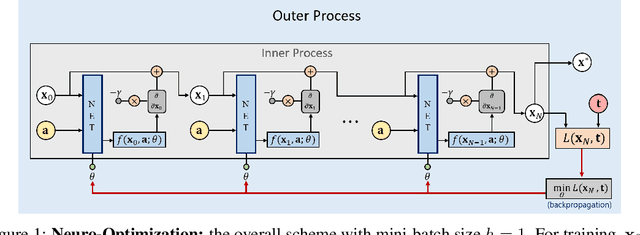

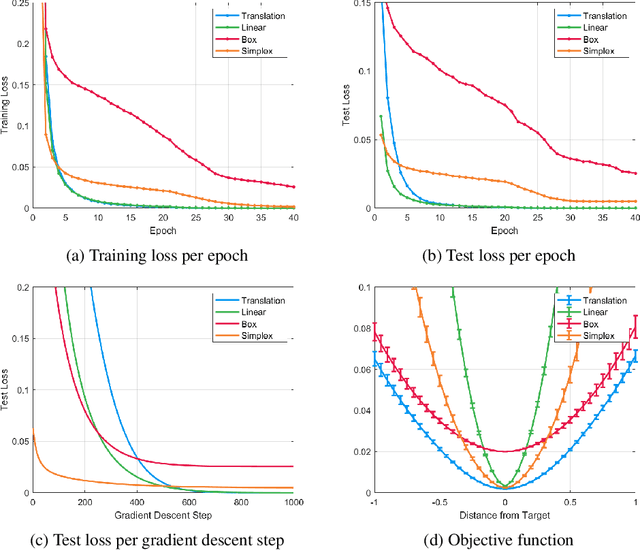

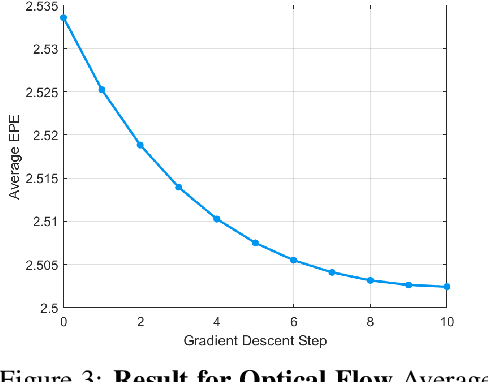

Mathematical optimization is widely used across research fields, and a carefully designed objective function lets it solve many problems effectively. However, objective functions are usually hand-crafted, and designing a good one can be challenging. In this paper, we propose a novel framework that learns the objective function with a neural network. The basic idea is to treat the neural network as the objective function and its input as the optimization variable. Learning the objective function from training data involves two processes. In the inner process, the optimization variable (the network input) is optimized to minimize the objective function (the network output) while the network weights are held fixed. In the outer process, the weights are optimized based on how close the final solution of the inner process is to the desired solution. After the objective function is learned, the solution for the test set is obtained in the same manner as the inner process. Experiments on toy examples and a computer vision task, optical flow, demonstrate the potential and applicability of our approach.
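The inner and outer processes can be illustrated with a minimal sketch in plain Python. Everything in it is an assumption made for illustration and is not taken from the paper: a one-weight quadratic `f(x; w, d) = 0.5 * (x - w * d)**2` stands in for the neural network, `d` is a hypothetical observed input that conditions the objective, the inner process is plain gradient descent, and the outer gradient is obtained by differentiating by hand through the unrolled inner steps. The abstract does not state how the outer gradient is actually computed.

```python
# Toy stand-in for the learned objective (assumption, not the paper's network):
#   f(x; w, d) = 0.5 * (x - w * d)**2
# x is the optimization variable, w the single learnable weight,
# d a hypothetical observed input.

def objective_grad_x(x, w, d):
    """Gradient of the toy objective with respect to x."""
    return x - w * d

def inner_process(d, w, x0=0.0, step=0.2, n_steps=50):
    """Minimize the objective over x with w fixed (gradient descent).

    Also tracks dx/dw through the unrolled updates so that the outer
    process can update w.
    """
    x, dx_dw = x0, 0.0
    for _ in range(n_steps):
        # x <- x - step * (x - w * d)
        x = x - step * objective_grad_x(x, w, d)
        # Derivative of the update rule above with respect to w.
        dx_dw = (1.0 - step) * dx_dw + step * d
    return x, dx_dw

def outer_process(inputs, targets, w=0.0, lr=0.1, n_epochs=100):
    """Fit w so the inner-process solutions match the desired solutions."""
    for _ in range(n_epochs):
        grad = 0.0
        for d, t in zip(inputs, targets):
            x_final, dx_dw = inner_process(d, w)
            # Chain rule on the squared error (x_final - t)**2.
            grad += 2.0 * (x_final - t) * dx_dw
        w -= lr * grad / len(inputs)
    return w

# Training pairs where the desired solution is twice the input.
learned_w = outer_process([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])

# Test time: run only the inner process with the learned weight.
solution, _ = inner_process(5.0, learned_w)
```

In this toy setting the inner process on the unseen input `5.0` lands close to `10.0`, which mirrors the abstract's description of solving test cases by rerunning the inner process with learned weights. A real network would typically rely on an automatic differentiation library rather than the hand-derived `dx_dw` recursion used here.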
