Neural Networks for Scalar Input and Functional Output

Paper and Code

Aug 10, 2022

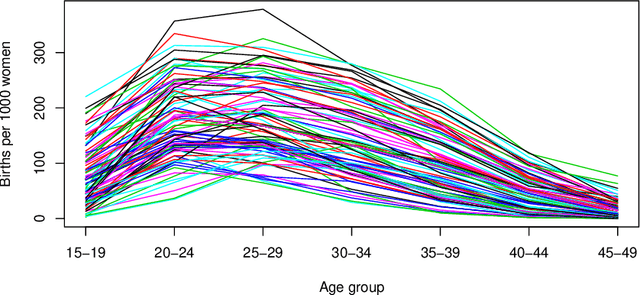

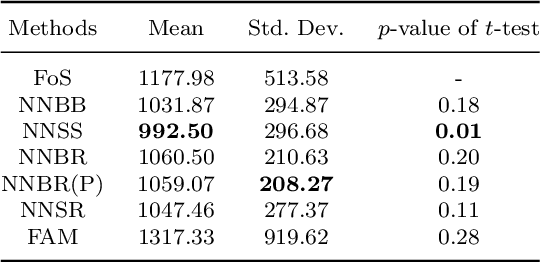

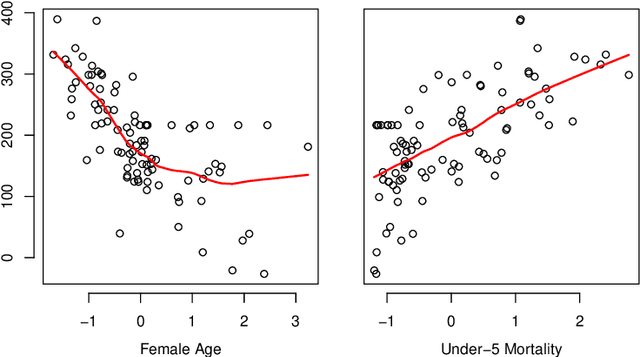

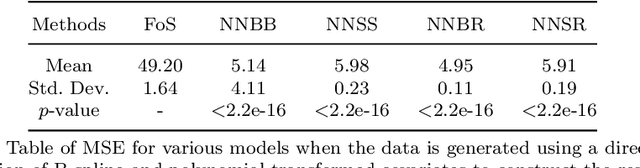

Regressing a functional response on a set of scalar predictors can be challenging, especially when there are many predictors, when the predictors have interaction effects, or when the relationship between the predictors and the response is nonlinear. In this work, we propose a solution to this problem: a feed-forward neural network (NN) designed to predict a functional response from scalar inputs. First, we transform the functional response into a finite-dimensional representation, and then we construct an NN that outputs this representation. We propose several objective functions to train the NN. The proposed models are suited to both regularly and irregularly spaced data and provide multiple ways to apply a roughness penalty that controls the smoothness of the predicted curve. The difficulty in implementing both features lies in defining objective functions that can be back-propagated. In our experiments, we demonstrate that our model outperforms the conventional function-on-scalar regression model in multiple scenarios while scaling better computationally with the dimension of the predictors.
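To make the idea concrete, the following is a minimal sketch of one way such a model could be set up. It is not the paper's implementation. The abstract does not specify the basis, the architecture, or the exact objectives, so everything here is an assumption chosen for illustration: a Fourier basis on [0, 1] as the finite-dimensional representation, a one-hidden-layer network, and the hypothetical helper names `fourier_basis`, `init_params`, `predict_coefs`, `roughness`, and `objective`. The network outputs basis coefficients, the fit term is evaluated at each curve's own observation times (so irregular spacing is handled naturally), and the roughness penalty is the integrated squared second derivative, which has a closed form for this basis. Only the forward computation is shown, in NumPy. Every operation is differentiable, so an automatic-differentiation framework could supply the gradients for training.

```python
import numpy as np


def fourier_basis(t, n_basis):
    """Evaluate an orthonormal Fourier basis on [0, 1] at time points t.

    Column 0 is the constant 1; columns 1, 2 are sqrt(2)*sin and sqrt(2)*cos
    at frequency 1; columns 3, 4 at frequency 2; and so on.
    Returns an array of shape (len(t), n_basis).
    """
    t = np.asarray(t, dtype=float)[:, None]
    j = np.arange(n_basis)
    k = (j + 1) // 2                      # frequency of each basis function
    angle = 2.0 * np.pi * k * t
    basis = np.where(j % 2 == 1,
                     np.sqrt(2.0) * np.sin(angle),
                     np.sqrt(2.0) * np.cos(angle))
    basis[:, 0] = 1.0                     # constant term
    return basis


def init_params(n_in, n_hidden, n_basis, rng):
    """Small random weights for a one-hidden-layer network (illustrative)."""
    return {
        "W1": rng.normal(0.0, 0.1, (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.1, (n_hidden, n_basis)),
        "b2": np.zeros(n_basis),
    }


def predict_coefs(params, x):
    """Map scalar predictors x (n, n_in) to basis coefficients (n, n_basis)."""
    hidden = np.tanh(x @ params["W1"] + params["b1"])
    return hidden @ params["W2"] + params["b2"]


def roughness(coefs):
    """Integral over [0, 1] of the squared second derivative of each curve.

    For the orthonormal Fourier basis above this equals
    sum_j coefs_j**2 * (2*pi*k_j)**4, where k_j is the frequency of term j.
    """
    k = (np.arange(coefs.shape[-1]) + 1) // 2
    return np.sum(coefs ** 2 * (2.0 * np.pi * k) ** 4, axis=-1)


def objective(params, x, t_list, y_list, lam):
    """Mean squared error at each curve's own time points plus lam * roughness."""
    coefs = predict_coefs(params, x)
    n_basis = coefs.shape[1]
    fit = 0.0
    for c, t, y in zip(coefs, t_list, y_list):
        y_hat = fourier_basis(t, n_basis) @ c   # predicted curve at observed times
        fit += np.mean((np.asarray(y, dtype=float) - y_hat) ** 2)
    penalty = roughness(coefs).sum()
    return (fit + lam * penalty) / len(t_list)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    params = init_params(n_in=3, n_hidden=8, n_basis=5, rng=rng)
    x = rng.normal(size=(4, 3))
    # Each curve is observed at a different number of irregular time points.
    t_list = [np.sort(rng.uniform(size=m)) for m in (5, 7, 3, 9)]
    y_list = [np.sin(2.0 * np.pi * t) for t in t_list]
    print(objective(params, x, t_list, y_list, lam=1e-4))
```

Because the penalty in this sketch acts directly on the coefficients, it needs no evaluation grid. With a basis that lacks such a closed form, the same quantity could instead be approximated numerically on a fine grid.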
