Neural Entity Linking: A Survey of Models based on Deep Learning

May 31, 2020

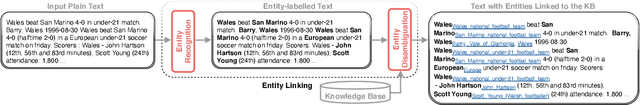

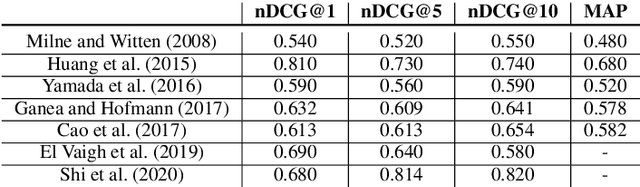

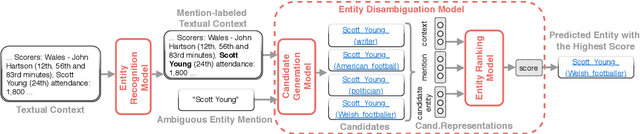

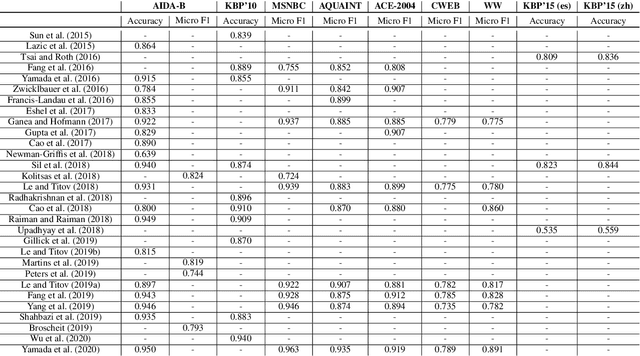

In this survey, we provide a comprehensive description of recent neural entity linking (EL) systems. We distill a generic architecture common to these systems, comprising candidate generation, entity ranking, and unlinkable mention prediction components. For each component, we summarize the prominent methods and models, including approaches to mention encoding based on the self-attention architecture. Since many EL models use entity embeddings to improve their generalization capabilities, we provide an overview of widely used entity embedding techniques. We group the EL approaches by several common research directions: joint entity recognition and linking, models for global EL, domain-independent techniques including zero-shot and distant supervision methods, and cross-lingual approaches. We also discuss the novel application of EL to enhancing word representation models like BERT. We systematize the critical design features of EL systems and provide their reported evaluation results.
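To make the three-component architecture named in the abstract concrete, here is a minimal sketch, not an implementation of any surveyed system. Everything in it is an illustrative assumption: the alias table, the two-dimensional entity embeddings, the dot-product scorer, and the `nil_threshold` value are hypothetical, and the mention encoder is replaced by a context vector supplied by the caller.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical alias table: lowercased surface form -> candidate entity ids.
ALIAS_TABLE: Dict[str, List[str]] = {
    "paris": ["Paris_(France)", "Paris_Hilton"],
    "jaguar": ["Jaguar_(animal)", "Jaguar_Cars"],
}

# Hypothetical 2-d entity embeddings (real systems learn these vectors).
ENTITY_EMB: Dict[str, List[float]] = {
    "Paris_(France)": [1.0, 0.0],
    "Paris_Hilton": [0.0, 1.0],
    "Jaguar_(animal)": [0.9, 0.1],
    "Jaguar_Cars": [0.1, 0.9],
}


def generate_candidates(mention: str) -> List[str]:
    """Candidate generation: look the surface form up in the alias table."""
    return ALIAS_TABLE.get(mention.lower(), [])


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def rank_entities(context_vec: List[float],
                  candidates: List[str]) -> List[Tuple[str, float]]:
    """Entity ranking: score each candidate against the encoded context."""
    scored = [(e, dot(context_vec, ENTITY_EMB[e])) for e in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def link(mention: str, context_vec: List[float],
         nil_threshold: float = 0.5) -> Optional[str]:
    """Unlinkable mention prediction: return None (NIL) when there is no
    candidate or the best score falls below the assumed threshold."""
    ranked = rank_entities(context_vec, generate_candidates(mention))
    if not ranked or ranked[0][1] < nil_threshold:
        return None
    return ranked[0][0]
```

For example, `link("Paris", [0.9, 0.2])` selects `Paris_(France)` in this toy setup, while a mention absent from the alias table, such as `link("Berlin", [1.0, 0.0])`, is predicted as unlinkable. Thresholding the top score is only one simple way to predict NIL, chosen here for brevity.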
