Neural Class Expression Synthesis


Nov 18, 2021

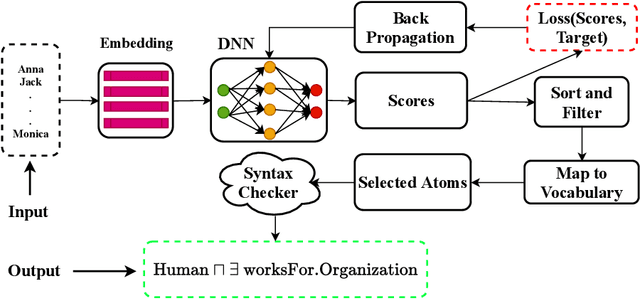

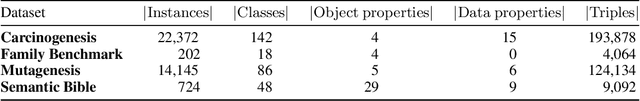

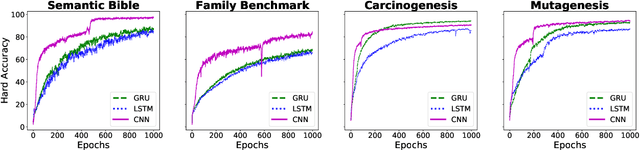

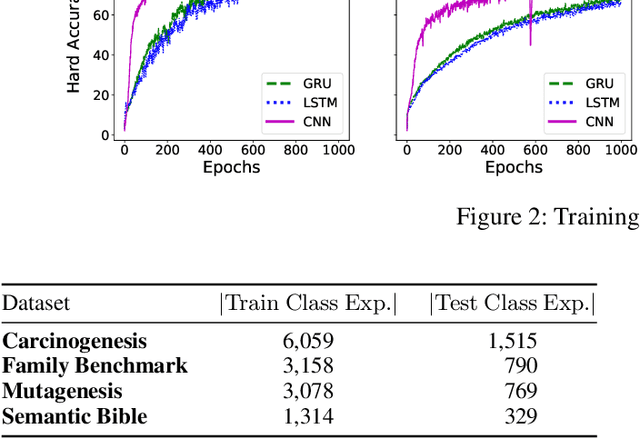

Class expression learning is a branch of explainable supervised machine learning of increasing importance. Most existing approaches to class expression learning in description logics are either search-based or hard-rule-based. In particular, approaches based on refinement operators suffer from scalability issues because they rely on heuristic functions to explore a large search space for each learning problem. We propose a new family of approaches, which we dub synthesis approaches. Instances of this family compute class expressions directly from the examples provided. Consequently, they are subject neither to the runtime limitations of search-based approaches nor to the lack of flexibility of hard-rule-based approaches. We study three instances of this novel family of approaches that use lightweight neural network architectures to synthesize class expressions from sets of positive examples. The results of their evaluation on four benchmark datasets suggest that they can effectively synthesize high-quality class expressions with respect to the input examples in under a second on average. Moreover, a comparison with the state-of-the-art approaches CELOE and ELTL suggests that we achieve significantly better F-measures on large ontologies. For reproducibility purposes, we provide our implementation as well as pre-trained models in the public GitHub repository at https://github.com/ConceptLengthLearner/NCES
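To make the quality measure mentioned above concrete, the following is a minimal sketch of how an F-measure could be computed for a candidate class expression on a learning problem. It assumes the standard F1 definition (harmonic mean of precision and recall) and that the set of individuals covered by the expression has already been retrieved by a reasoner. The function name `f_measure` and the toy individuals are hypothetical and are not taken from the NCES repository; the paper's exact evaluation protocol may differ.

```python
def f_measure(covered, positives, negatives):
    """F1 score of a candidate class expression (illustrative sketch).

    covered:   individuals that are instances of the candidate expression
               (assumed to come from a reasoner; not computed here)
    positives: positive examples of the learning problem
    negatives: negative examples of the learning problem
    """
    covered, positives, negatives = set(covered), set(positives), set(negatives)
    tp = len(covered & positives)   # positives the expression covers
    fp = len(covered & negatives)   # negatives the expression wrongly covers
    fn = len(positives - covered)   # positives the expression misses
    if tp == 0:
        return 0.0                  # avoids division by zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy learning problem with hypothetical individuals.
positives = {"anna", "bob", "carl"}
negatives = {"dora", "emil"}
covered = {"anna", "bob", "dora"}   # instances of some candidate expression

score = f_measure(covered, positives, negatives)
print(f"F1 = {score:.3f}")
```

In this toy case the expression covers two of the three positives and one negative, so precision and recall are both 2/3.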
