Network Representation Learning for Link Prediction: Are we improving upon simple heuristics?


Mar 06, 2020

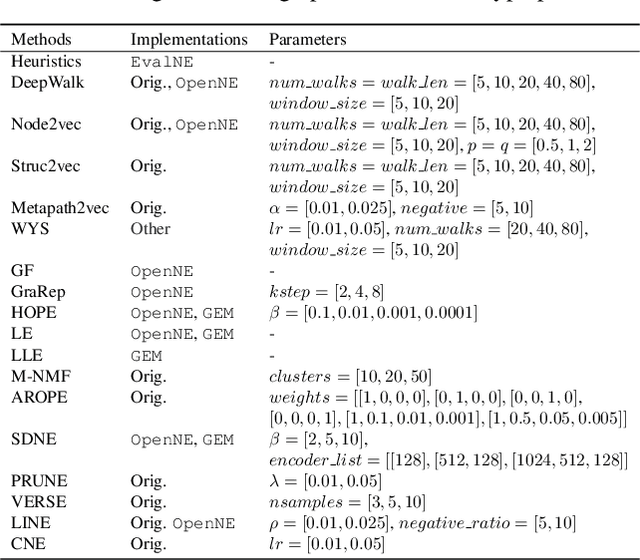

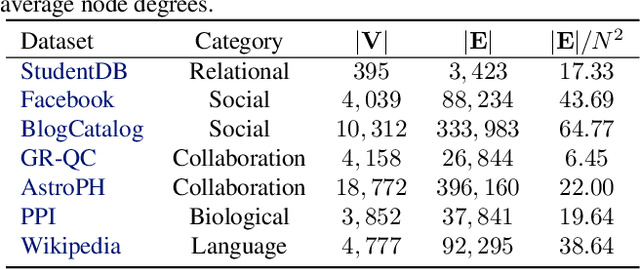

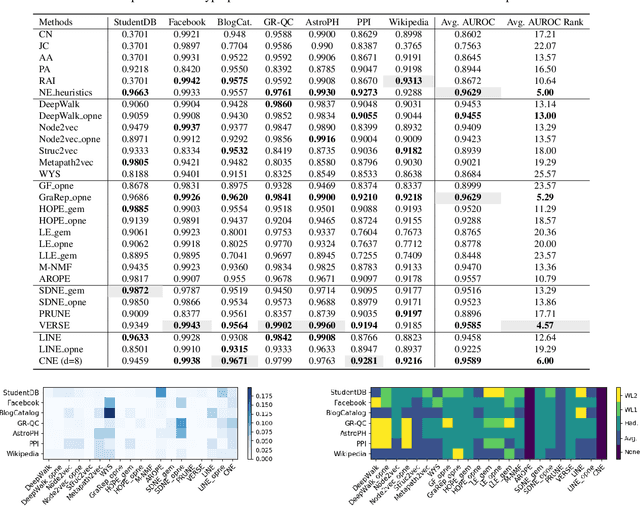

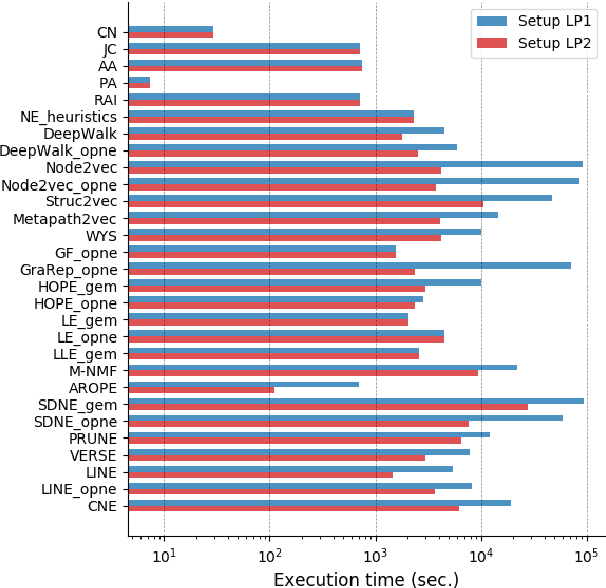

Network representation learning has become an active research area in recent years, with many new methods showcasing their performance on downstream prediction tasks such as link prediction. Despite the community's efforts to ensure reproducibility by providing method implementations, important issues remain. The complexity of evaluation pipelines and the abundance of design choices have made it difficult to quantify progress in the field and to identify the state of the art. In this work, we analyse 17 network embedding methods on 7 real-world datasets and find, using a consistent evaluation pipeline, only marginal progress in recent years. Moreover, many embedding methods are outperformed by simple heuristics. Finally, we discuss how standardized evaluation tools can remedy this situation and boost progress in the field.
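To make "simple heuristics" concrete, here is a minimal sketch of two neighbourhood-based link prediction scores, common neighbours and the Jaccard coefficient. The abstract does not name the heuristics the paper evaluates, so the choice of these two scores is an assumption. The toy graph and the helper names (`build_adjacency`, `rank_candidates`) are hypothetical and not taken from the paper's code.

```python
from itertools import combinations


def build_adjacency(edges):
    """Build an undirected adjacency map {node: set(neighbours)}."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def common_neighbours(adj, u, v):
    """Score a node pair by the number of neighbours it shares."""
    return len(adj[u] & adj[v])


def jaccard(adj, u, v):
    """Score a node pair by shared neighbours over the union of neighbours."""
    union = adj[u] | adj[v]
    return len(adj[u] & adj[v]) / len(union) if union else 0.0


def rank_candidates(adj, score):
    """Rank all currently unconnected node pairs, highest score first."""
    candidates = [
        (u, v) for u, v in combinations(sorted(adj), 2) if v not in adj[u]
    ]
    return sorted(candidates, key=lambda pair: score(adj, *pair), reverse=True)


# Toy graph (illustrative only, not one of the paper's datasets).
edges = [("a", "b"), ("a", "c"), ("b", "c"), ("b", "d"), ("c", "d"), ("d", "e")]
adj = build_adjacency(edges)

for name, score in [("common neighbours", common_neighbours), ("jaccard", jaccard)]:
    best = rank_candidates(adj, score)[0]
    print(name, best, score(adj, *best))
```

Scores like these need no training and have no hyperparameters, which is why they make a natural baseline when comparing embedding methods under one evaluation pipeline.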
