Multitask Learning for Fundamental Frequency Estimation in Music

Paper and Code

Sep 02, 2018

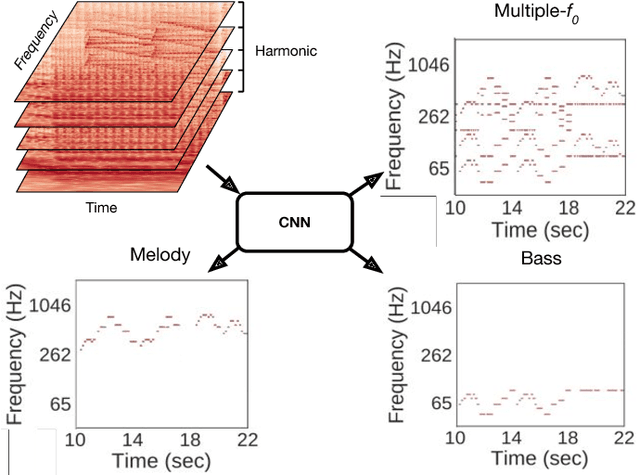

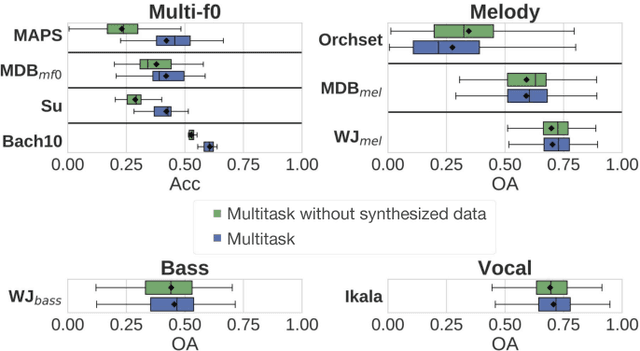

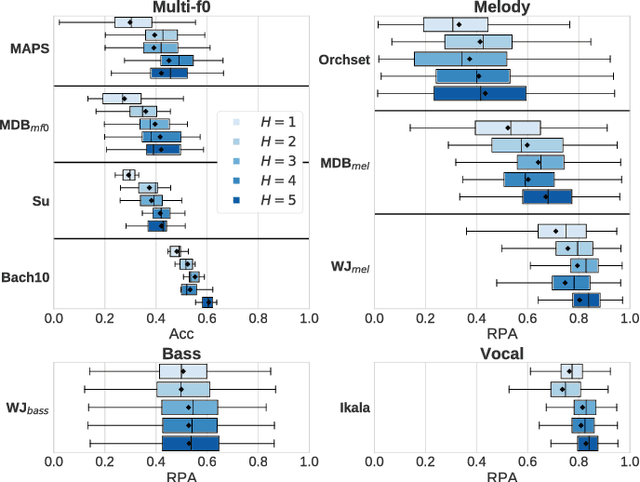

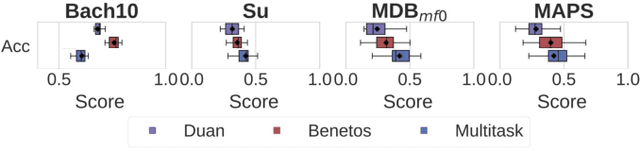

Fundamental frequency (f0) estimation from polyphonic music includes the tasks of multiple-f0, melody, vocal, and bass line estimation. Historically these problems have been approached separately, and only recently, using learning-based approaches. We present a multitask deep learning architecture that jointly estimates outputs for various tasks including multiple-f0, melody, vocal and bass line estimation, and is trained using a large, semi-automatically annotated dataset. We show that the multitask model outperforms its single-task counterparts, and explore the effect of various design decisions in our approach, and show that it performs better or at least competitively when compared against strong baseline methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge