Multimodal Classification for Analysing Social Media


Aug 07, 2017

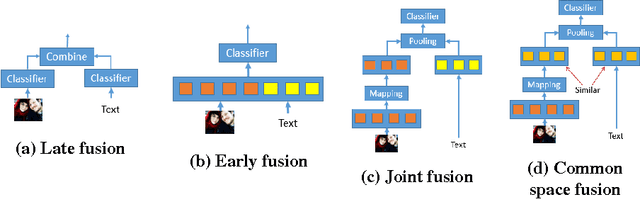

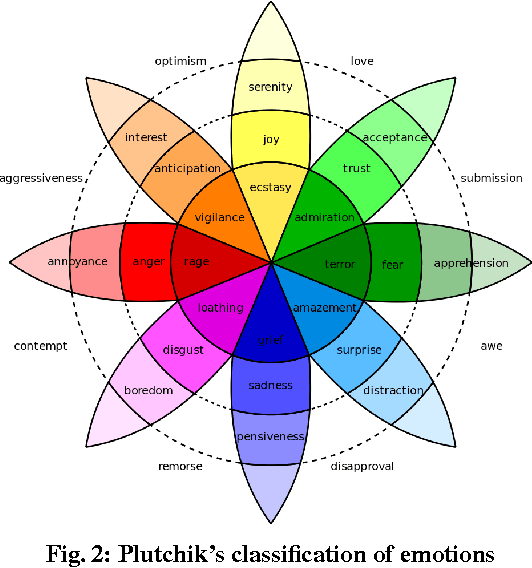

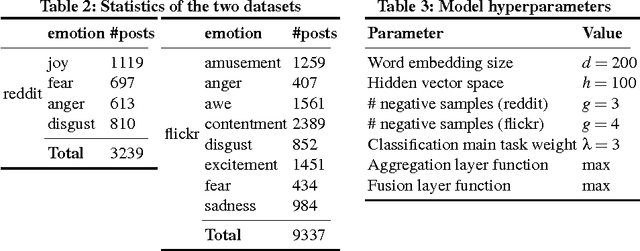

Classification of social media data is an important approach to understanding user behavior on the Web. Although information on social media comes in different modalities, such as text, images, audio, or video, traditional classification approaches usually leverage only one prominent modality. Techniques that can leverage multiple modalities are often complex and are sensitive to the absence of some modalities. In this paper, we present simple models that combine information from different modalities to classify social media content and that address both of these problems. Our models combine information from different modalities using a pooling layer, and an auxiliary learning task is used to learn a common feature space. We demonstrate the performance of our models, and their robustness to missing modalities, in the emotion classification domain. Our approaches, although simple, not only achieve significantly higher accuracy than traditional fusion approaches but also give comparable results when only one modality is available.
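The pooling-based fusion described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the choice of element-wise max pooling, the `tanh` projection, the feature dimensions, the randomly initialised weights, and the names `projections` and `fuse` are all assumptions made for illustration, and the auxiliary task that learns the common feature space is omitted. The sketch only shows why pooling over a shared space tolerates a missing modality: the output has the same size however many modalities are present.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical feature sizes for each modality and for the shared space.
TEXT_DIM, IMAGE_DIM, COMMON_DIM = 300, 512, 64

# Randomly initialised projections (assumption); a real model would learn these.
projections = {
    "text": rng.normal(scale=0.1, size=(TEXT_DIM, COMMON_DIM)),
    "image": rng.normal(scale=0.1, size=(IMAGE_DIM, COMMON_DIM)),
}


def fuse(features):
    """Project each available modality into the common space and max-pool.

    `features` maps a modality name to a 1-D feature vector, or to None
    when that modality is missing for the example.
    """
    projected = [
        np.tanh(vec @ projections[name])
        for name, vec in features.items()
        if vec is not None
    ]
    if not projected:
        raise ValueError("at least one modality is required")
    # Element-wise max over the available modalities; output size is fixed.
    return np.max(np.stack(projected), axis=0)


both = fuse({"text": rng.normal(size=TEXT_DIM), "image": rng.normal(size=IMAGE_DIM)})
text_only = fuse({"text": rng.normal(size=TEXT_DIM), "image": None})
print(both.shape, text_only.shape)
```

Both calls return a vector of length `COMMON_DIM`, so a downstream classifier can consume the fused representation unchanged when only text (or only an image) is available.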
