Multimodal Active Measurement for Human Mesh Recovery in Close Proximity


Oct 12, 2023

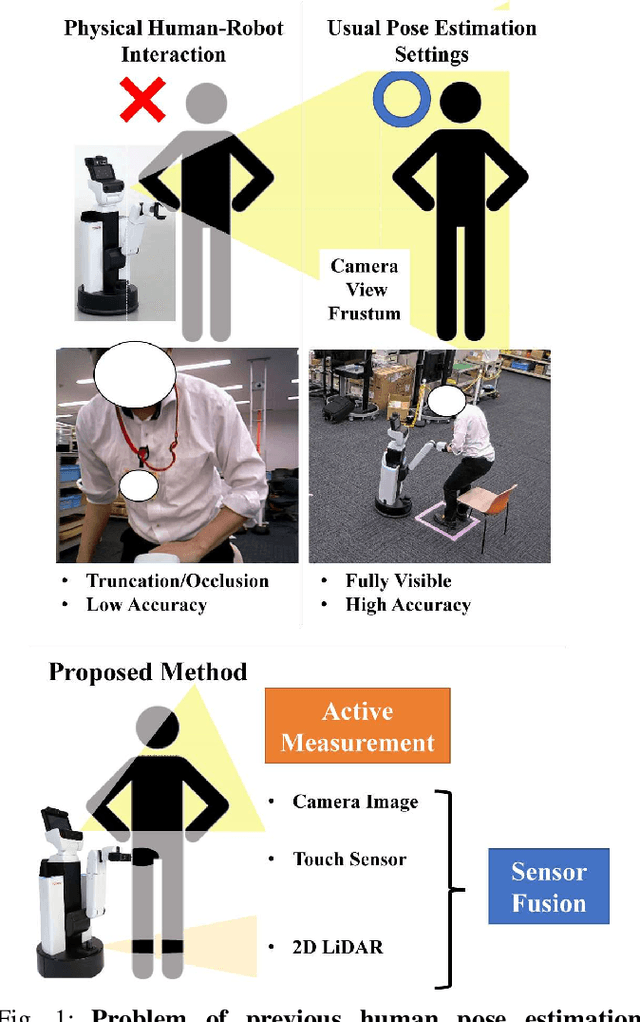

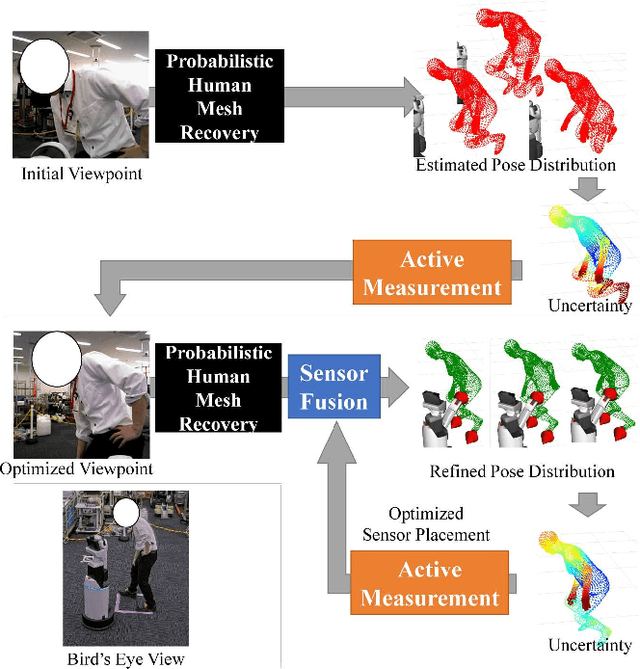

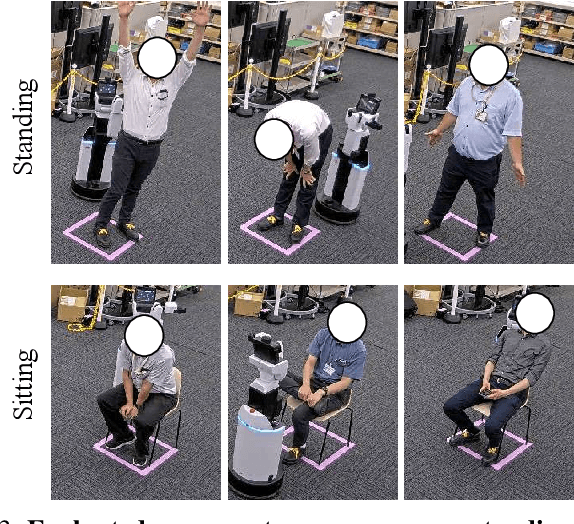

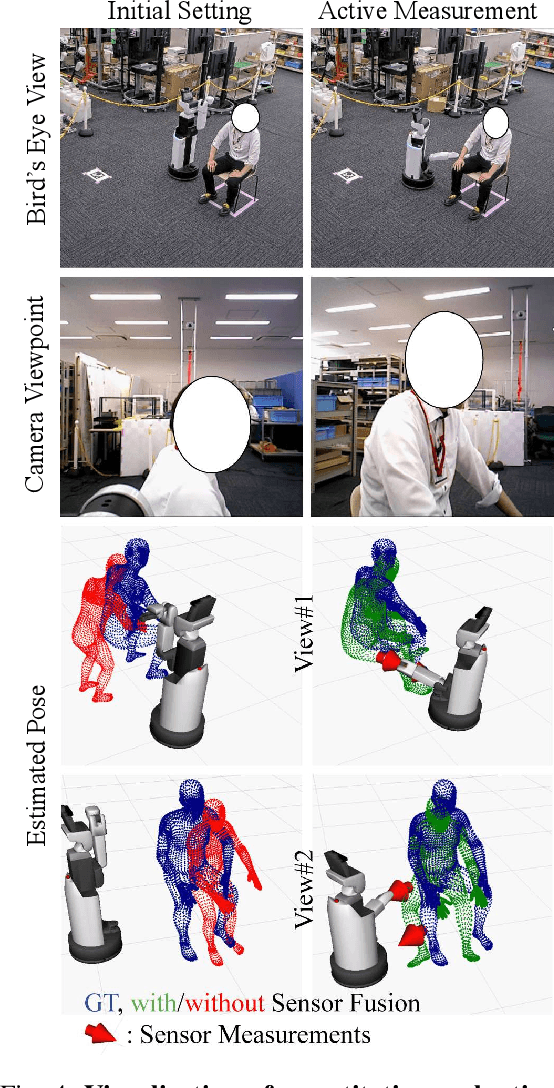

For safe and sophisticated physical human-robot interaction (pHRI), a robot needs to accurately estimate the body pose or mesh of the target person. In pHRI scenarios, however, the target person is usually close to the robot, so the robot's onboard cameras cannot observe the whole body. The resulting severe truncation and occlusion degrade the accuracy of human pose estimation. To improve the accuracy of human pose estimation and mesh recovery from this limited camera information, we propose an active measurement and sensor fusion framework that combines the onboard cameras with other sensors such as touch sensors and 2D LiDAR. Touch and LiDAR measurements are obtained incidentally during pHRI at no additional cost. They are sparse, but they provide reliable and informative cues for human mesh recovery. In our active measurement process, camera viewpoints and sensor placements are optimized based on the uncertainty of the estimated pose, which is closely related to the truncated or occluded areas. In our sensor fusion process, we fuse the sensor measurements with the camera-based pose estimate by minimizing the distance between the estimated mesh and the measured positions. Our method is agnostic to robot configurations. Experiments were conducted using the Toyota Human Support Robot, which has a camera, a 2D LiDAR, and a touch sensor on its arm. In quantitative comparisons, our proposed method achieved higher human pose estimation accuracy. Furthermore, it reliably estimated the pose of the target person in practical settings, such as a person occluded by a blanket and a standing-aid task performed with the robot arm.
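The fusion step described in the abstract, minimizing the distance between the estimated mesh and the measured positions, can be illustrated with a small sketch. This is not the paper's implementation: it assumes the mesh is a plain array of vertices and optimizes only a rigid translation, whereas the actual method fuses measurements into the estimated pose. The names `nearest_vertex_residuals`, `fuse_by_translation`, and `mean_distance` are hypothetical and chosen for illustration.

```python
import numpy as np


def nearest_vertex_residuals(vertices, points):
    """Return, for each measured point, the vector from its nearest vertex to the point."""
    # Pairwise differences, shape (num_points, num_vertices, 3).
    diffs = points[:, None, :] - vertices[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    nearest = np.argmin(dists, axis=1)
    return diffs[np.arange(len(points)), nearest]


def mean_distance(vertices, points):
    """Mean distance from each measured point to its nearest mesh vertex."""
    residuals = nearest_vertex_residuals(vertices, points)
    return float(np.linalg.norm(residuals, axis=1).mean())


def fuse_by_translation(vertices, points, steps=50, lr=0.5):
    """Assumed simplification: find a translation that pulls the mesh toward
    sparse measurements (e.g. touch or LiDAR points) by gradient descent."""
    offset = np.zeros(3)
    for _ in range(steps):
        residuals = nearest_vertex_residuals(vertices + offset, points)
        # The gradient of 0.5 * mean squared distance w.r.t. the offset
        # is -mean(residuals), so we step along +mean(residuals).
        offset += lr * residuals.mean(axis=0)
    return offset
```

A usage example under the same assumptions: given a camera-based vertex estimate `vertices` of shape `(N, 3)` and a handful of measured contact points `points` of shape `(M, 3)`, `vertices + fuse_by_translation(vertices, points)` gives the shifted mesh, and `mean_distance` can be compared before and after to check that the sparse measurements are now better explained.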
