Multilingual Unsupervised Neural Machine Translation with Denoising Adapters


Oct 20, 2021

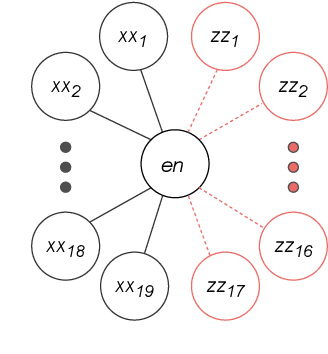

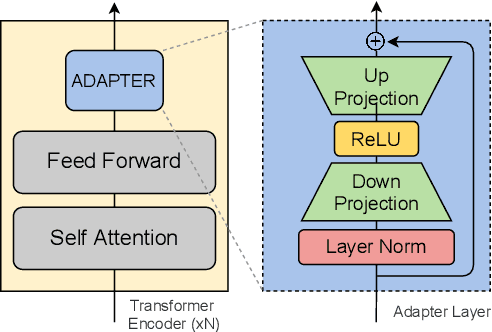

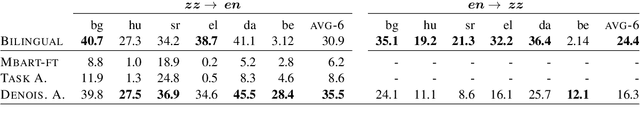

We consider the problem of multilingual unsupervised machine translation: translating to and from languages that have only monolingual data, with the help of auxiliary parallel language pairs. The standard way to leverage the monolingual data in this setting has been back-translation, which is computationally costly and hard to tune. In this paper we instead propose denoising adapters, adapter layers trained with a denoising objective, on top of pre-trained mBART-50. Beyond the modularity and flexibility of this approach, we show that the resulting translations are on par with back-translation as measured by BLEU, and that the approach allows unseen languages to be added incrementally.

* Accepted as a long paper to EMNLP 2021
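
To make the idea of an adapter layer with a denoising objective more concrete, here is a minimal sketch in plain Python. It is not the paper's implementation. It assumes a standard bottleneck adapter with a residual connection and uses simple random token masking as the noise function. The names `add_noise`, `adapter`, and `MASK`, as well as the masking probability, are hypothetical and chosen only for illustration.

```python
import random

MASK = "<mask>"  # hypothetical mask token, not taken from the paper


def add_noise(tokens, mask_prob=0.3, seed=0):
    """Corrupt a sentence by masking each token with probability mask_prob.

    Assumption: plain token masking stands in for whatever noise
    function the denoising objective actually uses.
    """
    rng = random.Random(seed)
    return [MASK if rng.random() < mask_prob else tok for tok in tokens]


def adapter(hidden, w_down, w_up):
    """Bottleneck adapter (assumed form): hidden + W_up * relu(W_down * hidden).

    hidden : list of floats, one hidden state of size d
    w_down : bottleneck-by-d matrix (list of rows)
    w_up   : d-by-bottleneck matrix (list of rows)
    """
    # Project down to the bottleneck and apply ReLU.
    bottleneck = [
        max(0.0, sum(w * h for w, h in zip(row, hidden))) for row in w_down
    ]
    # Project back up to the hidden size.
    update = [sum(w * b for w, b in zip(row, bottleneck)) for row in w_up]
    # Residual connection: the adapter only adds a correction.
    return [h + u for h, u in zip(hidden, update)]


# Usage: corrupt a monolingual sentence; a denoising objective would then
# train the adapter weights to reconstruct the clean sentence while the
# pre-trained model's own weights stay fixed.
clean = ["the", "cat", "sat", "on", "the", "mat"]
noisy = add_noise(clean, mask_prob=0.3, seed=1)

# With a zero up-projection the adapter leaves the hidden state unchanged,
# so inserting it does not disturb the pre-trained model at initialization.
hidden = [0.5, -1.0, 2.0]
w_down = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.4]]
w_up_zero = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
unchanged = adapter(hidden, w_down, w_up_zero)
```

In this sketch only `w_down` and `w_up` would be trained, which is one way to read the modularity claim: a new language could get its own small set of adapter weights without retraining the shared model.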
