Multilingual Factor Analysis

Paper and Code

May 14, 2019

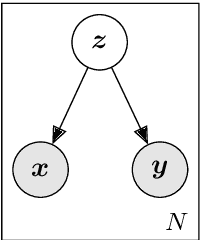

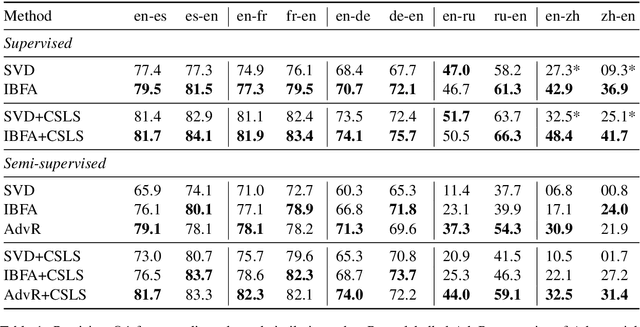

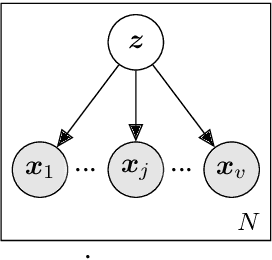

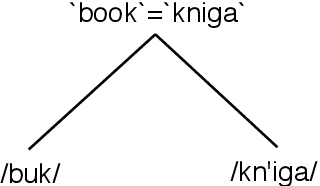

In this work we approach the task of learning multilingual word representations in an offline manner by fitting a generative latent variable model to a multilingual dictionary. We model equivalent words in different languages as different views of the same word generated by a common latent variable representing their latent lexical meaning. We explore the task of alignment by querying the fitted model for multilingual embeddings achieving competitive results across a variety of tasks. The proposed model is robust to noise in the embedding space making it a suitable method for distributed representations learned from noisy corpora.

* Proceedings of the 57th Annual Meeting of the Association for

Computational Linguistics

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge