Multi-view Contrastive Learning for Online Knowledge Distillation

Paper and Code

Jun 07, 2020

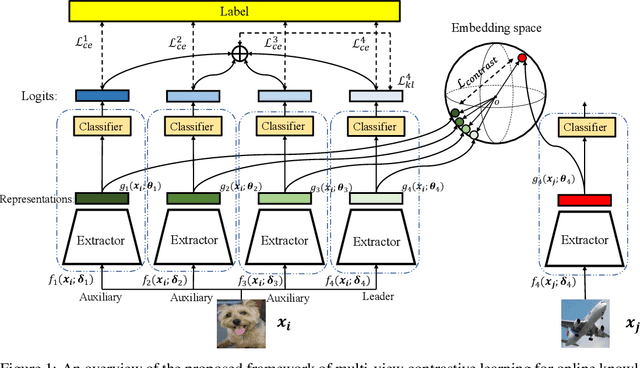

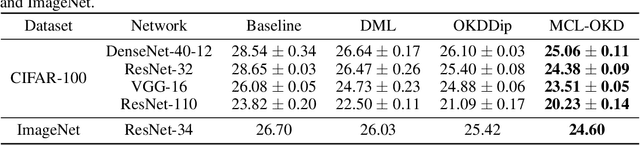

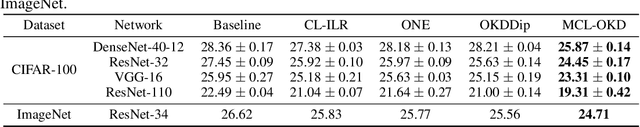

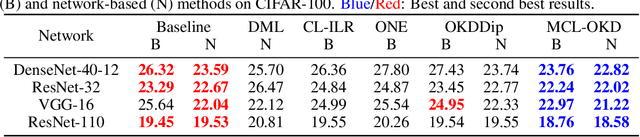

Existing Online Knowledge Distillation (OKD) methods perform collaborative, mutual learning among multiple peer networks using their probabilistic outputs, but ignore representational knowledge. We therefore introduce Multi-view Contrastive Learning (MCL) for OKD to implicitly capture correlations among the representations encoded by multiple peer networks, which provide different views of the input data samples. A contrastive loss maximizes the agreement of positive data pairs while pushing negative data pairs apart in the embedding space across views. Benefiting from MCL, we learn a more discriminative representation for classification than previous OKD methods. Experimental results on image classification and few-shot learning demonstrate that our MCL-OKD outperforms other state-of-the-art OKD and KD methods by large margins without incurring additional inference cost.
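To make the idea of a contrastive loss across views concrete, the following is a minimal sketch, not the paper's implementation. It assumes each peer network emits one embedding per sample, that embeddings of the same sample from two different peers form a positive pair, and that embeddings of different samples form negative pairs. The InfoNCE-style formulation, the function names (`info_nce`, `multi_view_contrastive_loss`), and the `temperature` value are illustrative assumptions; the abstract does not specify the exact loss.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)


def info_nce(view_a, view_b, temperature=0.1):
    """InfoNCE-style loss between two views, each of shape (batch, dim).

    Row i of view_a and row i of view_b are treated as a positive pair
    (same input sample seen by two peers); all other rows of view_b act
    as negatives for row i of view_a. This is an assumed formulation.
    """
    a = l2_normalize(view_a)
    b = l2_normalize(view_b)
    logits = a @ b.T / temperature                       # (batch, batch)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal; average their negative log-probability.
    return float(-np.mean(np.diag(log_prob)))


def multi_view_contrastive_loss(views, temperature=0.1):
    """Average the pairwise loss over all ordered pairs of distinct views."""
    total, pairs = 0.0, 0
    for i in range(len(views)):
        for j in range(len(views)):
            if i != j:
                total += info_nce(views[i], views[j], temperature)
                pairs += 1
    return total / pairs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    base = rng.normal(size=(8, 16))
    # Three hypothetical peers that roughly agree on every sample.
    peers = [base + 0.01 * rng.normal(size=base.shape) for _ in range(3)]
    print(multi_view_contrastive_loss(peers))
```

Under these assumptions, the loss is small when peers embed the same sample close together and large when matching rows correspond to different samples, which is the behavior the abstract describes as maximizing agreement of positive pairs while pushing negative pairs apart.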
