Multi-Task Network for Noise-Robust Keyword Spotting and Speaker Verification using CTC-based Soft VAD and Global Query Attention

May 16, 2020

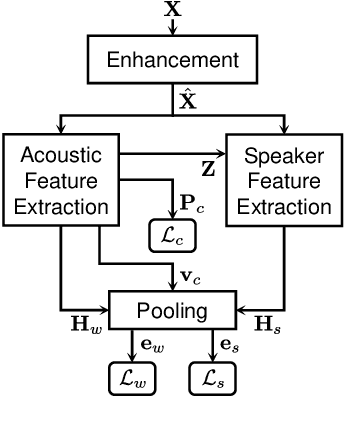

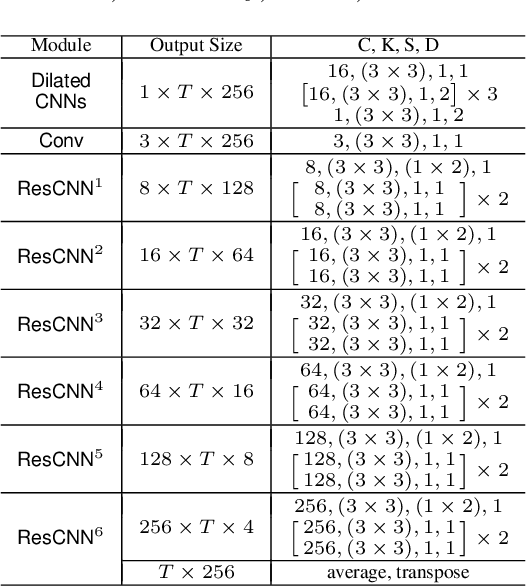

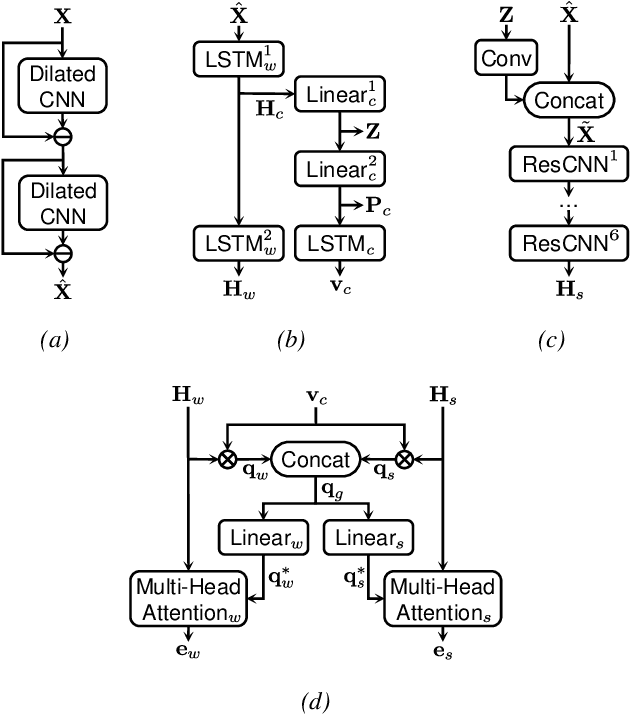

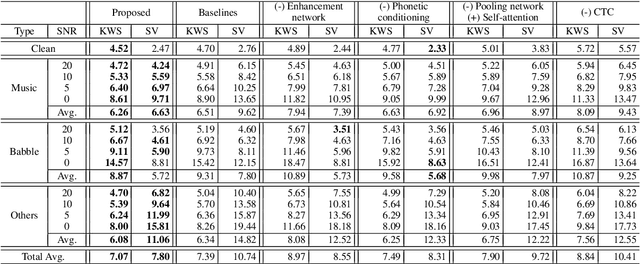

Keyword spotting (KWS) and speaker verification (SV) have been studied independently, although the acoustic and speaker domains are known to be complementary. In this paper, we propose a multi-task network that performs KWS and SV simultaneously to fully utilize the interrelated domain information. The multi-task network tightly combines the sub-networks to improve performance in challenging conditions such as noisy environments, open-vocabulary KWS, and short-duration SV, by introducing two novel techniques: connectionist temporal classification (CTC)-based soft voice activity detection (VAD) and global query attention. Frame-level acoustic and speaker information is integrated using phonetically derived weights to form a word-level global representation, which is then used to aggregate feature vectors into discriminative embeddings. Our proposed approach shows 4.06% and 26.71% relative improvements in equal error rate (EER) over the baselines for the two tasks. We also present a visualization example and results of ablation experiments.
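The aggregation described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not give the exact formulation, so the sketch assumes that the soft VAD weight of a frame is one minus its CTC blank posterior, that the global query is the weighted mean of concatenated acoustic and speaker features, and that attention is a scaled dot product with a hypothetical projection matrix `proj`. All function names, dimensions, and the random inputs are illustrative.

```python
import numpy as np

def soft_vad_weights(ctc_posteriors, blank_index=0):
    """Per-frame weights, assumed here to be 1 - P(blank), normalized to sum to 1."""
    w = 1.0 - ctc_posteriors[:, blank_index]
    return w / (w.sum() + 1e-8)

def global_query(acoustic, speaker, weights):
    """Weighted mean of concatenated frame features: one utterance-level vector."""
    frames = np.concatenate([acoustic, speaker], axis=1)  # (T, Da + Ds)
    return weights @ frames                               # (Da + Ds,)

def attentive_pool(features, query, proj):
    """Aggregate frame features (T, D) using scaled dot-product attention
    between each frame and the projected global query (assumed scoring form)."""
    q = proj @ query                                      # (D,)
    scores = features @ q / np.sqrt(features.shape[1])    # (T,)
    scores = scores - scores.max()                        # numerical stability
    attn = np.exp(scores)
    attn = attn / attn.sum()
    return attn @ features, attn                          # (D,), (T,)

# Toy inputs standing in for real network outputs (all hypothetical).
rng = np.random.default_rng(0)
T, Da, Ds, V = 50, 8, 6, 5            # frames, acoustic dim, speaker dim, CTC labels
logits = rng.normal(size=(T, V))
posteriors = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
acoustic = rng.normal(size=(T, Da))
speaker = rng.normal(size=(T, Ds))

w = soft_vad_weights(posteriors)
q = global_query(acoustic, speaker, w)
proj = rng.normal(size=(Ds, Da + Ds))  # stands in for a learned projection
embedding, attn = attentive_pool(speaker, q, proj)
```

In this sketch, frames dominated by the blank label contribute little to the global query, which is one plausible way for phonetic information to suppress non-speech frames before the speaker embedding is pooled.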
