Multi-fidelity Bayesian Optimization with Max-value Entropy Search

Jan 24, 2019

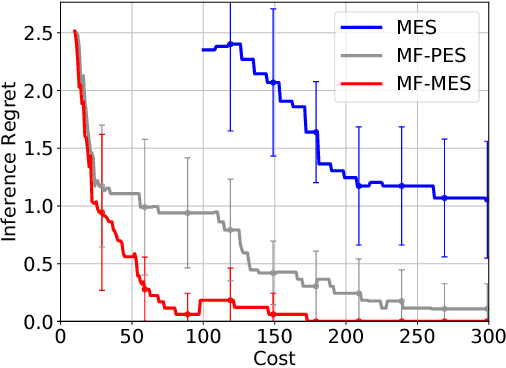

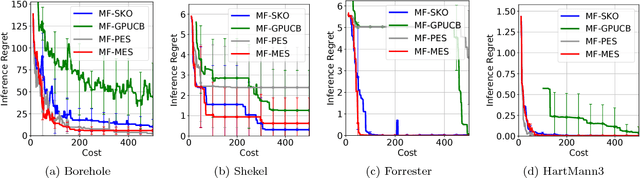

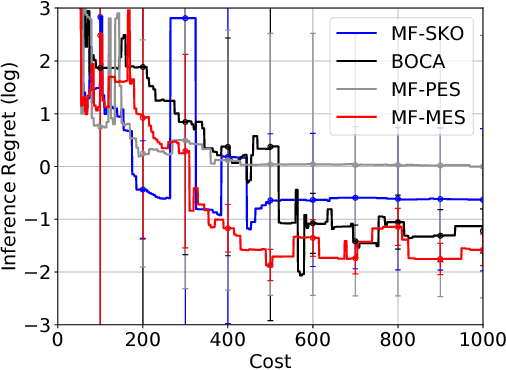

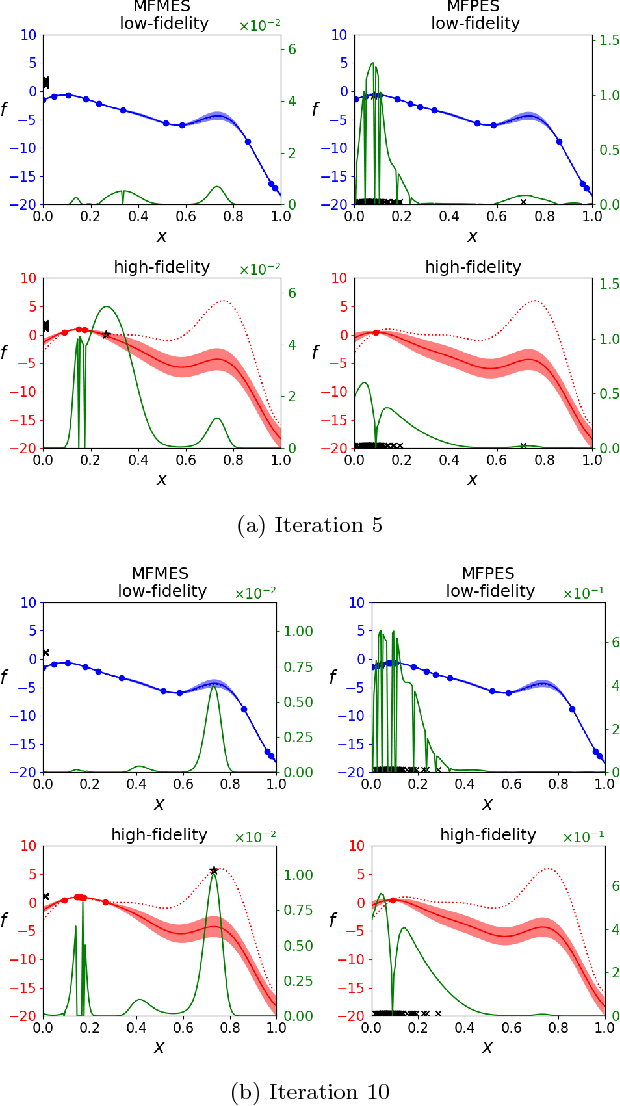

Bayesian optimization (BO) is an effective tool for black-box optimization problems in which each evaluation of the objective function is expensive. In practice, cheaper lower-fidelity approximations of the objective function are often available. Multi-fidelity Bayesian optimization (MFBO) has recently attracted considerable attention because exploiting those cheaper observations can dramatically accelerate the optimization process. We propose a novel information-theoretic approach to MFBO. Information-based approaches are popular and empirically successful in BO, but existing information-based MFBO methods are hampered by the difficulty of accurately estimating the information gain. Our approach is based on a variant of information-based BO called max-value entropy search (MES), which greatly simplifies evaluation of the information gain in MFBO. In fact, our acquisition function can be computed analytically except for a one-dimensional integral and sampling, both of which can be evaluated efficiently and accurately. We demonstrate the effectiveness of our approach on synthetic and benchmark datasets, and further show a real-world application to materials science data.
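The abstract does not give the acquisition function itself, so the following is only a rough illustration under stated assumptions, not the authors' implementation. `mes_single` is the standard single-fidelity MES acquisition of Wang and Jegelka (2017), averaged over sampled max values y*. `mes_lower_fidelity` is a hypothetical sketch of how a lower-fidelity query can reduce to a one-dimensional integral: it assumes the high- and low-fidelity function values at a point are bivariate Gaussian with correlation `rho`, applies the MES truncation f_high <= y*, and computes the entropy of the resulting low-fidelity density with the trapezoid rule. All function and parameter names are illustrative, and how the y* samples are drawn is left out.

```python
import math


def norm_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z):
    """Standard normal CDF via erfc (accurate in the lower tail)."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def mes_single(mu, sigma, max_samples):
    """Single-fidelity MES: average of g*phi(g)/(2*Phi(g)) - log Phi(g),
    with g = (y* - mu) / sigma, over the sampled max values y*."""
    total = 0.0
    for y_star in max_samples:
        g = (y_star - mu) / sigma
        cdf = norm_cdf(g)
        total += g * norm_pdf(g) / (2.0 * cdf) - math.log(cdf)
    return total / len(max_samples)


def mes_lower_fidelity(mu_h, sigma_h, mu_l, sigma_l, rho, max_samples,
                       n_grid=4001, width=10.0):
    """Hypothetical sketch: entropy reduction of f_low after truncating
    f_high at y*, assuming (f_high, f_low) is bivariate Gaussian with
    correlation |rho| < 1. The conditioned density of f_low is
        N(f; mu_l, sigma_l^2) * Phi((y* - m(f)) / s) / Phi((y* - mu_h) / sigma_h),
    where m(f), s are the mean and sd of f_high given f_low = f. Its
    entropy is a 1-D integral, evaluated with the trapezoid rule."""
    h_prior = 0.5 * math.log(2.0 * math.pi * math.e * sigma_l ** 2)
    sd_cond = sigma_h * math.sqrt(1.0 - rho ** 2)  # sd of f_high given f_low
    lo = mu_l - width * sigma_l
    step = 2.0 * width * sigma_l / (n_grid - 1)
    total = 0.0
    for y_star in max_samples:
        z = norm_cdf((y_star - mu_h) / sigma_h)  # P(f_high <= y*)
        h_cond = 0.0
        for i in range(n_grid):
            f = lo + i * step
            p = norm_pdf((f - mu_l) / sigma_l) / sigma_l
            mean_cond = mu_h + rho * sigma_h / sigma_l * (f - mu_l)
            q = p * norm_cdf((y_star - mean_cond) / sd_cond) / z
            term = -q * math.log(q) if q > 0.0 else 0.0  # -q log q, 0 at q = 0
            weight = 0.5 if i in (0, n_grid - 1) else 1.0  # trapezoid weights
            h_cond += weight * term * step
        total += h_prior - h_cond
    return total / len(max_samples)


if __name__ == "__main__":
    samples = [0.5, 1.0]  # illustrative max-value samples, not real data
    print(mes_single(0.0, 1.0, samples))
    print(mes_lower_fidelity(0.0, 1.0, 0.0, 1.0, 0.9, samples))
```

With `rho = 0` the weighting term is constant, so the low-fidelity query carries no information about y* and the sketch returns (numerically) zero. The grid-based quadrature is chosen only for transparency; a production implementation would likely use a more efficient one-dimensional integrator.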