Multi Agent Reinforcement Learning with Multi-Step Generative Models

Jan 29, 2019

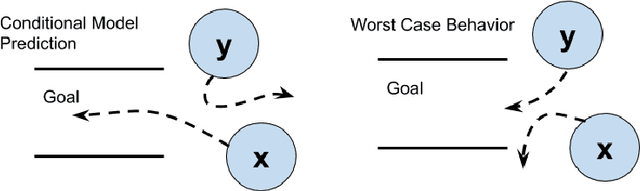

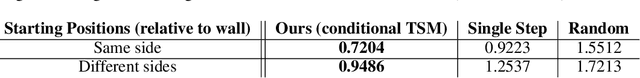

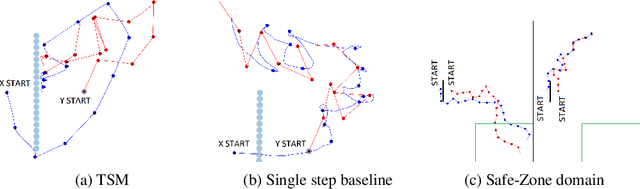

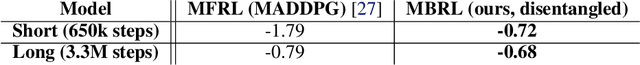

The dynamics between agents and the environment are an important component of multi-agent Reinforcement Learning (RL), and learning them provides a basis for decision making. However, a major challenge in optimizing a learned dynamics model is the accumulation of error when predicting multiple steps into the future. Recent advances in variational inference provide model-based solutions that predict complete trajectory segments and optimize over a latent representation of trajectories. For single-agent scenarios, several recent studies have explored this idea and shown its benefits over conventional methods. In this work, we extend this approach to the multi-agent case and optimize over a latent space that encodes multi-agent strategies. We discuss the challenges of optimizing over a latent variable model for multiple agents, both in the optimization algorithm and in the model representation, and propose a method for both cooperative and competitive settings based on risk-sensitive optimization. We evaluate our method on tasks in the multi-agent particle environment and on a simulated RoboCup domain.
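The error accumulation mentioned above can be illustrated with a toy example. This is a minimal sketch and not the paper's model: it assumes a hypothetical scalar linear system `x_next = a * x`, and the function name `rollout_error` and all parameter values are chosen purely for illustration. A one-step model with a small parameter error is fed its own predictions, and the gap to the true trajectory widens at every step.

```python
def rollout_error(a_true, a_model, x0, horizon):
    """Absolute gap between true and model states after each open-loop step.

    Toy scalar dynamics x_next = a * x (an illustrative assumption,
    not the dynamics model used in the paper).
    """
    x_true, x_model = x0, x0
    errors = []
    for _ in range(horizon):
        x_true = a_true * x_true
        # The model is fed its own previous prediction, so its
        # one-step error is carried into every later step.
        x_model = a_model * x_model
        errors.append(abs(x_true - x_model))
    return errors


# A 1% error in the learned coefficient, rolled out for 20 steps.
errors = rollout_error(a_true=1.0, a_model=1.01, x0=1.0, horizon=20)
print("error after 1 step:  ", errors[0])
print("error after 20 steps:", errors[-1])
```

In this toy setting the gap grows at every step of the rollout, which is the motivation the abstract gives for predicting whole trajectory segments rather than chaining one-step predictions.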
