Modeling User Empathy Elicited by a Robot Storyteller


Jul 29, 2021

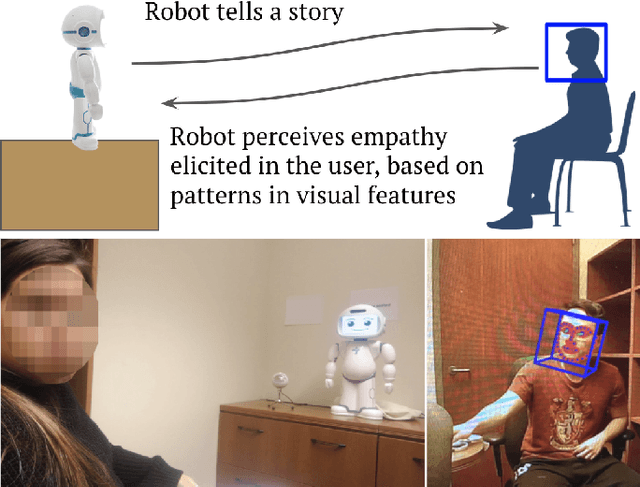

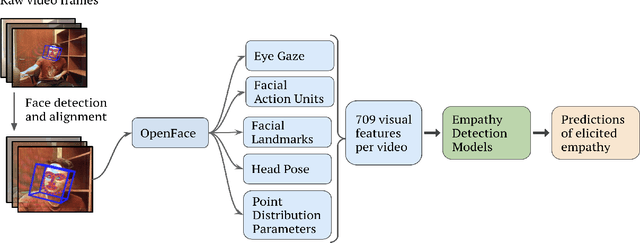

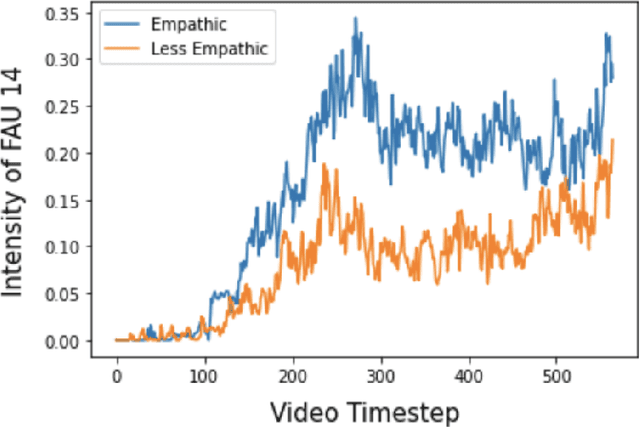

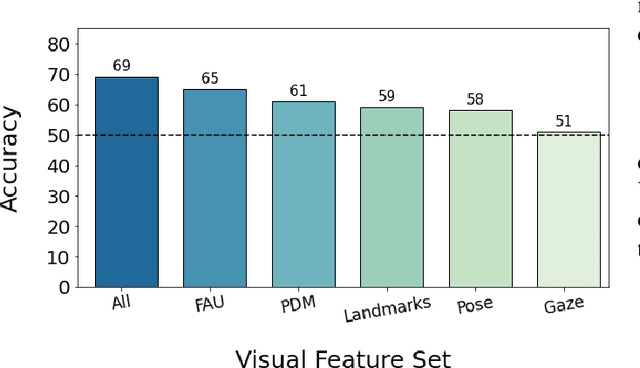

Virtual and robotic agents capable of perceiving human empathy have the potential to participate in engaging and meaningful human-machine interactions that support human well-being. Prior research in computational empathy has focused on designing empathic agents that use verbal and nonverbal behaviors to simulate empathy and attempt to elicit empathic responses from humans. The challenge of developing agents with the ability to automatically perceive elicited empathy in humans remains largely unexplored. Our paper presents the first approach to modeling user empathy elicited during interactions with a robotic agent. We collected a new dataset from the novel interaction context of participants listening to a robot storyteller (46 participants, 6.9 hours of video). After each storytelling interaction, participants answered a questionnaire that assessed their level of elicited empathy during the interaction with the robot. We conducted experiments with 8 classical machine learning models and 2 deep learning models (long short-term memory networks and temporal convolutional networks) to detect empathy by leveraging patterns in participants' visual behaviors while they were listening to the robot storyteller. Our highest-performing approach, based on XGBoost, achieved an accuracy of 69% and AUC of 72% when detecting empathy in videos. We contribute insights regarding modeling approaches and visual features for automated empathy detection. Our research informs and motivates future development of empathy perception models that can be leveraged by virtual and robotic agents during human-machine interactions.
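The abstract reports two metrics for binary empathy detection: accuracy and AUC. As a minimal sketch of how such metrics are computed from a classifier's per-video scores, the snippet below uses plain Python and toy data. The labels, scores, threshold, and function names are illustrative assumptions, not the paper's data or code.

```python
from typing import Sequence


def accuracy(labels: Sequence[int], scores: Sequence[float], threshold: float = 0.5) -> float:
    """Fraction of examples whose thresholded score matches the binary label."""
    preds = [1 if s >= threshold else 0 for s in scores]
    correct = sum(p == y for p, y in zip(preds, labels))
    return correct / len(labels)


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a randomly chosen positive example
    receives a higher score than a randomly chosen negative one
    (ties count as one half).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical): 1 = empathic, 0 = less empathic.
labels = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.4, 0.35, 0.8, 0.7, 0.1]

print(f"accuracy = {accuracy(labels, scores):.3f}")
print(f"AUC      = {roc_auc(labels, scores):.3f}")
```

Accuracy depends on the chosen decision threshold, whereas AUC summarizes ranking quality across all thresholds, which is one reason the two are often reported together.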
