Modeling Feature Representations for Affective Speech using Generative Adversarial Networks


Oct 31, 2019

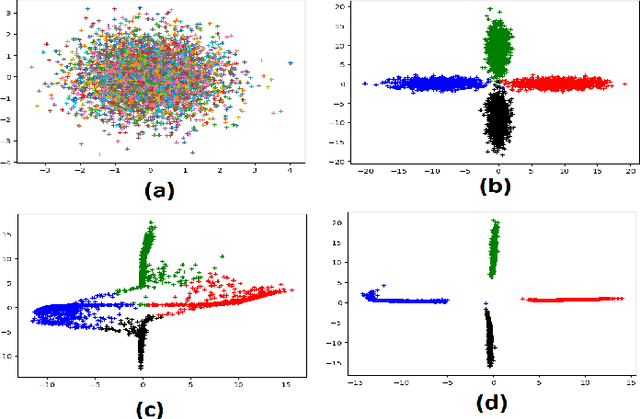

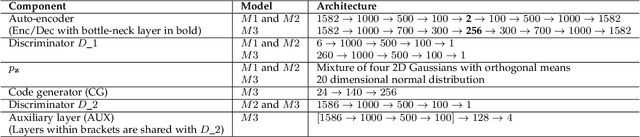

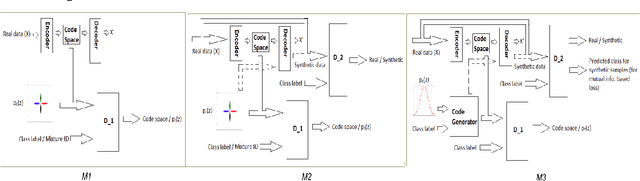

Emotion recognition is a classic field of research; a typical setup extracts features and feeds them to a classifier for prediction. Generative models, in contrast, jointly capture the distributional relationship between emotions and their feature profiles. Generative Adversarial Networks (GANs) have recently emerged as a new class of generative models and have shown considerable success in modeling distributions in computer vision and natural language understanding. In this work, we experiment with variants of GAN architectures to generate feature vectors corresponding to an emotion in two ways: (i) a generator is trained with samples from a mixture prior, where each mixture component corresponds to an emotion class and can be sampled to generate features for that emotion; (ii) a one-hot vector corresponding to an emotion is explicitly provided to the generator to generate the features. We analyze these models and propose several metrics for measuring how well the GAN models generate realistic synthetic samples. Beyond evaluation on a given dataset of interest, we perform a cross-corpus study examining the utility of the synthetic samples as additional training data in low-resource conditions.
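
The two ways of conditioning the generator on an emotion can be illustrated with a minimal NumPy sketch of how the generator inputs might be built. This is not the authors' implementation: the Gaussian mixture components, the number of classes, the latent dimensionality, and the function names `sample_mixture_prior` and `sample_one_hot_input` are all assumptions made for illustration, and the generator network itself is omitted.

```python
import numpy as np

NUM_EMOTIONS = 4  # assumed number of emotion classes
LATENT_DIM = 8    # assumed latent dimensionality


def sample_mixture_prior(labels, means, std=0.5, rng=None):
    """Scheme (i): draw each latent vector from the mixture component
    (assumed Gaussian here) that belongs to the requested emotion."""
    rng = np.random.default_rng() if rng is None else rng
    labels = np.asarray(labels)
    noise = rng.normal(0.0, std, size=(labels.shape[0], means.shape[1]))
    return means[labels] + noise


def sample_one_hot_input(labels, num_classes, latent_dim, rng=None):
    """Scheme (ii): draw class-agnostic noise and append a one-hot
    emotion vector that the generator receives explicitly."""
    rng = np.random.default_rng() if rng is None else rng
    labels = np.asarray(labels)
    noise = rng.normal(size=(labels.shape[0], latent_dim))
    one_hot = np.eye(num_classes)[labels]
    return np.concatenate([noise, one_hot], axis=1)


rng = np.random.default_rng(0)
# Hypothetical component means, one row per emotion class.
means = rng.normal(0.0, 3.0, size=(NUM_EMOTIONS, LATENT_DIM))
labels = np.array([0, 1, 2, 3, 1])

z_mix = sample_mixture_prior(labels, means, rng=rng)
z_cond = sample_one_hot_input(labels, NUM_EMOTIONS, LATENT_DIM, rng=rng)
```

In scheme (i) the emotion is encoded only by which region of the latent space the sample comes from, whereas in scheme (ii) the noise is shared across classes and the label is passed as extra input dimensions.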
