Model-agnostic bias mitigation methods with regressor distribution control for Wasserstein-based fairness metrics

Paper and Code

Nov 19, 2021

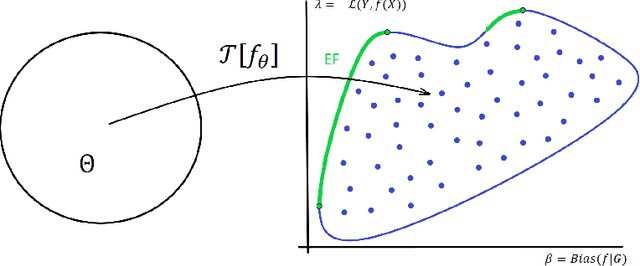

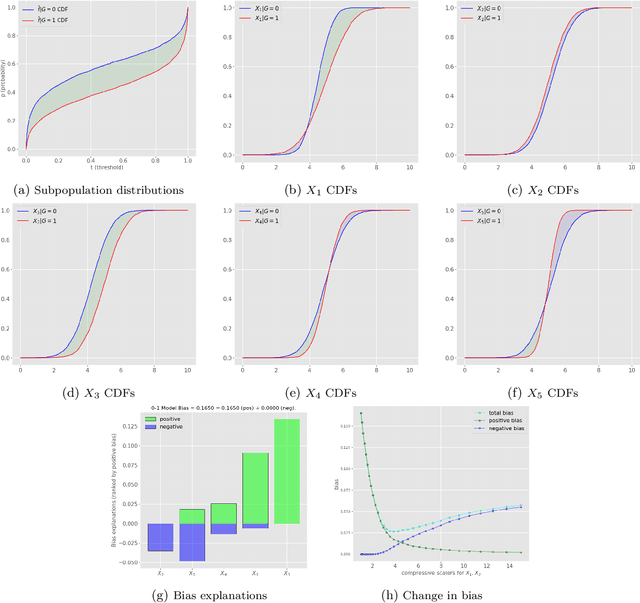

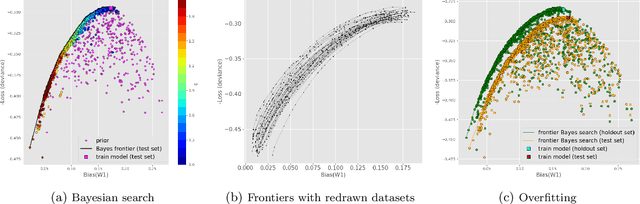

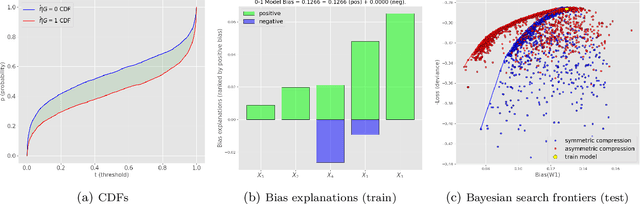

This article is a companion paper to our earlier work Miroshnikov et al. (2021) on fairness interpretability, which introduces bias explanations. In the current work, we propose a bias mitigation methodology based upon the construction of post-processed models with fairer regressor distributions for Wasserstein-based fairness metrics. By identifying the list of predictors contributing the most to the bias, we reduce the dimensionality of the problem by mitigating the bias originating from those predictors. The post-processing methodology involves reshaping the predictor distributions by balancing the positive and negative bias explanations and allows for the regressor bias to decrease. We design an algorithm that uses Bayesian optimization to construct the bias-performance efficient frontier over the family of post-processed models, from which an optimal model is selected. Our novel methodology performs optimization in low-dimensional spaces and avoids expensive model retraining.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge