Mixture of basis for interpretable continual learning with distribution shifts

Paper and Code

Jan 05, 2022

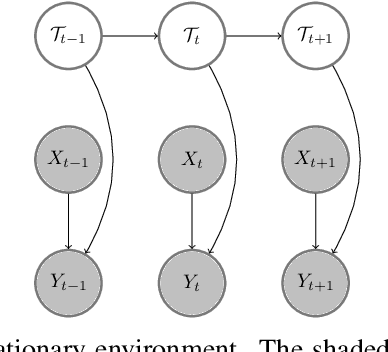

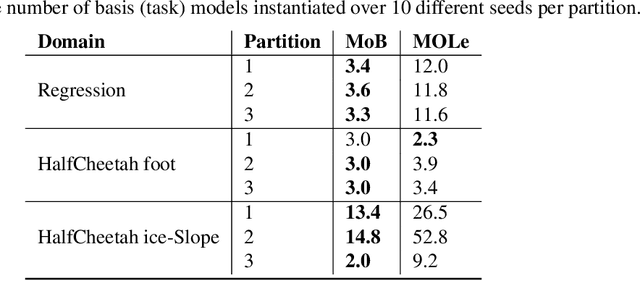

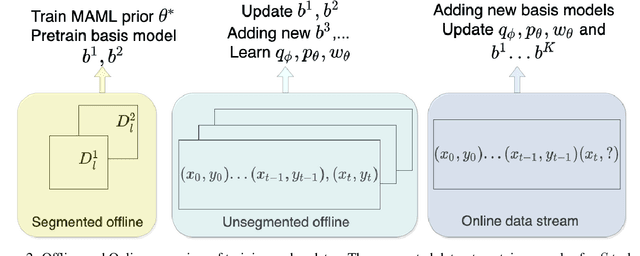

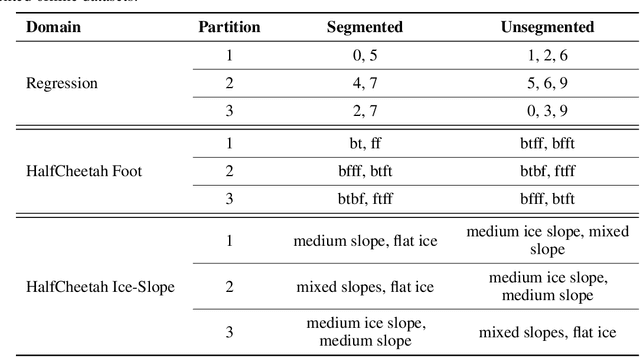

Continual learning in environments with shifting data distributions is a challenging problem with several real-world applications. In this paper we consider settings in which the data distribution(task) shifts abruptly and the timing of these shifts are not known. Furthermore, we consider a semi-supervised task-agnostic setting in which the learning algorithm has access to both task-segmented and unsegmented data for offline training. We propose a novel approach called mixture of Basismodels (MoB) for addressing this problem setting. The core idea is to learn a small set of basis models and to construct a dynamic, task-dependent mixture of the models to predict for the current task. We also propose a new methodology to detect observations that are out-of-distribution with respect to the existing basis models and to instantiate new models as needed. We test our approach in multiple domains and show that it attains better prediction error than existing methods in most cases while using fewer models than other multiple model approaches. Moreover, we analyze the latent task representations learned by MoB and show that similar tasks tend to cluster in the latent space and that the latent representation shifts at the task boundaries when tasks are dissimilar.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge