Mining Unseen Classes via Regional Objectness: A Simple Baseline for Incremental Segmentation


Nov 15, 2022

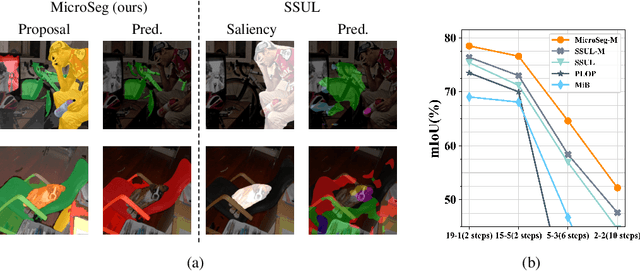

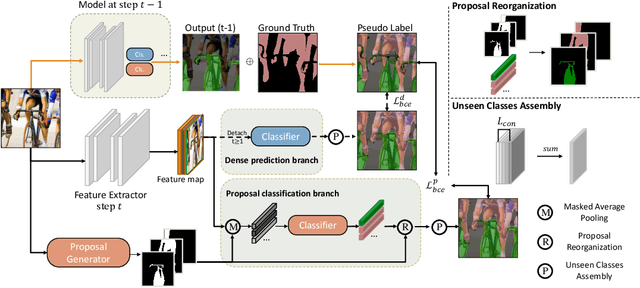

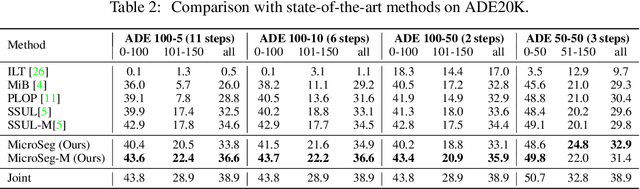

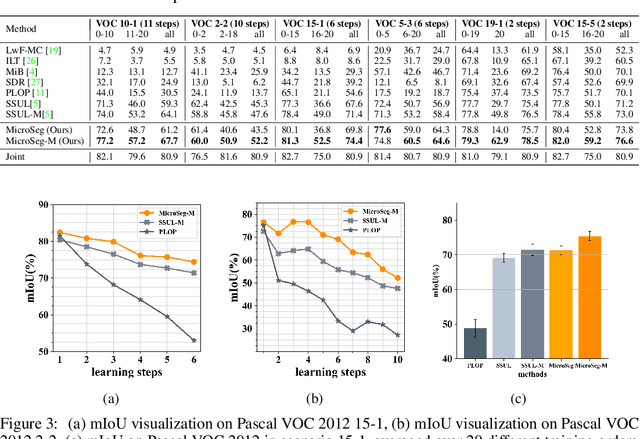

Incremental or continual learning has been extensively studied for image classification as a way to alleviate catastrophic forgetting, a phenomenon in which previously learned knowledge is lost when new concepts are learned. In class-incremental semantic segmentation, this phenomenon is often much worse because of background shift: some concepts learned at previous stages are assigned to the background class at the current training stage, which significantly reduces performance on those old concepts. To address this issue, we propose a simple yet effective method named Mining unseen Classes via Regional Objectness for Segmentation (MicroSeg). MicroSeg is based on the assumption that background regions with strong objectness may belong to concepts from historical or future stages. To avoid forgetting old knowledge at the current training stage, MicroSeg first splits the given image into hundreds of segment proposals with a proposal generator. Segment proposals with strong objectness that fall in the background are then clustered and assigned newly defined labels during optimization. In this way, the distribution characteristics of old concepts in the feature space can be better perceived, which relieves the catastrophic forgetting caused by background shift. Extensive experiments on the Pascal VOC and ADE20K datasets show results competitive with state-of-the-art methods, validating the effectiveness of the proposed MicroSeg.
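The relabeling step described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `relabel_background`, its thresholds (`min_objectness`, `min_bg_ratio`), the label offset `first_unseen_id`, and the use of fixed, pre-computed `centroids` are all assumptions made for illustration. The abstract says the clustering happens during optimization; here it is replaced by a simple nearest-centroid assignment on the mean feature of each proposal.

```python
import numpy as np

def relabel_background(labels, proposals, objectness, features, centroids,
                       bg_id=0, first_unseen_id=100,
                       min_objectness=0.5, min_bg_ratio=0.9):
    """Assign hypothetical 'unseen' labels to object-like background regions.

    labels:     (H, W) int array, ground truth of the current stage
    proposals:  (P, H, W) bool array, one binary mask per segment proposal
    objectness: (P,) float array, objectness score per proposal
    features:   (H, W, C) float array, per-pixel features
    centroids:  (K, C) float array, assumed cluster centres for unseen classes
    """
    out = labels.copy()
    bg = labels == bg_id
    for mask, score in zip(proposals, objectness):
        area = mask.sum()
        # Skip empty proposals and proposals with weak objectness.
        if area == 0 or score < min_objectness:
            continue
        region = mask & bg
        # Keep only proposals that lie almost entirely in the background.
        if not region.any() or region.sum() / area < min_bg_ratio:
            continue
        # Mean feature of the region, matched to the nearest centroid.
        proto = features[region].mean(axis=0)
        k = int(np.argmin(np.linalg.norm(centroids - proto, axis=1)))
        out[region] = first_unseen_id + k
    return out
```

In this sketch, pixels that keep `bg_id` are treated as plain background, while pixels relabeled to `first_unseen_id + k` act as placeholders for concepts from past or future stages, so they are no longer lumped together with the background during training.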
