Mincut pooling in Graph Neural Networks

Jul 11, 2019

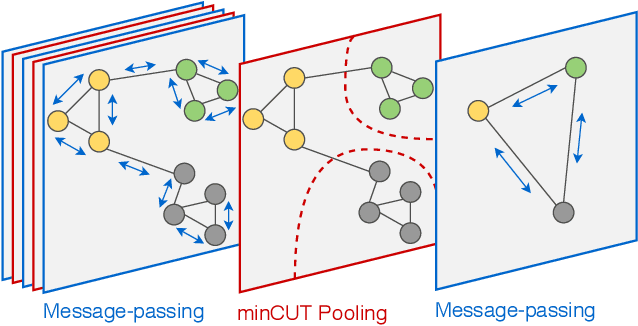

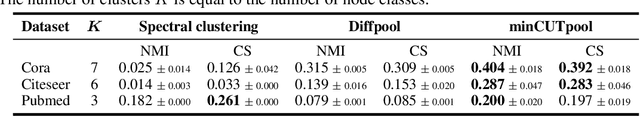

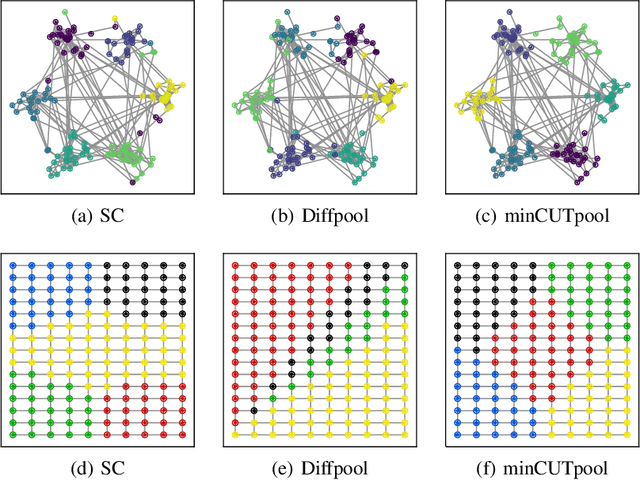

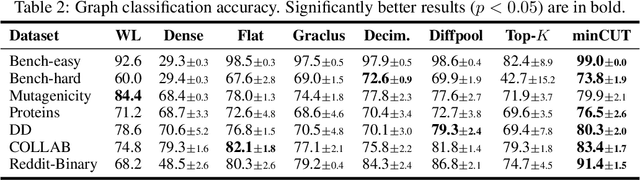

The advance of node pooling operations in Graph Neural Networks (GNNs) has lagged behind the feverish design of new message-passing techniques, and pooling remains an important and challenging endeavor for the design of deep architectures. In this paper, we propose a pooling operation for GNNs that leverages a differentiable unsupervised loss based on the mincut optimization objective. For each node, our method learns a soft cluster assignment vector that depends on the node features, the target inference task (e.g., graph classification), and, thanks to the mincut objective, also on the graph connectivity. Graph pooling is obtained by applying the matrix of assignment vectors to the adjacency matrix and the node features. We validate the effectiveness of the proposed pooling method on a variety of supervised and unsupervised tasks.
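The abstract describes the pooling step only in words, so the following is a minimal, dependency-free sketch of one way it could look, not the authors' implementation. It makes several assumptions that the text above does not state: the soft assignment matrix `s` comes from a single linear map followed by a row-wise softmax, the unsupervised loss is a normalized-cut relaxation `-Tr(SᵀAS) / Tr(SᵀDS)`, and the names `mincut_pool` and `weights` are hypothetical. Any regularization or post-processing of the pooled adjacency is left out.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def trace(m):
    return sum(m[i][i] for i in range(len(m)))


def softmax_rows(logits):
    """Row-wise softmax, so every node's assignment vector sums to 1."""
    out = []
    for row in logits:
        top = max(row)  # subtract the max for numerical stability
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def mincut_pool(adj, feats, weights):
    """Pool a graph of N nodes into K clusters (illustrative sketch).

    adj:     N x N symmetric adjacency matrix
    feats:   N x F node features
    weights: F x K parameters (hypothetical; learned in a real model)
    """
    n = len(adj)
    s = softmax_rows(matmul(feats, weights))   # N x K soft assignments
    s_t = transpose(s)
    adj_pool = matmul(matmul(s_t, adj), s)     # K x K pooled adjacency
    feats_pool = matmul(s_t, feats)            # K x F pooled features
    # Diagonal degree matrix D, used to normalize the cut term (assumed form).
    deg = [[float(sum(adj[i])) if i == j else 0.0 for j in range(n)] for i in range(n)]
    volume = matmul(matmul(s_t, deg), s)
    loss = -trace(adj_pool) / trace(volume)    # lies in [-1, 0] for symmetric adj
    return adj_pool, feats_pool, s, loss


# Toy graph: two triangles (nodes 0-2 and 3-5) joined by the edge 2-3.
adj = [
    [0, 1, 1, 0, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 0, 1, 0, 0],
    [0, 0, 1, 0, 1, 1],
    [0, 0, 0, 1, 0, 1],
    [0, 0, 0, 1, 1, 0],
]
feats = [[1.0, 0.0]] * 3 + [[0.0, 1.0]] * 3
weights = [[4.0, 0.0], [0.0, 4.0]]

adj_pool, feats_pool, s, loss = mincut_pool(adj, feats, weights)
print(adj_pool, feats_pool, loss)
```

In this toy setup the features already separate the two triangles, so the assignments are close to one-hot and the loss is close to its value for a hard two-way split. In a trained model the loss would be minimized jointly with the task loss, which is how the assignments come to depend on the features, the task, and the connectivity at once.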
