MHAttnSurv: Multi-Head Attention for Survival Prediction Using Whole-Slide Pathology Images

Oct 22, 2021

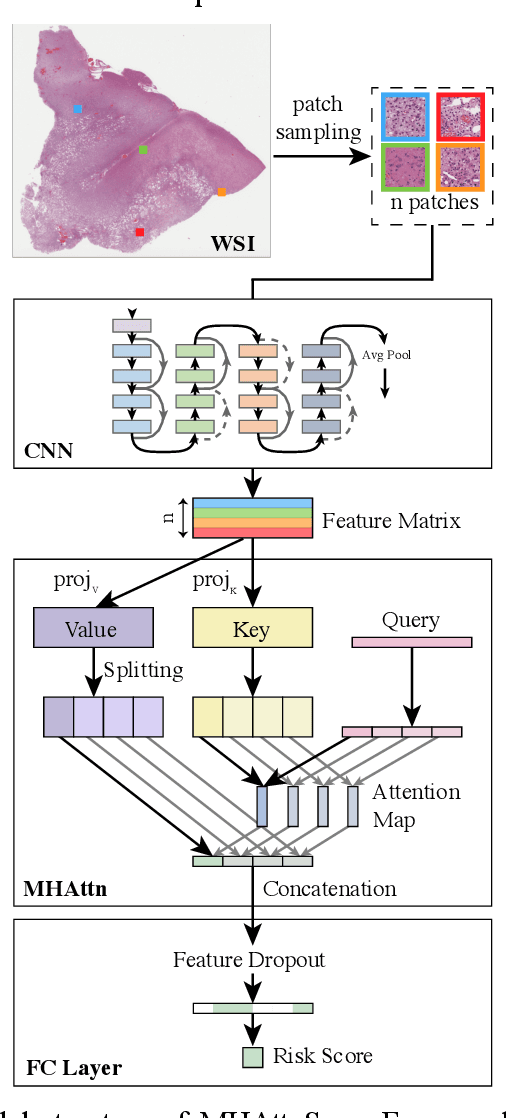

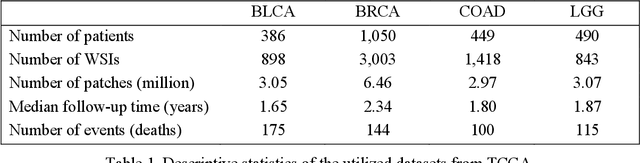

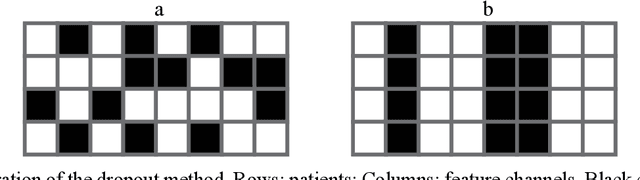

In pathology, whole-slide image (WSI)-based survival prediction has attracted increasing interest. However, given the large size of WSIs and the lack of pathologist annotations, extracting prognostic information from WSIs remains a challenging task. Previous studies have used multiple instance learning approaches to combine information from multiple randomly sampled patches, but different visual patterns may contribute differently to prognosis prediction. In this study, we developed a multi-head attention approach that focuses on various parts of a tumor slide for more comprehensive information extraction from WSIs. We evaluated our approach on four cancer types from The Cancer Genome Atlas database. Our model achieved an average c-index of 0.640, outperforming two existing state-of-the-art approaches for WSI-based survival prediction, which achieved average c-indices of 0.603 and 0.619, respectively, on the same datasets. Visualization of our attention maps reveals that each attention head focuses synergistically on different morphological patterns.
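The two technical ideas in the abstract, pooling patch features with several attention heads and scoring predictions with the c-index, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `multi_head_attention_pool` and `concordance_index`, the linear scoring weights `w_score`, and the choice to concatenate the per-head summaries are all assumptions made for illustration. The c-index shown is the standard Harrell's concordance index, with ties in predicted risk counted as 0.5.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_attention_pool(patches, w_score):
    """Pool patch features into one slide-level vector (illustrative only).

    patches: (n_patches, d) array of patch feature vectors.
    w_score: (d, n_heads) hypothetical scoring weights, one column per head.
    Returns the concatenated per-head summaries, shape (n_heads * d,),
    and the attention weights, shape (n_heads, n_patches).
    """
    scores = patches @ w_score          # (n_patches, n_heads)
    attn = softmax(scores.T, axis=1)    # each head's weights sum to 1 over patches
    pooled = attn @ patches             # (n_heads, d): one weighted mean per head
    return pooled.reshape(-1), attn


def concordance_index(times, events, risks):
    """Harrell's c-index.

    A pair (i, j) is comparable when subject i had an observed event
    and a shorter time than subject j. The pair is concordant when the
    model assigned subject i the higher risk.
    """
    concordant, comparable = 0.0, 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            if events[i] and times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    patches = rng.normal(size=(5, 3))   # 5 patches, 3 features each (toy data)
    w_score = rng.normal(size=(3, 2))   # 2 attention heads
    embedding, attn = multi_head_attention_pool(patches, w_score)
    print(embedding.shape, attn.shape)

    times = [1, 2, 3, 4]
    events = [1, 1, 0, 1]               # 0 marks a censored subject
    print(concordance_index(times, events, risks=[4, 3, 2, 1]))
```

A c-index of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which gives context for the reported averages of 0.640, 0.603, and 0.619. In this sketch each head produces its own attention distribution over the patches, which is the property that lets different heads attend to different regions of a slide.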
