Meta Learner with Linear Nulling

Aug 07, 2018

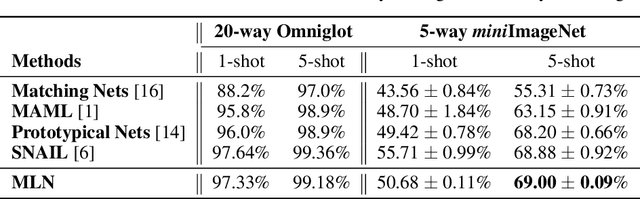

We propose a meta-learning algorithm that uses a linear transformer to carry out null-space projection of neural network outputs. The main idea is to construct a classification space such that the error signals arising during few-shot training are zero-forced on that space. The final decision on a test sample is made using a null-space-projected distance measure between the network output and label-dependent weights that were trained in the initial meta-learning phase. Our meta learner achieves the best or near-best accuracy among known methods on few-shot image classification tasks with Omniglot and miniImageNet. In particular, its advantage over other methods widens by significant margins as the classification task becomes more complicated.
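The null-space idea in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes that the error signal for each class is simply the difference between that class's label-dependent weight vector and the mean network output over its support samples, and that the projection basis is taken from an SVD of those error vectors. The names `null_space_basis`, `classify`, `refs`, and `class_means` are hypothetical, and random vectors stand in for real network outputs.

```python
import numpy as np

def null_space_basis(errors, tol=1e-10):
    """Return an orthonormal basis M (D x r) such that errors @ M is ~0.

    errors: (K, D) array, one error vector per class (assumed definition).
    """
    _, s, vt = np.linalg.svd(errors, full_matrices=True)
    # Numerical rank of the error matrix; the remaining right-singular
    # vectors span its null space.
    rank = int(np.sum(s > tol * s.max())) if s.size and s.max() > 0 else 0
    return vt[rank:].T

def classify(query, refs, M):
    """Pick the class whose projected reference vector is nearest the projected query."""
    q = query @ M                      # project the network output
    r = refs @ M                       # project the label-dependent weights
    distances = np.linalg.norm(r - q, axis=1)
    return int(np.argmin(distances))

# Toy 5-way episode with 16-dimensional outputs (values are illustrative only).
rng = np.random.default_rng(0)
K, D = 5, 16
refs = rng.normal(size=(K, D))         # stand-in for trained label-dependent weights
class_means = rng.normal(size=(K, D))  # stand-in for per-class mean support outputs
errors = refs - class_means            # assumed form of the few-shot error signals

M = null_space_basis(errors)           # errors are zero-forced in this subspace
predicted = classify(class_means[2], refs, M)
```

Because every error vector vanishes after projection, each class mean coincides with its reference vector in the projected space, so in this toy setup a query equal to a class mean is assigned to that class.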
